Introduction to Supercomputing

Overview

Teaching: 60 min

Exercises: 30 min

Topics

What is High-Performance Computing?

What is an HPC cluster or Supercomputer?

How does my computer compare with an HPC cluster?

Which are the main concepts in High-Performance Computing?

Objectives

Learn the components of the HPC

Learn the basic terminology in HPC

High-Performance Computing

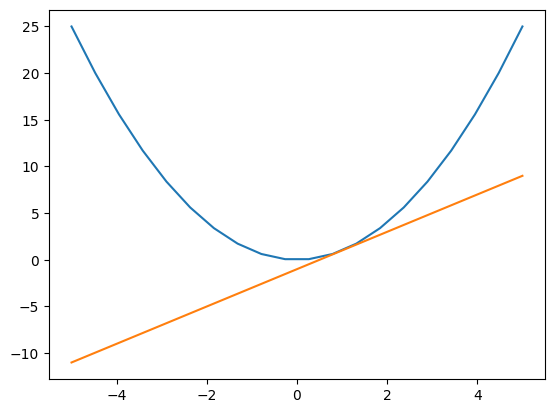

In everyday life, we do calculations all the time. Before paying for some items, we may want to know the total price. For that, we can do the sum in our heads, on paper, or with the calculator built into today’s smartphones. Those are simple operations. To compute the interest on a loan or mortgage, a spreadsheet or a web application designed for that purpose is a better tool. There are more demanding calculations, like those needed for computing statistics for a project, fitting experimental values to a theoretical function, or analyzing the features of an image. Modern computers are more than capable of these tasks, and many user-friendly applications can solve those problems on an ordinary computer.

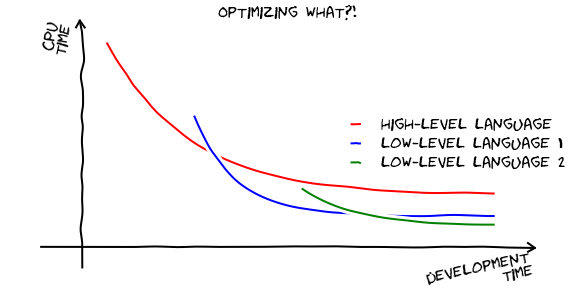

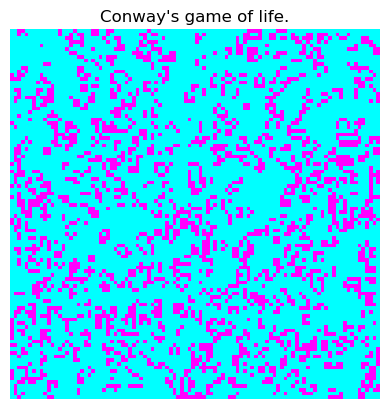

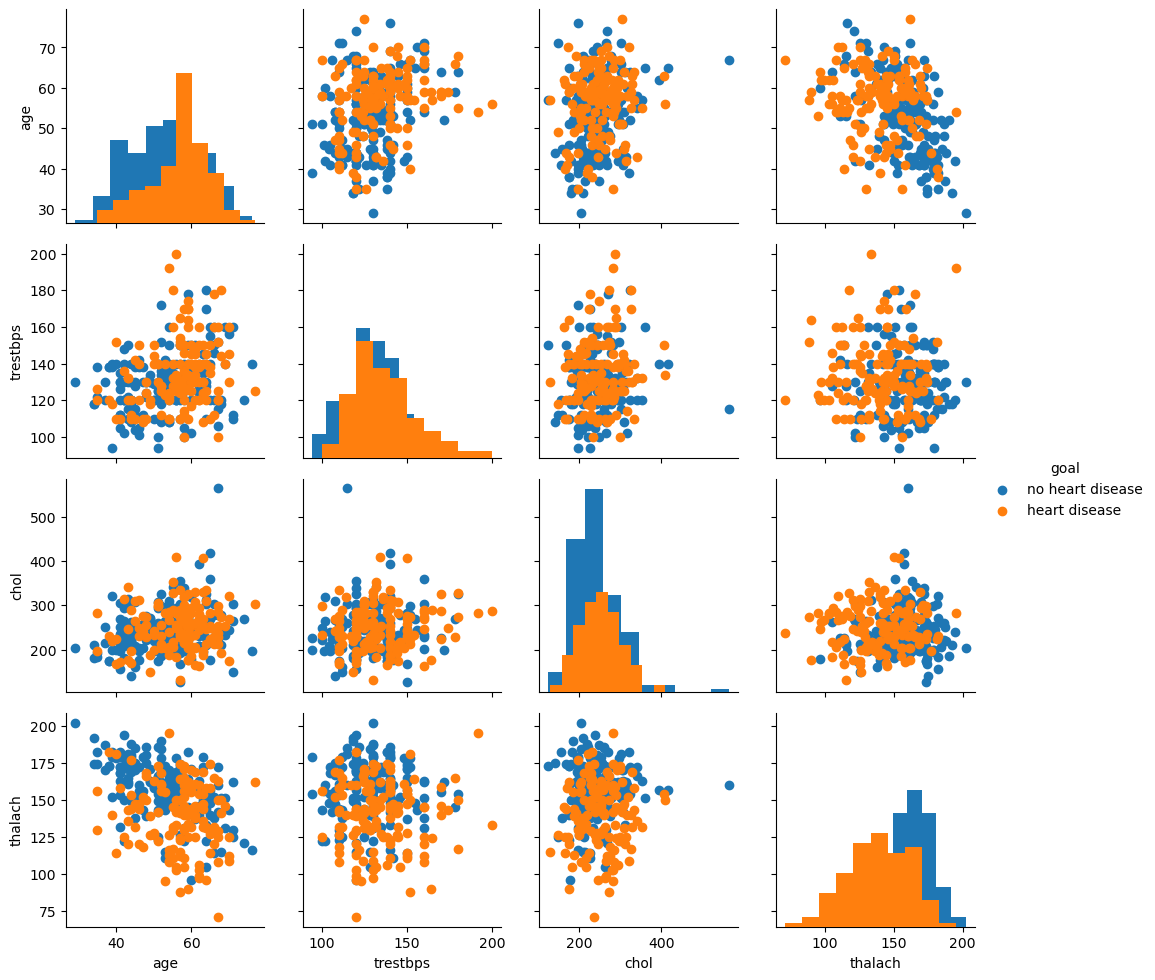

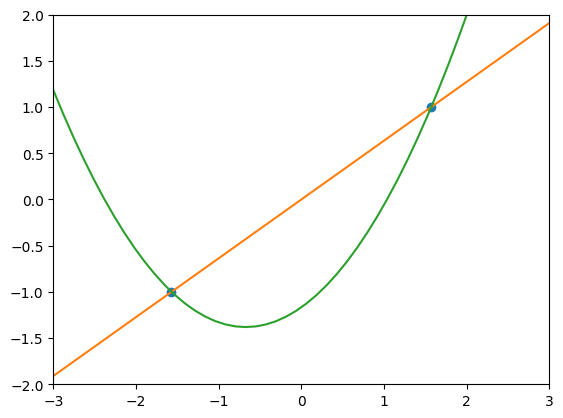

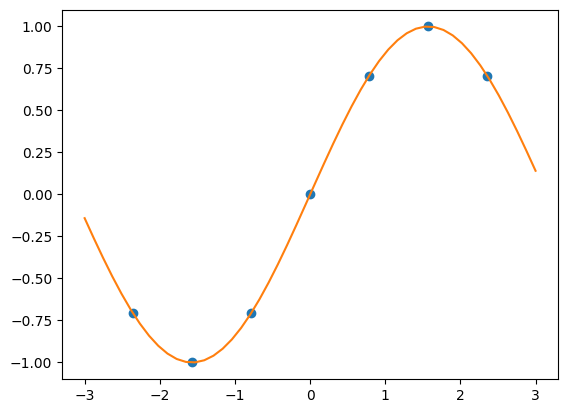

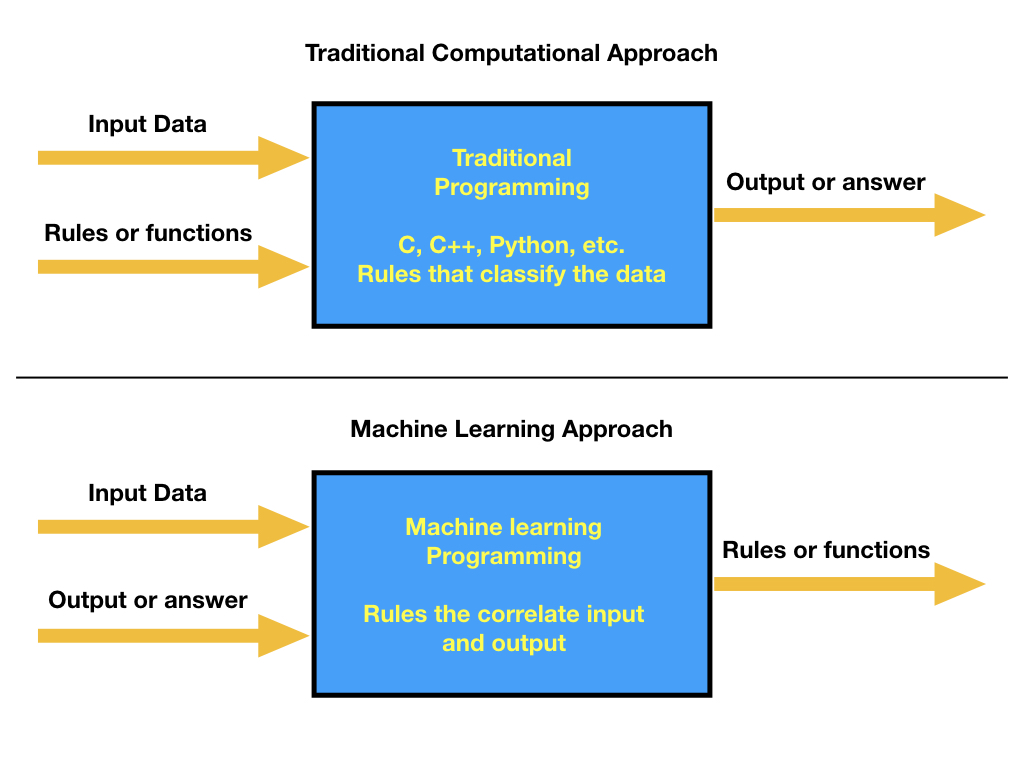

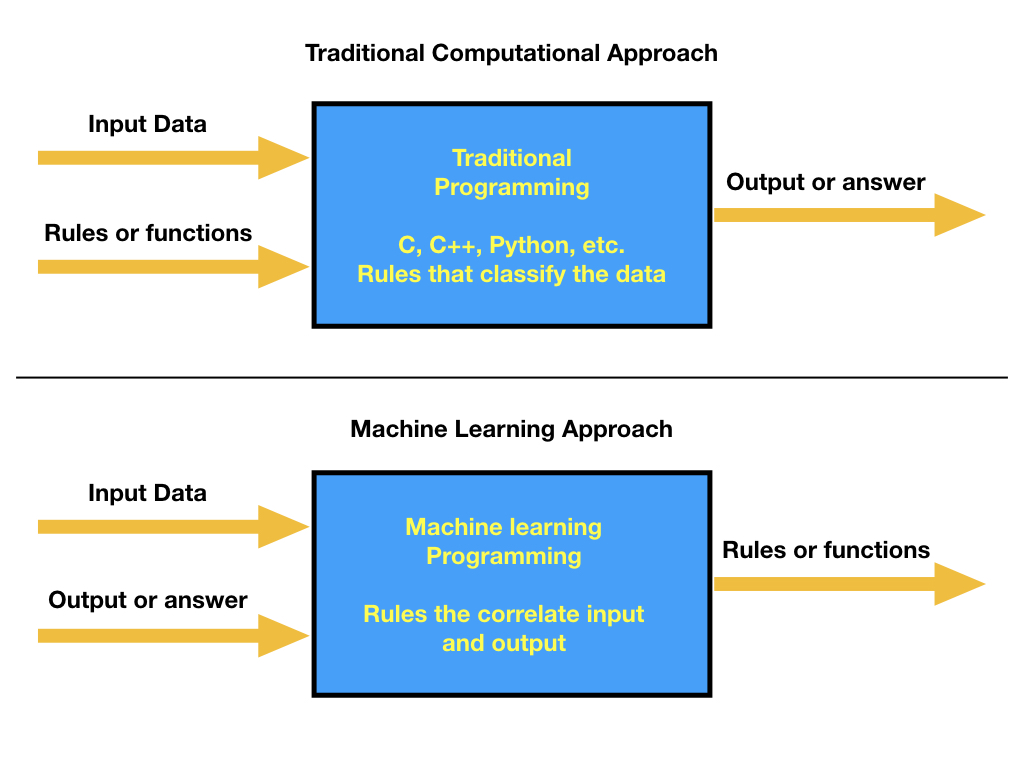

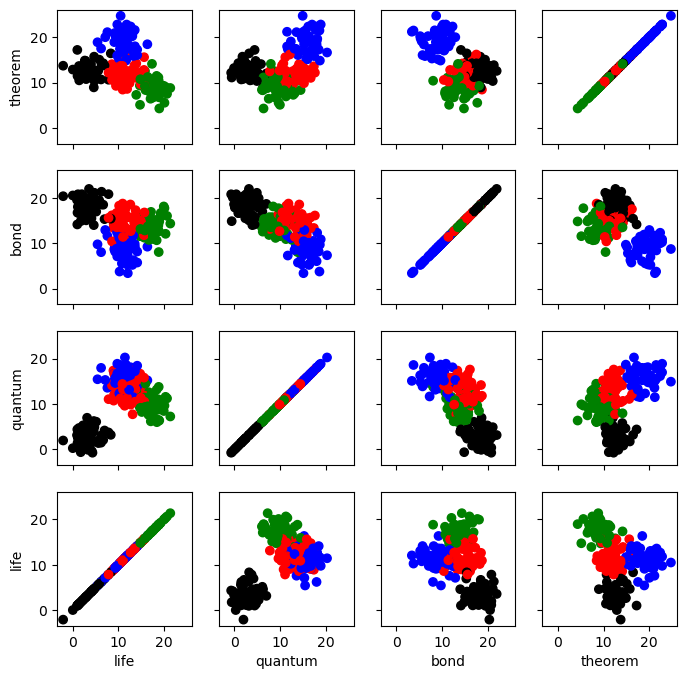

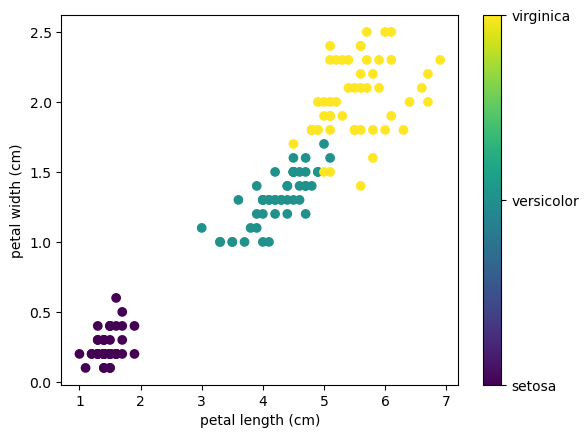

Scientific computing consists of using computers to answer questions that require computational resources. Several of the examples given fit the definition of scientific computations. Experimental problems can be modeled in the framework of some theory. We can use known scientific principles to simulate the behavior of atoms, molecules, fluids, bridges, or stars. We can train computers to recognize cancer on images or cardiac diseases from electrocardiograms. Some of those problems could be beyond the capabilities of regular desktop and laptop computers. In those cases, we need special machines capable of processing all the necessary computations in a reasonable time to get the answers we expect.

When the known solution to a computational problem exceeds what you can typically do with a single computer, we are in the realm of Supercomputing, and one area in supercomputing is called High-Performance Computing (HPC).

There are supercomputers of the most diverse kinds. Some of them do not resemble at all what you would think of as a computer. Those are machines designed from scratch for very particular tasks: all the electronics are specifically designed to run a narrow set of calculations very efficiently, and those machines can be as big as entire rooms.

However, there is a class of supercomputers made of machines relatively similar to regular computers. Regular desktop computers (towers), aggregated and connected with a network such as Ethernet, were among the first supercomputers built from commodity hardware. These clusters, called Beowulf clusters, were instrumental in developing cheaper supercomputers devoted to scientific computing.

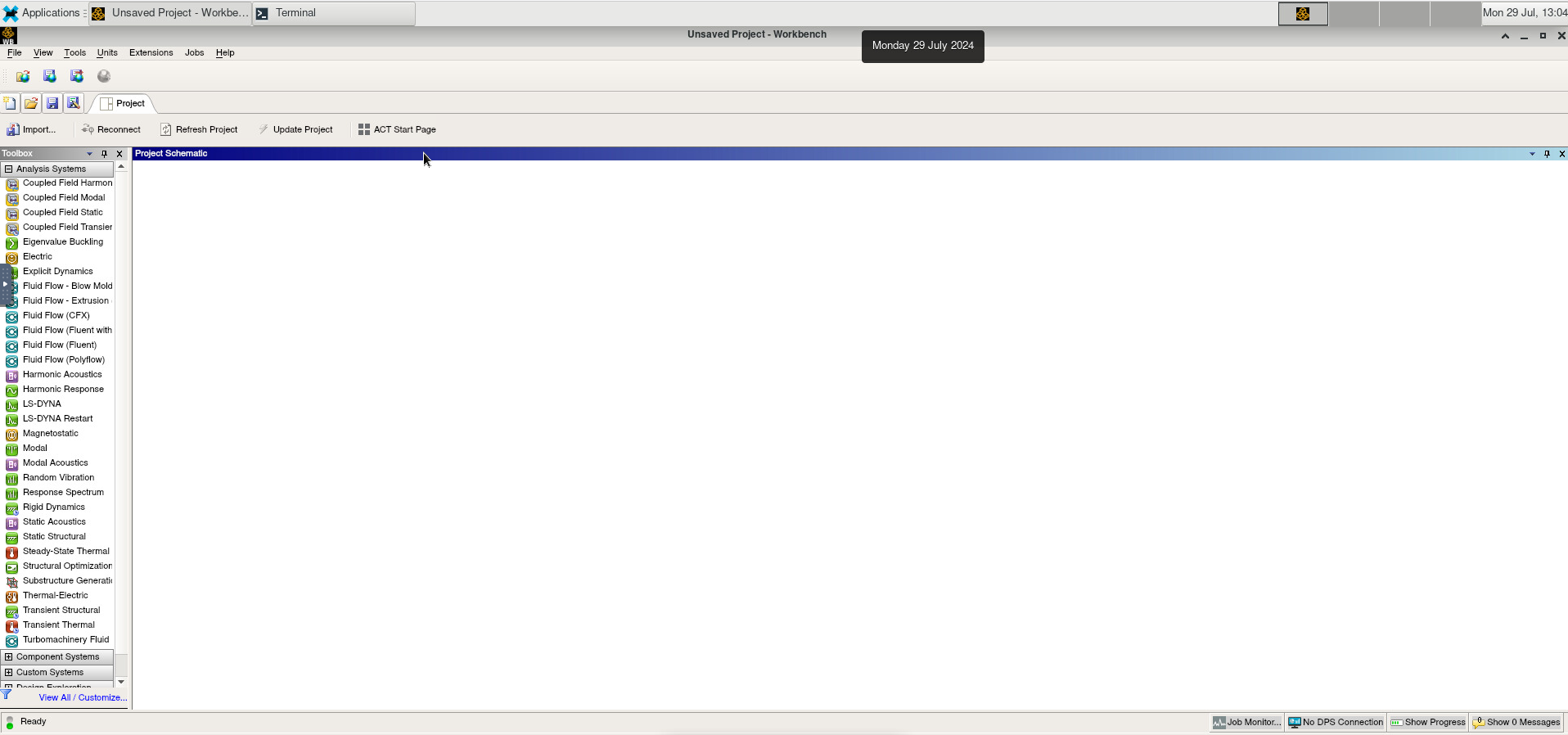

When more customized computers are used, those towers are replaced by slabs and positioned in racks. To increase the number of machines on the rack, several motherboards are sometimes added to a single chassis, and to improve performance, very fast networks are used. Those are what we understand today as HPC clusters.

In the world of HPC, machines are conceived based on the concepts of size and speed. The machines used for HPC are called supercomputers: big machines designed to perform large-scale calculations. Supercomputers can be built for particular tasks or as aggregates of relatively common computers; in the latter case, we call those machines HPC clusters. An HPC cluster comprises tens, hundreds, or even thousands of relatively normal computers, specially connected to perform intensive computational operations and using software that makes these computers appear as a single entity rather than a network of independent machines.

Those computers are called nodes and can work independently of each other or together on a single job. In most cases, the kind of operations that supercomputers try to solve involves extensive numerical calculations that take too much time to complete and, therefore, are unfeasible to perform on an ordinary desktop computer or even the most powerful workstations.

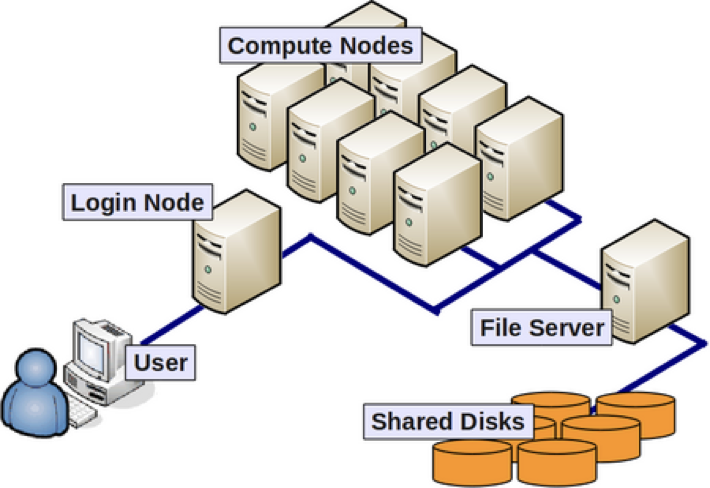

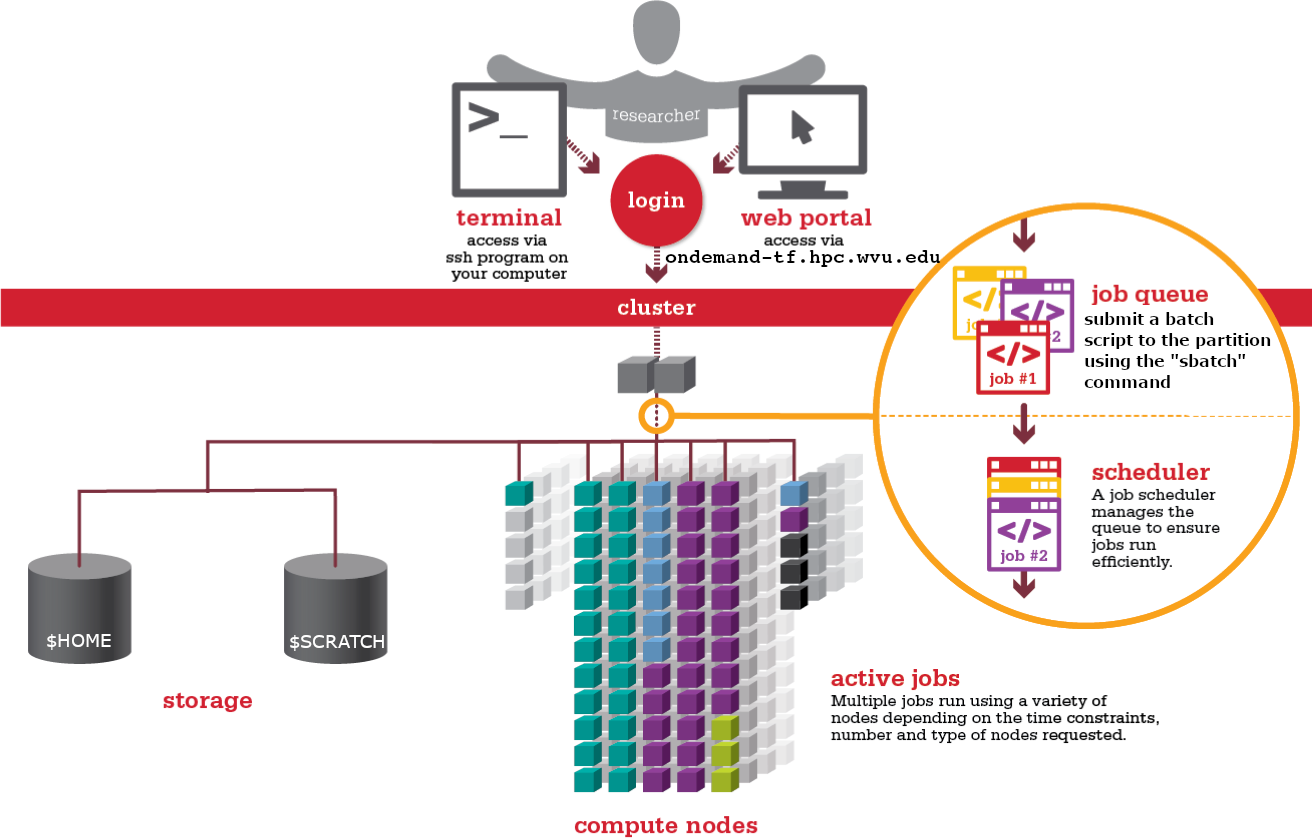

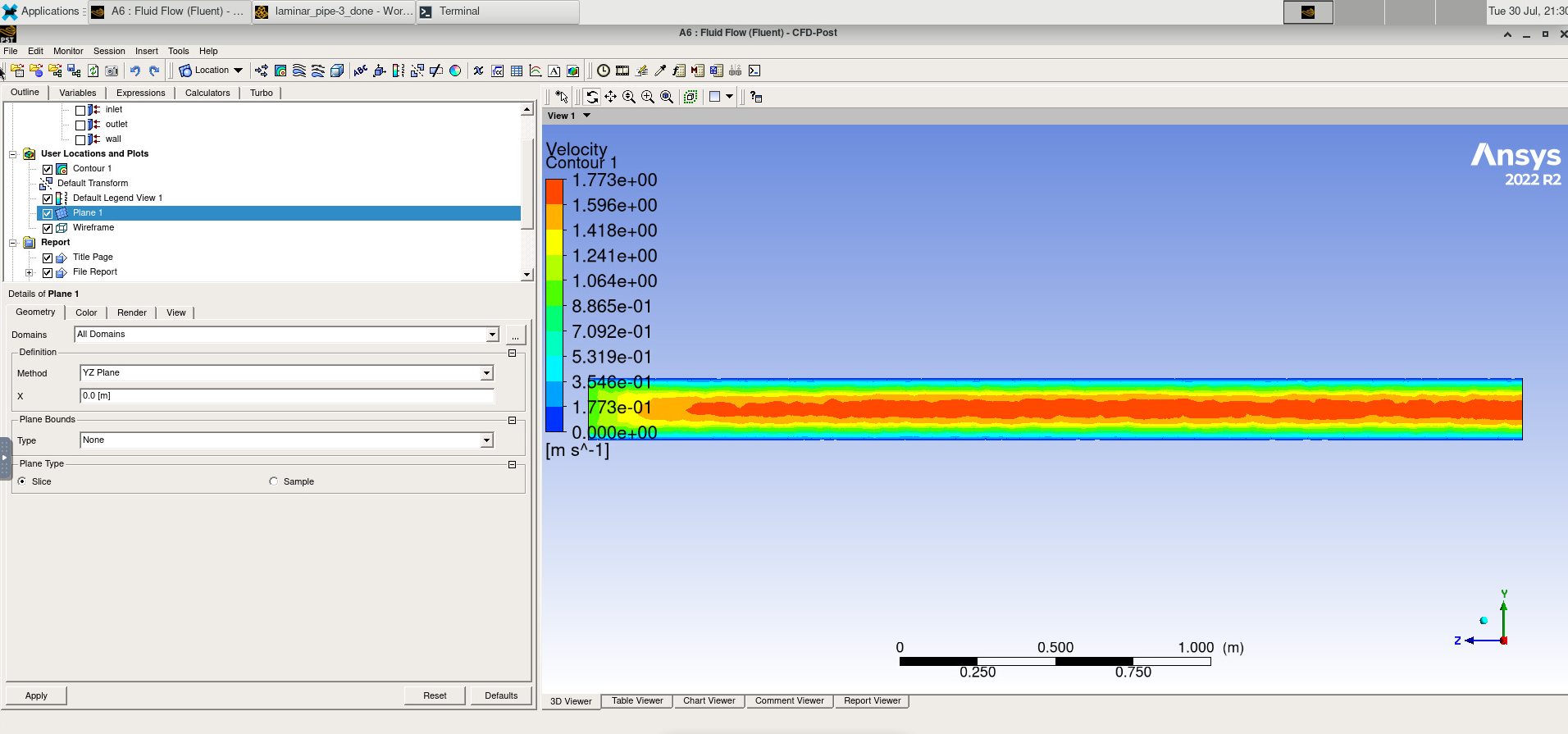

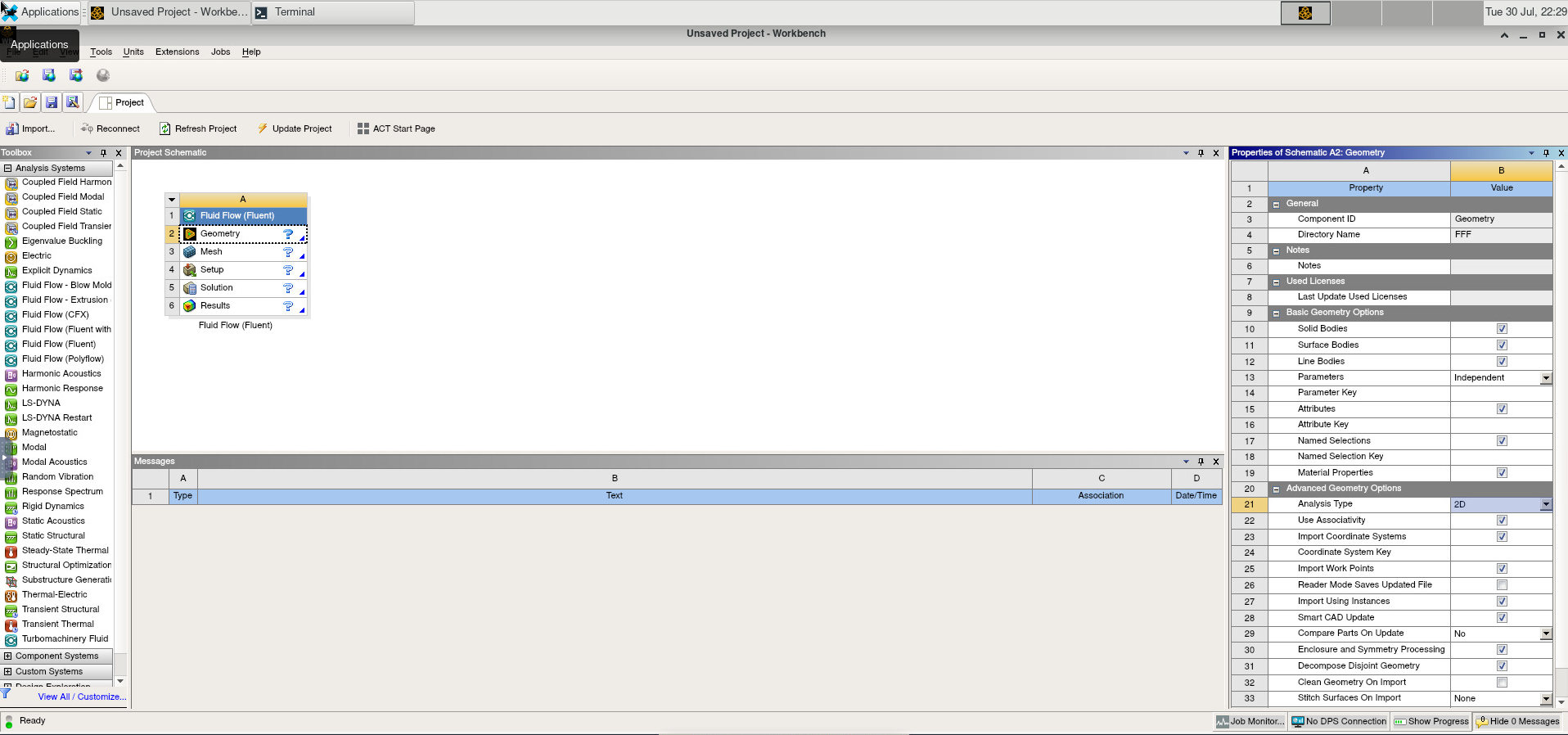

Anatomy of an HPC Cluster

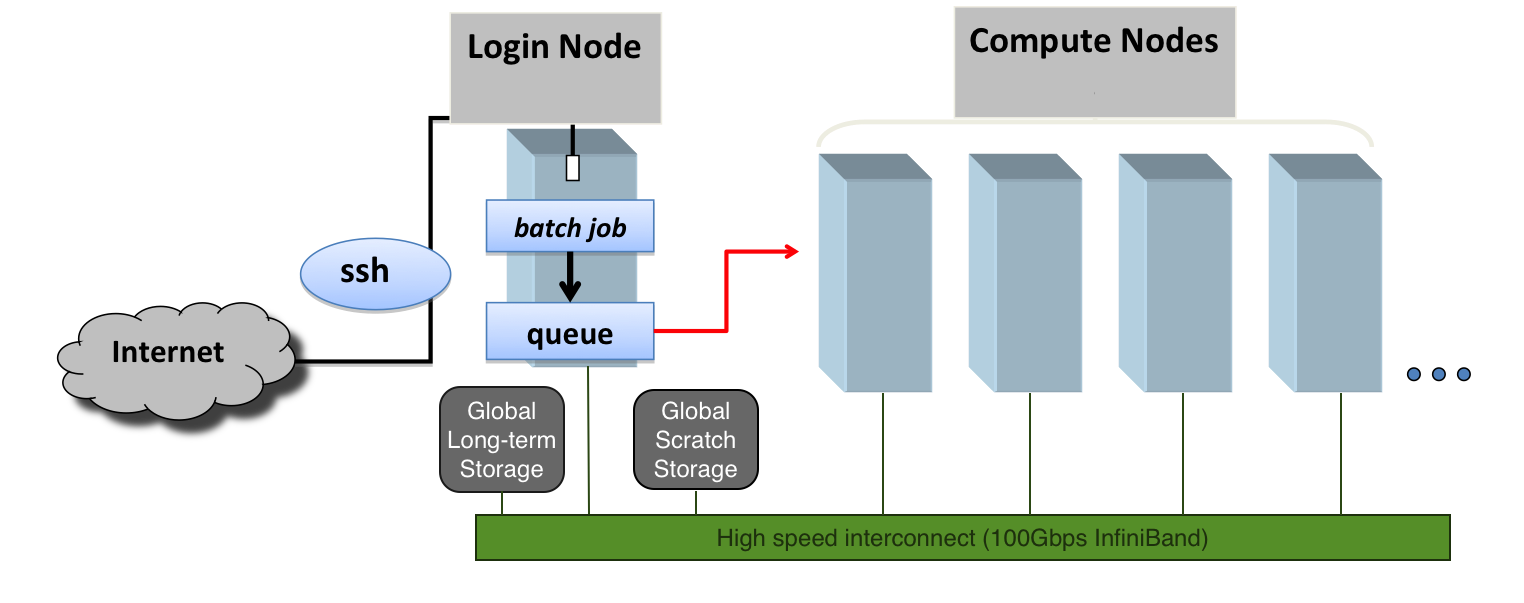

The diagram above shows that an HPC cluster comprises several computers, here depicted as desktop towers. Still, in modern HPC clusters, those towers are replaced by computers that can be stacked into racks. All those computers are called nodes, the machines that execute your jobs are called “compute nodes,” and all other computers in charge of orchestration, monitoring, storage, and allowing access to users are called “infrastructure nodes.” Storage is usually separated into nodes specialized to read and write from large pools of drives, either mechanical drives (HDD), solid-state drives (SSD), or even a combination of both. Access to the HPC cluster is done via a special infrastructure node called the “login node.” A single login node is enough in clusters serving a relatively small number of users. Larger clusters with thousands of users can have several login nodes to balance the load.

Despite an HPC cluster being composed of several computers, the cluster itself should be considered an entity, i.e., a system. In most cases, you are not concerned about where your code is executed or whether one or two machines are online or offline. All that matters is the capacity of the system to process jobs, execute your calculations in one of the many resources available, and deliver the results in a storage that you can easily access.

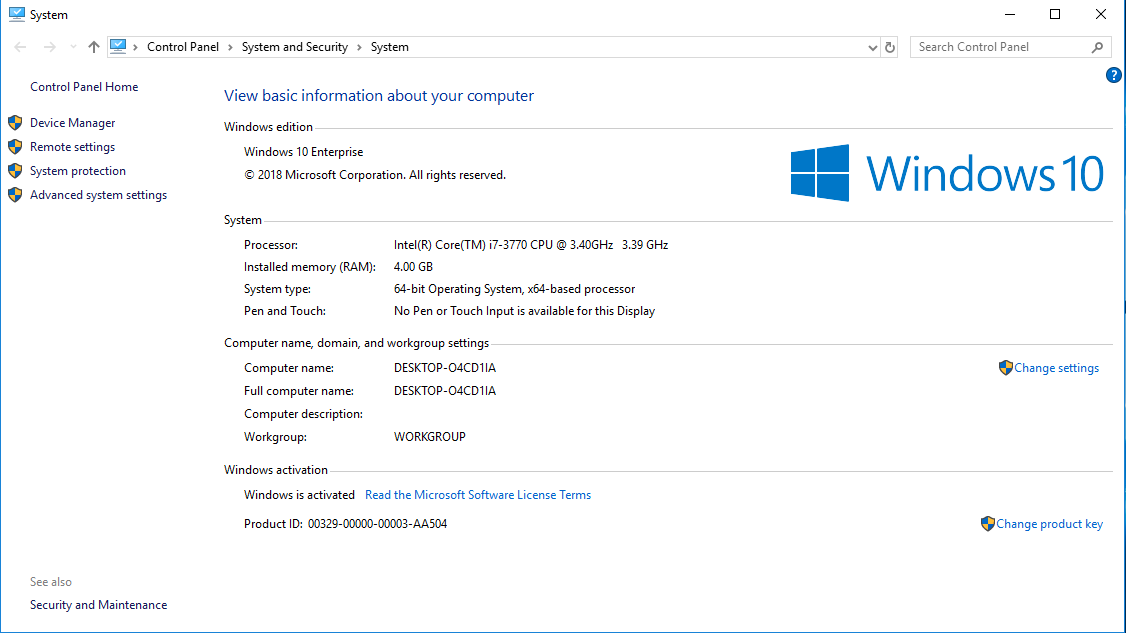

What are the specifications of my computer?

One way of understanding what Supercomputing is all about is to start by comparing an HPC cluster with your desktop computer. This is a good way of understanding supercomputers’ scale, speed, and power.

The first exercise consists of collecting critical information about the computer you have in front of you. We will use that information to identify the features of our HPC cluster. Gather information about the CPU, number of Cores, Total RAM, and Hard Drive from your computer.

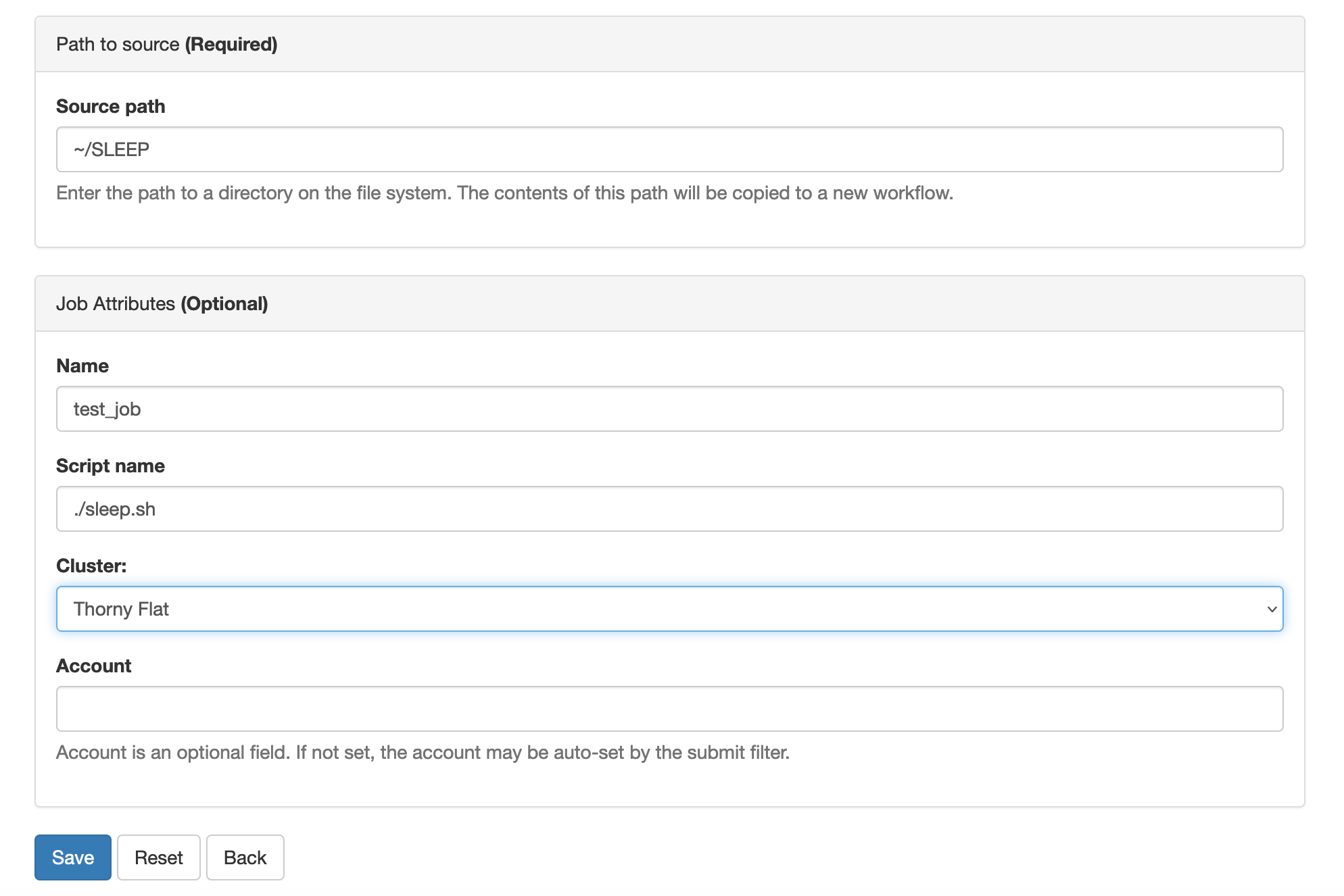

You can see specs for our cluster Thorny Flat

Try to gather an idea of the Hardware present on your machine and see the hardware we have on Thorny Flat.

Here are some tricks to get that data from several operating systems:

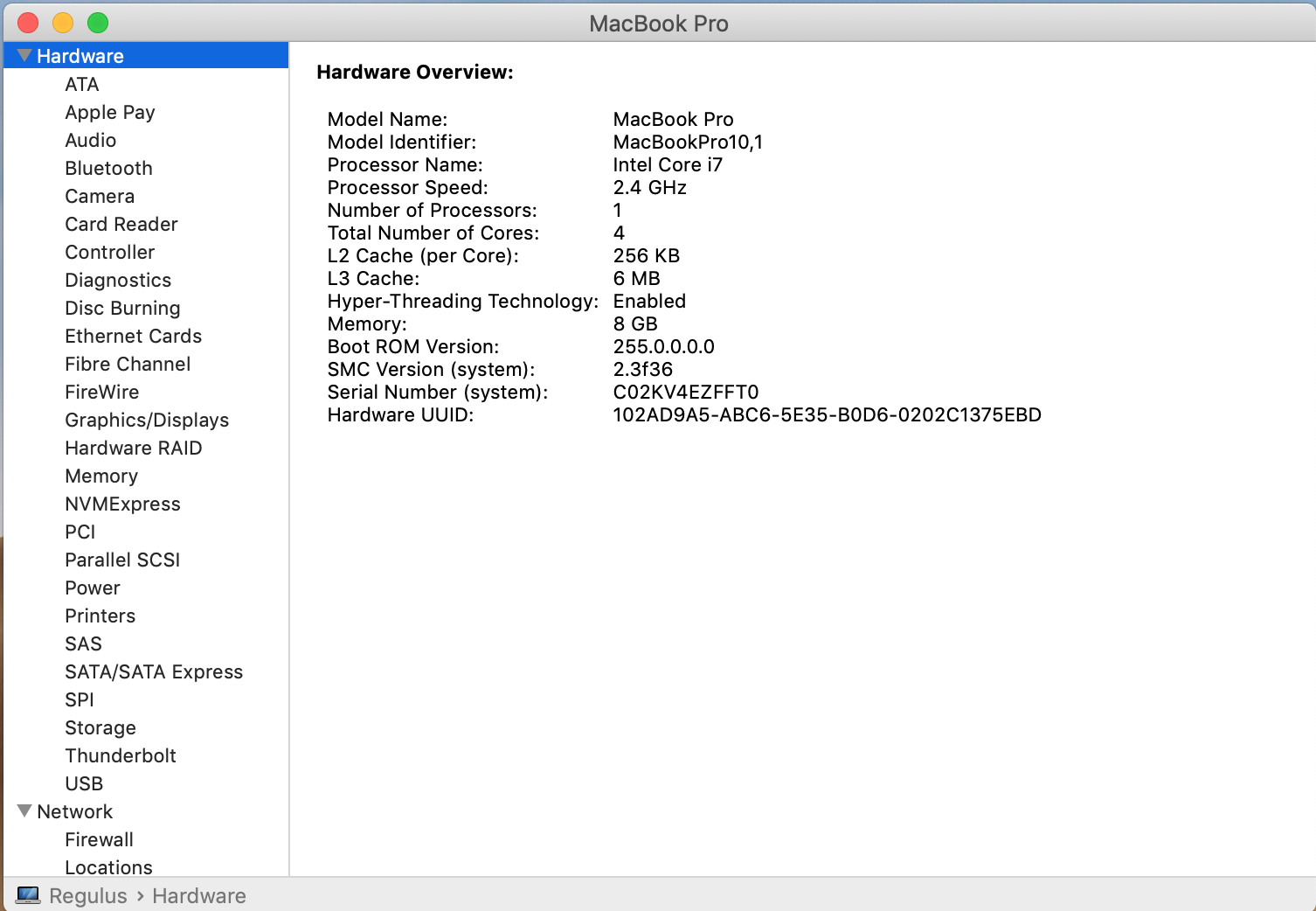

If you want more data click on "System Report..." and you will get:

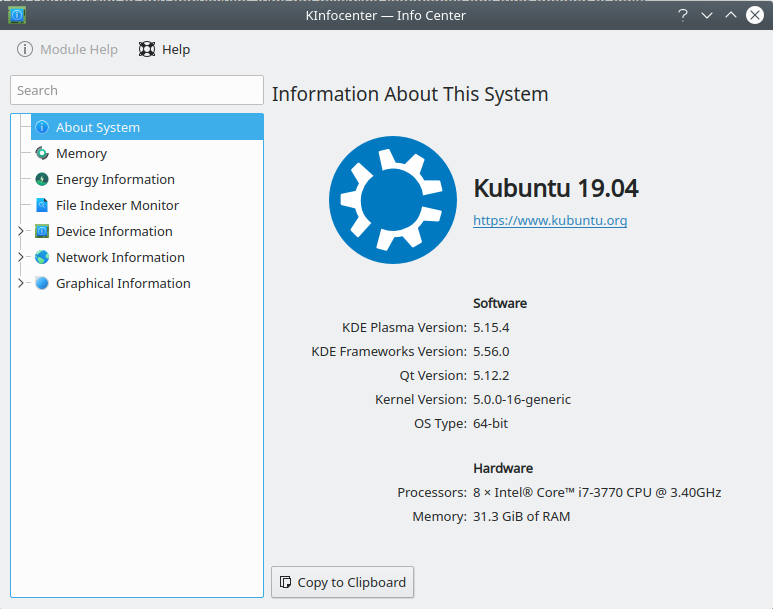

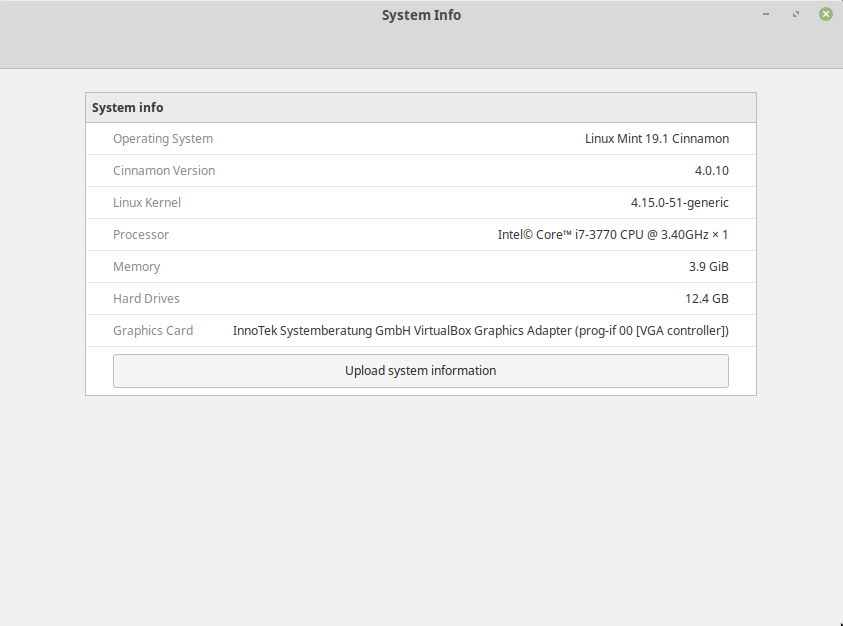

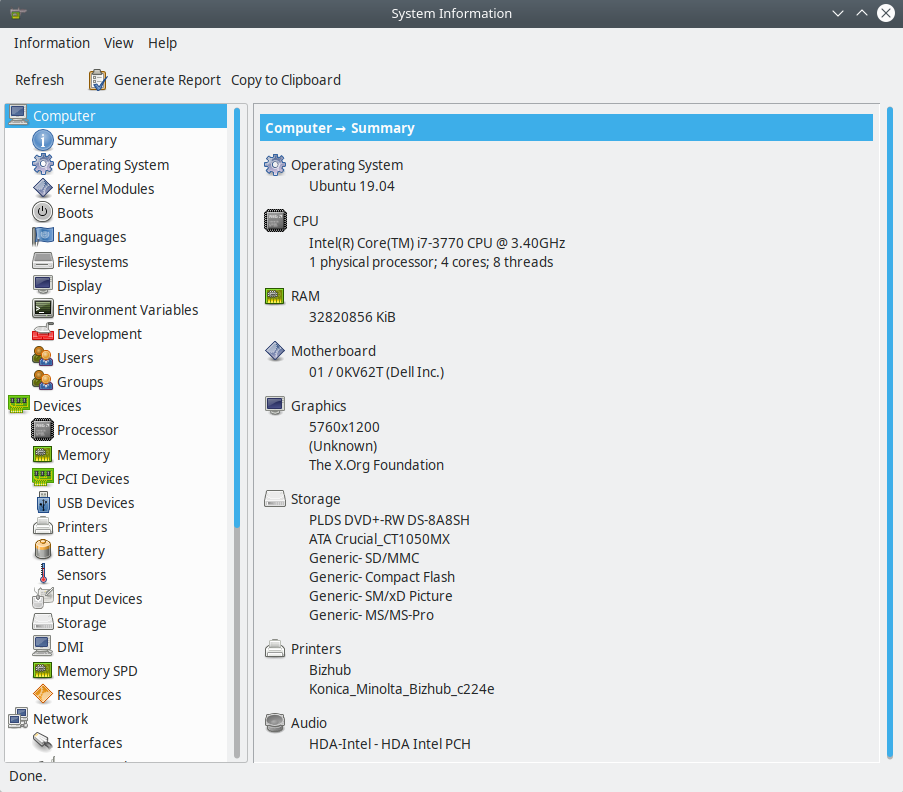

In Linux, gathering the data from a GUI depends much more on the exact distribution you use. Here are some tools that you can try:

KDE Info Center

Linux Mint Cinnamon System Info
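On Linux, the same information can also be read from a terminal. The following is a minimal sketch, assuming a Linux system where the nproc, awk, and df commands and the /proc/meminfo file are available. The function names count_cores and total_ram_gib are illustrative, not standard tools.

```bash
# Sketch for Linux. count_cores and total_ram_gib are illustrative names.

# Number of processing units (logical cores) available to this shell.
count_cores() {
    nproc
}

# Total RAM in GiB. /proc/meminfo reports MemTotal in kB (Linux only).
total_ram_gib() {
    awk '/^MemTotal:/ { printf "%.1f\n", $2 / 1024 / 1024 }' /proc/meminfo
}

echo "Cores:     $(count_cores)"
echo "Total RAM: $(total_ram_gib) GiB"

# Size, used space, and free space of the root filesystem.
df -h /
```

The numbers printed here are the ones to compare against the specifications of the cluster.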

Advantages of using an HPC cluster for research

Using a cluster often has the following advantages for researchers:

- Speed. An HPC cluster has many more CPU cores, often with higher performance specs, than a typical laptop or desktop, so HPC systems can offer a significant speedup.

- Volume. Many HPC systems have the processing memory (RAM) and disk storage to handle large amounts of data. Many GB of RAM and terabytes (TB) of storage are available for research projects. Desktop computers rarely reach the same amount of memory and storage.

- Efficiency. Many HPC systems operate a pool of resources drawn on by many users. In most cases, when the pool is large and diverse enough, the resources on the system are used almost constantly. A healthy HPC system usually achieves utilization above 80%. A normal desktop computer is idle for most of the day.

- Cost. Bulk purchasing and government funding mean that the cost to a research community of using these systems is significantly less than it would be otherwise. There are also savings in energy and human maintenance costs compared with desktop computers.

- Convenience. Maybe your calculations take a long time to run or are otherwise inconvenient to run on your personal computer. There’s no need to tie up your computer for hours when you can use someone else’s instead. Running on your machine could make it impossible to use it for other common tasks.

Compute nodes

On an HPC cluster, we have many machines, and each of them is a perfectly functional computer. Each one runs its own copy of the operating system and has its own mainboard, memory, and CPUs. The internal components are essentially the same as those inside a desktop or laptop computer. The differences lie in details like heat management systems, remote administration, subsystems to notify errors, special network storage devices, and parallel filesystems. These subtle but important and expensive differences set HPC clusters apart from Beowulf clusters and normal PCs.

There are several kinds of computers in an HPC cluster. Most machines are used for running scientific calculations and are called compute nodes. A few machines are dedicated to administrative tasks, controlling the software that distributes jobs in the cluster, monitoring the health of all compute nodes, and interacting with the distributed storage devices. Among those administrative nodes, one or more are dedicated to being the front door to the cluster; they are called head nodes (the login nodes mentioned earlier). On small to medium-sized HPC clusters, just one head node is enough; on larger systems, we can find several head nodes, and you can end up connecting to one of them at random to balance the load between them.

You should never run intensive operations on the head node. Doing so prevents the node from fulfilling its primary purpose, which is to serve other users, giving them access and allowing them to submit and manage the jobs running on the cluster. Instead of running on the head node, we use special software, a queue system, to submit jobs to the cluster. We will discuss it later on in this lesson.

Central Processing Units

CPU Brands and Product lines

Only two manufacturers hold most of the market for consumer PC processors: Intel and AMD. Several other manufacturers offer CPUs mainly for smartphones, cameras, musical instruments, and very specialized supercomputers and related equipment.

More than a decade ago, clock speed was the main feature used to market a CPU. That has changed, as CPUs are no longer getting much faster through higher clock speeds. It is hard to market the performance of a new processor with a single number. That is why CPUs are now marketed with “product lines” and “model numbers.” Those numbers bear no direct relation to the actual characteristics of a given processor.

For example, Intel Core i3 processors are marketed for entry-level machines that are more tailored to basic computing tasks like word processing and web browsing. On the other hand, Intel’s Core i7 and i9 processors are for high-end products aimed at top-of-the-line gaming machines, which can run the most recent titles at high FPS and resolutions. Machines for enterprise usage are usually under the Xeon Line.

On AMD’s side, you have the Athlon line aimed at entry-level users, and the Ryzen(TM) line, from Ryzen(TM) 3 for essential applications to Ryzen(TM) 9, designed for enthusiasts and gamers. AMD also has product lines for enterprises, like the EPYC server processors.

Cores

Consumer-level CPUs up to the 2000s only had one core, but Intel and AMD hit a brick wall with incremental clock speed improvements: heat and power consumption scale non-linearly with clock speed. That brings us to the current trend: CPUs now have two, three, four, eight, or sixteen cores on a single CPU instead of a single core. That means each CPU (in marketing terms) is several CPUs (in actual component terms).

There is a good metaphor, though I cannot claim it as mine, about CPUs, cores, and threads. The computer is like a kitchen; the kitchen could have one stove (CPU) or several stoves (dual socket, for example). Each stove has multiple burners (cores), and you have multiple pieces of cookware like pans, casseroles, and pots (threads). You (the OS) have to manage to cook everything in time, so you move a pan off the burner to cook something else if needed and put it back later to keep it warm.

Hyperthreading

Hyper-threading is intrinsically linked to cores and is best understood as a proprietary technology that allows the operating system to recognize the CPU as having double the number of cores.

In practical terms, a CPU with four physical cores would be recognized by the operating system as having eight virtual cores or capable of dealing with eight threads of execution. The idea is that by doing that, the CPU is expected to better manage the extra load by reordering execution and pipelining the workflow to the actual number of physical cores.
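The count seen by the operating system is simply the product of sockets, cores per socket, and hardware threads per core. A minimal sketch of that arithmetic, where logical_cpus is an illustrative function name; on Linux, the three input values are the ones lscpu reports as "Socket(s)", "Core(s) per socket", and "Thread(s) per core".

```bash
# logical_cpus SOCKETS CORES_PER_SOCKET THREADS_PER_CORE  (illustrative name)
# Prints how many logical CPUs the operating system would report.
logical_cpus() {
    echo $(( $1 * $2 * $3 ))
}

logical_cpus 1 4 2   # one CPU, four cores, hyper-threading on: prints 8
logical_cpus 1 4 1   # the same CPU with hyper-threading off: prints 4
```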

In the context of HPC, as loads are high for the CPU, activating hyper-threading is not necessarily beneficial for intensive numerical operations, and the question of whether that brings a benefit is very dependent on the scientific code and even the particular problem being solved. In our clusters, Hyper-threading is disabled on all compute nodes and enabled on service nodes.

CPU Frequency

Back in the 80s and 90s, CPU frequency was the most important feature of a CPU or at least that was how it was marketed.

Other names for CPU frequency are “clock rate” or “clock speed”. CPUs work in discrete steps instead of a continuous flow of information. Today, the speed of the CPU is measured in GHz, that is, in billions of clock cycles per second. 1 Hz equals one cycle per second, so a 2 GHz CPU completes 2 billion clock cycles every second. How many instructions are completed in each cycle depends on the design of the processor.

The higher the frequency, the more operations can be done. However, today that is not the whole story. Modern CPUs have complex CPU extensions (SSE, AVX, AVX2, and AVX512) that allow the CPU to execute several numerical operations on a single clock step.
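The combined effect of clock rate and vector extensions can be estimated with simple arithmetic: cores × cycles per second × operations per cycle. The following is a minimal sketch using shell integer arithmetic. The name peak_gops is illustrative, and the inputs (8 operations per cycle, 40 cores) are example values, not the specification of any particular CPU. The result is a theoretical upper bound that real codes rarely reach.

```bash
# peak_gops CORES CLOCK_MHZ OPS_PER_CYCLE  (illustrative name)
# Theoretical peak in billions of operations per second (integer division).
peak_gops() {
    echo $(( $1 * $2 * $3 / 1000 ))
}

peak_gops 1 2000 1    # one core at 2 GHz, one operation per cycle: prints 2
peak_gops 1 2000 8    # same core, 8 operations per cycle (assumed): prints 16
peak_gops 40 2000 8   # 40 such cores working together: prints 640
```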

On the other hand, CPUs can now change the speed up to certain limits, raising and lowering the value if needed. Sometimes raising the CPU frequency of a multicore CPU means that some cores are disabled.

One technique used to increase the performance of a CPU core is called overclocking. Overclocking is when the base frequency of a CPU is altered beyond the manufacturer’s official clock rate by user-generated means. In HPC, overclocking is not used, as doing so increases the chances of instability of the system. Stability is a well-regarded priority for a system intended for multiple users conducting scientific research.

Cache

The cache is a small, high-speed memory that is part of the CPU and holds data and instructions so that they can be retrieved quickly when they are needed again. It’s very similar to RAM in that it acts as a temporary holding pen for data. However, CPUs access this memory in chunks, and the mapping to RAM is different.

Contrary to RAM, whose modules are independent hardware, cache sits on the CPU itself, so access times are significantly faster. The cache is an important portion of the production cost of a CPU, to the point where one of the differences between Intel’s main consumer lines, the Core i3s, i5s, and i7s, is the size of the cache memory.

There are several cache memories inside a CPU. They are called cache levels, or hierarchies, a bit like a pyramid: L1, L2, and L3. The lower the level the closer to the core.

From the HPC perspective, the cache size is an important feature for intensive numerical operations. Many CPU cycles are lost if you need to bring data all the time from the RAM or, even worse, from the Hard Drive. So, having large amounts of cache improves the efficiency of HPC codes. You, as an HPC user, must understand a bit about how cache works and impacts performance; however, users and developers have no direct control over the different cache levels.

Learn to read computer specifications

One of the central differences between one computer and another is the CPU, the chip or set of chips that control most of the numerical operations. When reading the specifications of a computer, you need to pay attention to the amount of memory, whether the drive is SSD or not, the presence of a dedicated GPU card, and several factors that could or could not be relevant for your computer. Have a look at the CPU specifications on your machine.

Intel

If your machine uses Intel processors, go to https://ark.intel.com and enter the model of CPU you have. An example of an Intel model name is “E5-2680 v3”.

AMD

If your machine uses AMD processors, go to https://www.amd.com/en/products/specifications/processors and check the details for your machine.

Storage

Storage devices are another area where general supercomputers and HPC clusters differ from normal computers and consumer devices. On a normal computer, you have, in most cases, just one hard drive, maybe a few in some configurations, but that is all. Storage devices are measured by their capacity to store data and the speed at which the data can be written and retrieved from those devices. Today, hard drives are measured in GigaBytes (GB) and TeraBytes (TB). One Byte is a sequence of 8 bits, with a bit being a zero or one. One GB is roughly one billion (10^9) bytes, and a TB is about 1000 GB. Today, it is common to find Hard Drives with 8 or 16 TB per drive.
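The unit relations above can be checked with a few lines of shell arithmetic. This is a minimal sketch using the decimal units described in the text (steps of 1000); to_decimal_unit is an illustrative name, and the result is truncated to a whole number.

```bash
# to_decimal_unit BYTES  (illustrative name)
# Prints a byte count in the largest decimal unit that keeps the value >= 1.
to_decimal_unit() {
    bytes=$1
    for unit in B kB MB GB TB PB; do
        # Stop at the first unit where the value drops below 1000,
        # or at PB, the largest unit handled by this sketch.
        if [ "$bytes" -lt 1000 ] || [ "$unit" = "PB" ]; then
            break
        fi
        bytes=$(( bytes / 1000 ))
    done
    echo "$bytes $unit"
}

to_decimal_unit 1000000000        # prints "1 GB"
to_decimal_unit 16000000000000    # prints "16 TB"
```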

On an HPC cluster, special storage is needed, mainly for three reasons. First, you need to store a far larger amount of data: a few TB is not enough; we need hundreds of TB, maybe petabytes (PB), i.e., thousands of TB. Second, the data is read and written concurrently by all the nodes on the machine. Third, speed and resilience are important. For those reasons, data is not stored on a single drive; it is spread across multiple physical hard drives, allowing faster retrieval times and preserving the data in case one or more physical drives fail.

Network

Computers today connect to the internet or other computers via WiFi or Ethernet. Those connections are typically limited to a few gigabits per second (Gb/s), which is too slow for HPC clusters, where compute nodes need to exchange data for large computational tasks performed by multiple compute nodes simultaneously.

On HPC clusters, we find very specialized networks that outperform Ethernet in several respects. Two important concepts when dealing with data transfer are bandwidth and latency. Bandwidth is the amount of data that can be transferred across a given medium per unit of time. Latency is the delay before the first bit reaches the other end. Both are important in HPC data communication: expensive network devices raise bandwidth and reduce latency. Examples of network technologies in HPC are InfiniBand and Omni-Path.
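A simple way to see how the two quantities interact is the estimate: transfer time = latency + message size / bandwidth. The sketch below uses that model with integer arithmetic in microseconds. The function name transfer_time_us is illustrative, and the latency and bandwidth values are made-up round numbers for two hypothetical networks, not measurements of any real technology.

```bash
# transfer_time_us LATENCY_US SIZE_BYTES BANDWIDTH_BYTES_PER_S  (illustrative name)
# Estimated time in microseconds to deliver one message (integer division).
transfer_time_us() {
    echo $(( $1 + $2 * 1000000 / $3 ))
}

# Hypothetical slow network: 50 us latency, 125000000 bytes/s.
# Hypothetical fast network:  2 us latency, 12500000000 bytes/s.
transfer_time_us 50 8 125000000              # tiny message, slow network: prints 50
transfer_time_us 2 8 12500000000             # tiny message, fast network: prints 2
transfer_time_us 50 1000000000 125000000     # 1 GB, slow network: prints 8000050
transfer_time_us 2 1000000000 12500000000    # 1 GB, fast network: prints 80002
```

In this model, small messages are dominated by latency and large messages by bandwidth, which is why both matter when many nodes exchange data.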

WVU High-Performance Computer Clusters

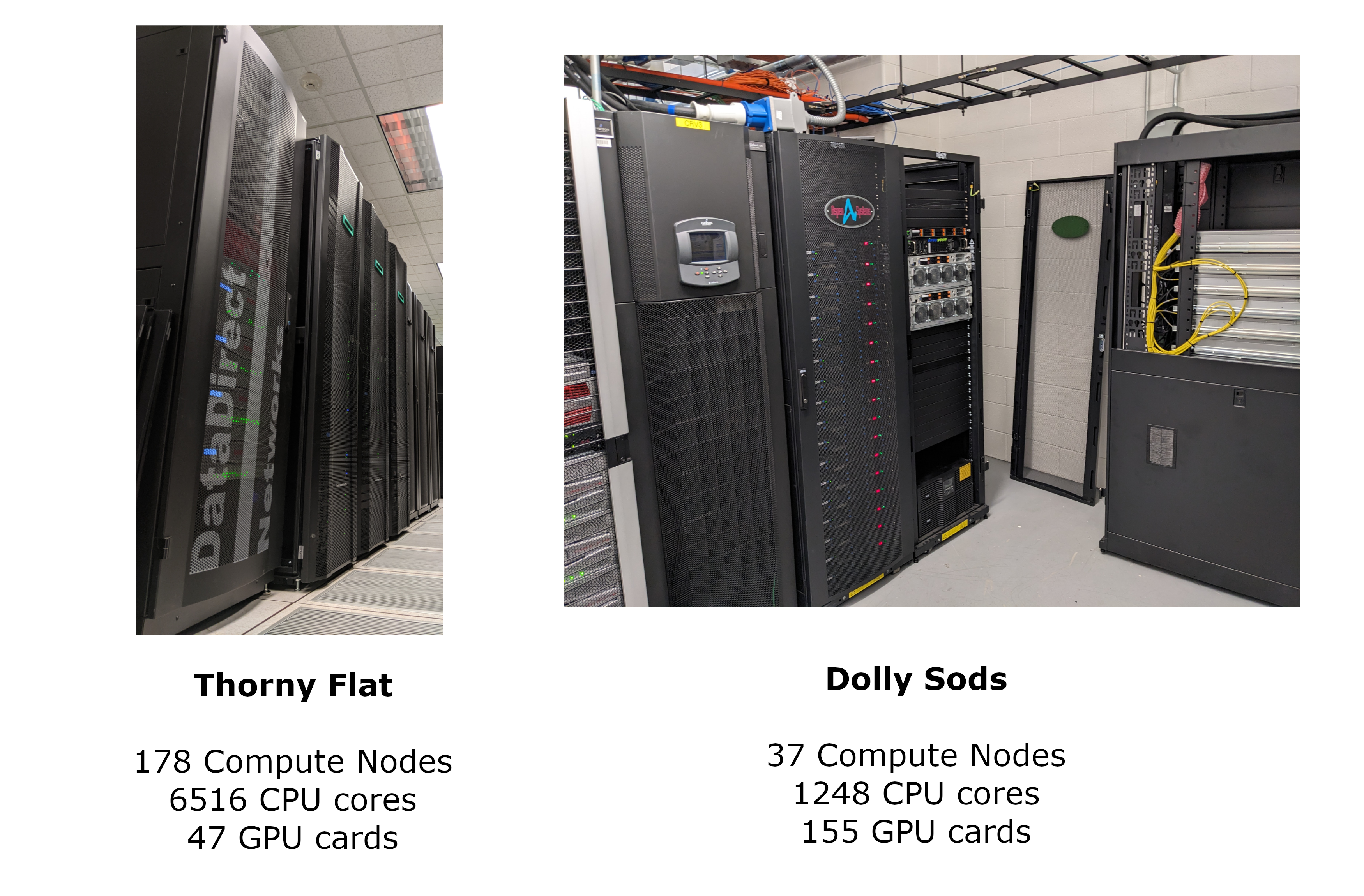

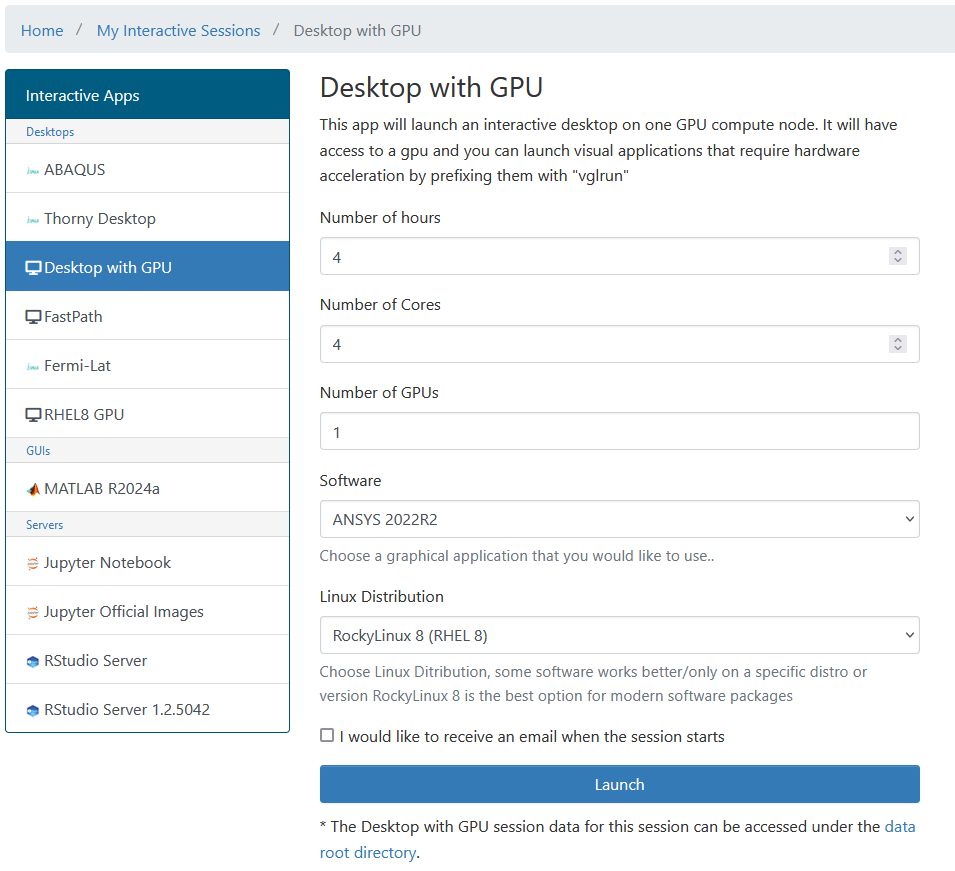

West Virginia University has two main clusters: Thorny Flat and Dolly Sods, our newest cluster that is specialized in GPU computing.

Thorny Flat

Thorny Flat is a general-purpose HPC cluster with 178 compute nodes; most nodes have 40 CPU cores. The total CPU core count is 6516 cores. There are 47 NVIDIA GPU cards, including P6000, RTX6000, and A100 models.

Dolly Sods

Dolly Sods is our newest cluster, and it is specialized in GPU computing. It has 37 nodes and 155 NVIDIA GPU cards, including A30, A40, and A100 models. The total CPU core count is 1248.

Command Line

Using HPC systems often involves the use of a shell through a command line interface (CLI) and either specialized software or programming techniques. The shell is a program whose job is to run other programs rather than to do calculations or similar tasks itself. What the user types goes into the shell, which then figures out what commands to run and orders the computer to execute them. (Note that the shell is called “the shell” because it encloses the operating system in order to hide some of its complexity and make it simpler to interact with.) The most popular Unix shell is Bash, the Bourne Again SHell (so-called because it’s derived from a shell written by Stephen Bourne). Bash is the default shell on most modern implementations of Unix and in most packages that provide Unix-like tools for Windows.

Interacting with the shell is done via a command line interface (CLI) on most HPC systems. In the earliest days of computers, the only way to interact with them was to rewire them. From the 1950s to the 1980s most people used line printers. These devices only allowed input and output of the letters, numbers, and punctuation found on a standard keyboard, so programming languages and software interfaces had to be designed around that constraint, and text-based interfaces were the way to do this. A typing-based interface is often called a command-line interface, or CLI, to distinguish it from a graphical user interface, or GUI, which most people now use. The heart of a CLI is a read-evaluate-print loop, or REPL: when the user types a command and then presses the Enter (or Return) key, the computer reads it, executes it, and prints its output. The user then types another command, and so on until the user logs off.

Learning to use Bash or any other shell sometimes feels more like programming than like using a mouse. Commands are terse (often only a couple of characters long), their names are frequently cryptic, and their output is lines of text rather than something visual like a graph. However, using a command line interface can be extremely powerful, and learning how to use one will allow you to reap the benefits described above.

Secure Connections

The first step in using a cluster is establishing a connection from our laptop to the cluster. When we are sitting at a computer (or standing, or holding it in our hands or on our wrists), we expect a visual display with icons, widgets, and perhaps some windows or applications: a graphical user interface, or GUI. Since computer clusters are remote resources that we connect to over slow or intermittent interfaces (WiFi and VPNs especially), it is more practical to use a command-line interface, or CLI, to send commands as plain text. If a command returns output, it is printed as plain text as well. The commands we run today will not open a window to show graphical results.

If you have ever opened the Windows Command Prompt or macOS Terminal, you have seen a CLI. If you have already taken The Carpentries’ courses on the UNIX Shell or Version Control, you have used the CLI on your local machine extensively. The only leap to be made here is to open a CLI on a remote machine, while taking some precautions so that other folks on the network can’t see (or change) the commands you’re running or the results the remote machine sends back. We will use the Secure SHell protocol (or SSH) to open an encrypted network connection between two machines, allowing you to send & receive text and data without having to worry about prying eyes.

SSH clients are usually command-line tools, where you provide the remote machine address as the only required argument. If your username on the remote system differs from what you use locally, you must provide that as well. If your SSH client has a graphical front-end, such as PuTTY or MobaXterm, you will set these arguments before clicking “connect.” From the terminal, you’ll write something like ssh userName@hostname, where the argument is just like an email address: the “@” symbol is used to separate the personal ID from the address of the remote machine.
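As a small illustration of how that argument is put together, the sketch below builds the connection target from its two parts. The values userName and hostname are the placeholders used in the text, not a real account or machine, so the command is printed rather than executed; the variable names are illustrative.

```bash
# Placeholders from the text; replace them with your own account and cluster address.
remote_user="userName"
remote_host="hostname"

# The target has the same shape as an email address: user, "@", machine.
target="${remote_user}@${remote_host}"

# Print the command instead of running it, since the placeholders are not a real machine.
echo "ssh ${target}"
```

If your username is the same on both machines, the part before the “@” can be left out and the machine address alone is enough.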

When logging in to a laptop, tablet, or other personal device, a username, password, or pattern is normally required to prevent unauthorized access. In these situations, the likelihood of somebody else intercepting your password is low, since logging your keystrokes requires a malicious exploit or physical access. For systems running an SSH server, anybody on the network can log in, or try to. Since usernames are often public or easy to guess, your password is often the weakest link in the security chain. Many clusters, therefore, forbid password-based login, requiring instead that you generate and configure a public-private key pair with a much stronger password. Even if your cluster does not require it, the next section will guide you through the use of SSH keys and an SSH agent to both strengthen your security and make it more convenient to log in to remote systems.

Exercise 1

Follow the instructions for connecting to the cluster. Once you are on Thorny, execute

$> lscpu

On your browser, go to https://ark.intel.com and enter the CPU model on the cluster’s head node.

Execute this command to know the amount of RAM on the machine.

$> lsmem

High-Performance Computing and Geopolitics

Western democracies are losing the global technological competition, including the race for scientific and research breakthroughs and the ability to retain global talent—crucial ingredients that underpin the development and control of the world’s most important technologies, including those that don’t yet exist.

The Australian Strategic Policy Institute (ASPI) released a report in 2023 studying the position of big powers in 44 critical areas of technology.

The report says that China’s global lead extends to 37 out of the 44 technologies. Those 44 technologies span fields including defense, space, robotics, energy, the environment, biotechnology, artificial intelligence (AI), advanced materials, and key quantum technology areas.

According to that report, the US still leads in High-Performance Computing. HPC is a critical enabler for innovation in other essential technologies and scientific discoveries: new materials, drugs, energy sources, and aerospace technologies all rely on simulations and modeling carried out with HPC clusters.

Key Points

Learn about CPUs, cores, and cache, and compare your machine with an HPC cluster.

Identify how an HPC cluster could benefit your research.

Command Line Interface

Overview

Teaching: 60 min

Exercises: 30 min

Topics

How do I use the Linux terminal?

Objectives

Commands to connect to the HPC

Navigating the filesystem

Creating, moving, and removing files/directories

Command Line Interface

At a high level, an HPC cluster is a computer that several users can use simultaneously. The users expect to run a variety of scientific codes. To do that, users store the data needed as input, and at the end of the calculations, the data generated as output is also stored or used to create plots and tables via postprocessing tools and scripts. In HPC, compute nodes can communicate with each other very efficiently. For some calculations that are too demanding for a single computer, several computers could work together on a single calculation, eventually sharing information.

Our daily interactions with regular computers like desktop computers and laptops occur via various devices, such as the keyboard and mouse, touch screen interfaces, or the microphone when using speech recognition systems. Today, we are very used to interacting with computers, tablets, and phones graphically; the GUI is widely used to interact with them. Everything takes place with graphics. You click on icons, touch buttons, or drag and resize photos with your fingers.

However, in HPC, we need an efficient and still very light way of communicating with the computer that acts as the front door of the cluster, the login node. We use the shell instead of a graphical user interface (GUI) for interacting with the HPC cluster.

In the GUI, we give instructions using a keyboard, mouse, or touchscreen. This way of interacting with a computer is intuitive and very easy to learn but scales very poorly for large streams of instructions, even if they are similar or identical. All that is very convenient, but that is not how we use HPC clusters.

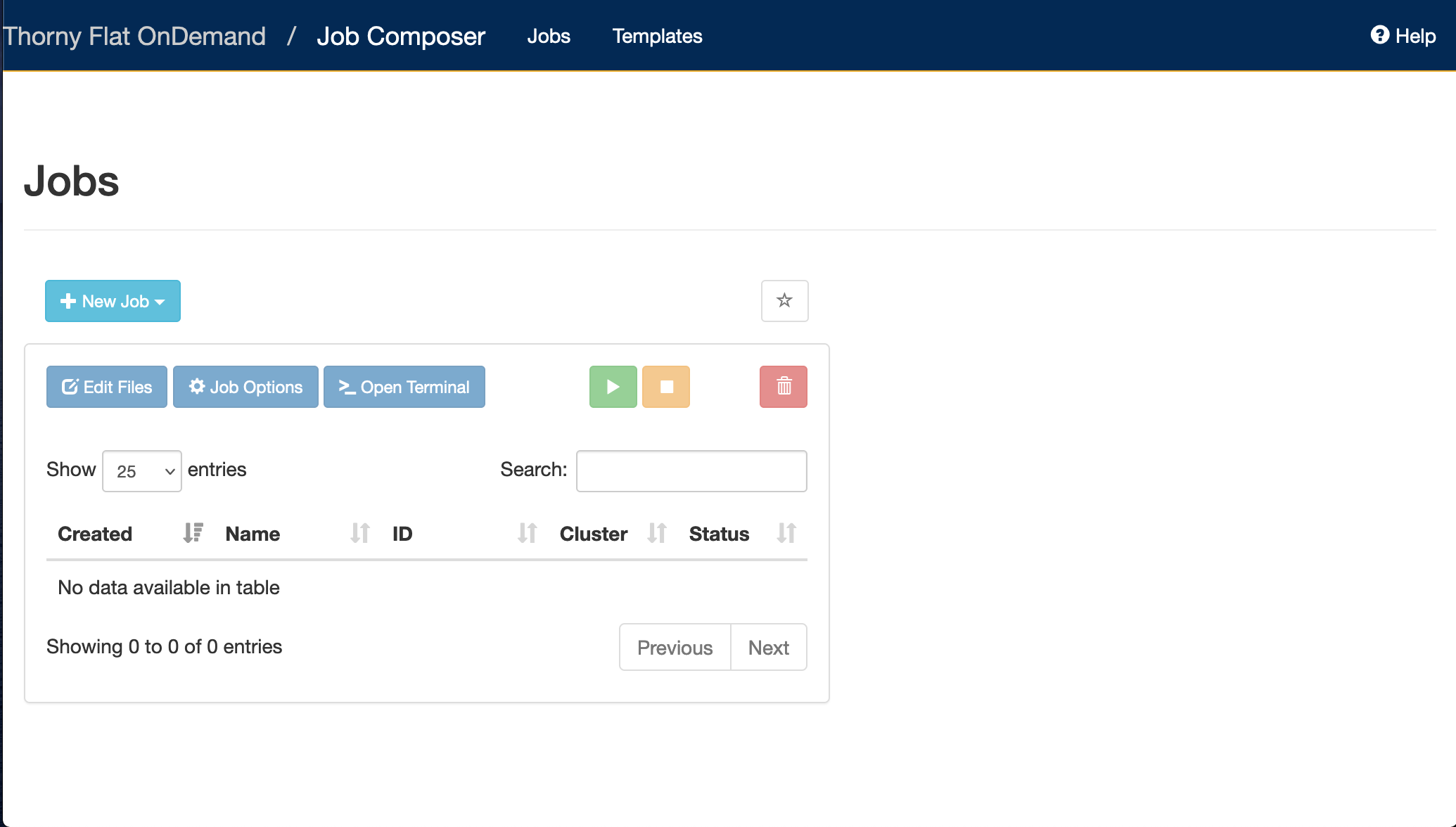

Later on in this lesson, we will show how to use Open OnDemand, a web service that allows you to run interactive executions on the cluster using a web interface and your browser. For most of this lesson, we will use the Command Line Interface, and you need to familiarize yourself with it.

For example, suppose you need to copy the third line of each of a thousand text files stored in a thousand different folders and paste them into a single file, line by line. With the traditional GUI approach of mouse clicks, this would take several hours.

This is where we take advantage of the shell, a command-line interface (CLI), to do such repetitive tasks with less effort. It can take a single instruction and repeat it as is or with some modification as many times as we want. The task in the example above can be accomplished in a single line of a few instructions.
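One possible way to write that task is sketched below, assuming each text file ends in .txt and sits exactly one folder below a common root. The function name collect_third_lines, the folder names, and the file names are all illustrative.

```bash
# collect_third_lines ROOT OUTPUT  (illustrative name)
# Writes line 3 of every .txt file found one folder below ROOT into OUTPUT.
collect_third_lines() {
    for file in "$1"/*/*.txt; do
        [ -f "$file" ] || continue      # skip if the pattern matched nothing
        sed -n '3p' "$file"             # print only the third line
    done > "$2"
}

# Small demonstration with two folders instead of a thousand.
demo=$(mktemp -d)
mkdir "$demo/run1" "$demo/run2"
printf 'a\nb\nthird of run1\n' > "$demo/run1/data.txt"
printf 'x\ny\nthird of run2\n' > "$demo/run2/data.txt"
collect_third_lines "$demo" "$demo/summary.txt"
cat "$demo/summary.txt"
rm -r "$demo"
```

Typed directly at the prompt, the same loop fits on one line: for f in */*.txt; do sed -n '3p' "$f"; done > summary.txt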

The heart of a command-line interface is a read-evaluate-print loop (REPL), so called because when you type a command and press Return (also known as Enter), the shell reads your command, evaluates (or “executes”) it, prints the output of your command, then loops back and waits for you to enter another command. The REPL is essential in how we interact with HPC clusters.
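To make the loop concrete, here is a toy version written in the shell itself. It is only a sketch of the idea, not how Bash is implemented; mini_repl is an illustrative name, and the commands are fed through a pipe instead of being typed at a keyboard.

```bash
# mini_repl (illustrative name): a toy read-evaluate-print loop.
# It reads commands from standard input until "exit" or end of input.
mini_repl() {
    while IFS= read -r line; do         # read one command
        if [ "$line" = "exit" ]; then
            break
        fi
        eval "$line"                    # evaluate it; the command prints its own output
    done                                # loop back for the next command
}

# Feed three lines to the loop; only the first one runs before "exit".
printf 'echo hello\nexit\necho never reached\n' | mini_repl
```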

Even if you are using a GUI frontend such as Jupyter or RStudio, REPL is there for us to instruct computers on what to do next.

The Shell

The Shell is a program that runs other programs rather than doing calculations itself. Those programs can be as complicated as climate modeling software and as simple as a program that creates a new directory. The simple programs which are used to perform stand-alone tasks are usually referred to as commands. The most popular Unix shell is Bash (the Bourne Again SHell — so-called because it’s derived from a shell written by Stephen Bourne). Bash is the default shell on most modern implementations of Unix and in most packages that provide Unix-like tools for Windows.

When the shell is first opened, you are presented with a prompt, indicating that the shell is waiting for input.

$

The shell typically uses $ as the prompt but may use a different symbol like $>.

The prompt

When typing commands from these lessons or other sources, do not type the prompt, only the commands that follow it.

$> ls -al

Why use the Command Line Interface?

Before the usage of Command Line Interface (CLI), computer interaction took place with perforated cards or even switching cables on a big console. Despite all the years of new technology and innovation, the CLI remains one of the most powerful and flexible tools for interacting with computers.

Because it is radically different from a GUI, the CLI can take some effort and time to learn. A GUI presents you with choices to click on. With a CLI, the choices are combinations of commands and parameters, more akin to words in a language than buttons on a screen. Because the options are not presented to you, some vocabulary is necessary in this new “language.” But a small number of commands gets you a long way, and we’ll cover those essential commands below.

Flexibility and automation

The grammar of a shell allows you to combine existing tools into powerful pipelines and handle large volumes of data automatically. Sequences of commands can be written into a script, improving the reproducibility of workflows and allowing you to repeat them easily.
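As a small illustration of combining tools, the sketch below chains five standard commands with the pipe symbol to find the most frequent item in a list. The function name most_common and the sample input are illustrative.

```bash
# most_common (illustrative name)
# Reads one item per line from standard input and prints the most frequent one.
most_common() {
    # sort groups equal lines, uniq -c counts each group, sort -rn puts the
    # largest count first, head keeps that line, awk drops the count.
    sort | uniq -c | sort -rn | head -n 1 | awk '{ print $2 }'
}

printf 'cpu\ngpu\ncpu\nram\ncpu\ngpu\n' | most_common   # prints "cpu"
```

Saving lines like these in a file turns them into a script that can be rerun on new data without retyping anything.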

In addition, the command line is often the easiest way to interact with remote machines and supercomputers. Familiarity with the shell is essential to run a variety of specialized tools and resources including high-performance computing systems. As clusters and cloud computing systems become more popular for scientific data crunching, being able to interact with the shell is becoming a necessary skill. We can build on the command-line skills covered here to tackle a wide range of scientific questions and computational challenges.

Starting with the shell

If you still need to download the hands-on materials, this is the perfect opportunity to do so:

$ git clone https://github.com/WVUHPC/workshops_hands-on.git

Let’s look at what is inside the workshops_hands-on folder and explore it further. First, instead of clicking on the folder name to open it and look at its contents, we have to change the folder we are in. When working with any programming tools, folders are called directories. We will be using folder and directory interchangeably moving forward.

To look inside the workshops_hands-on directory, we need to change which directory we are in. To do this, we can use the cd command, which stands for “change directory”.

$ cd workshops_hands-on

Did you notice a change in your command prompt? The “~” symbol from before should have been replaced by the string ~/workshops_hands-on$ . This means our cd command ran successfully, and we are now in the new directory. Let’s see what is in here by listing the contents:

$ ls

You should see:

Introduction_HPC LICENSE Parallel_Computing README.md Scientific_Programming Spark

Arguments

Six items are listed when you run ls, but are they files or directories?

To get more information, we can modify the default behavior of ls with one or more “arguments”.

$ ls -F

Introduction_HPC/ LICENSE Parallel_Computing/ README.md Scientific_Programming/ Spark/

Anything with a “/” after its name is a directory. Things with an asterisk “*” after them are executable programs. If there are no “decorations” after the name, it’s a regular file (often, but not necessarily, a text file).

You can also use the argument -l to show the directory contents in a long-listing format that provides a lot more information:

$ ls -l

total 64

drwxr-xr-x 13 gufranco its-rc-thorny 4096 Jul 23 22:50 Introduction_HPC

-rw-r--r-- 1 gufranco its-rc-thorny 35149 Jul 23 22:50 LICENSE

drwxr-xr-x 6 gufranco its-rc-thorny 4096 Jul 23 22:50 Parallel_Computing

-rw-r--r-- 1 gufranco its-rc-thorny 715 Jul 23 22:50 README.md

drwxr-xr-x 9 gufranco its-rc-thorny 4096 Jul 23 22:50 Scientific_Programming

drwxr-xr-x 2 gufranco its-rc-thorny 4096 Jul 23 22:50 Spark

Each line of output represents a file or a directory. The directory lines start with d. If you want to combine the two arguments -l and -F, you can do so by saying the following:

ls -lF

Do you see the modification in the output?

Explanation

Notice that the listed directories now have / at the end of their names.
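To see all three kinds of “decoration” in one place, here is a minimal sketch that builds a throwaway directory and lists it with ls -F. The names results, notes.txt, and run.sh are made up for illustration, and mktemp and chmod are standard commands that this lesson has not introduced.

```shell
demo=$(mktemp -d)            # scratch directory, so nothing real is touched
mkdir "$demo/results"        # a directory: listed with a trailing /
touch "$demo/notes.txt"      # a regular file: listed with no decoration
touch "$demo/run.sh"
chmod +x "$demo/run.sh"      # an executable file: listed with a trailing *
listing=$(ls -F "$demo")
echo "$listing"
rm -r "$demo"                # clean up the scratch directory
```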

Tip - All commands are essentially programs that are able to perform specific, commonly-used tasks.

Most commands will take additional arguments controlling their behavior, and some will take a file or directory name as input. How do we know what the available arguments that go with a particular command are? Most commonly used shell commands have a manual available in the shell. You can access the manual using the man command. Let’s try this command with ls:

$ man ls

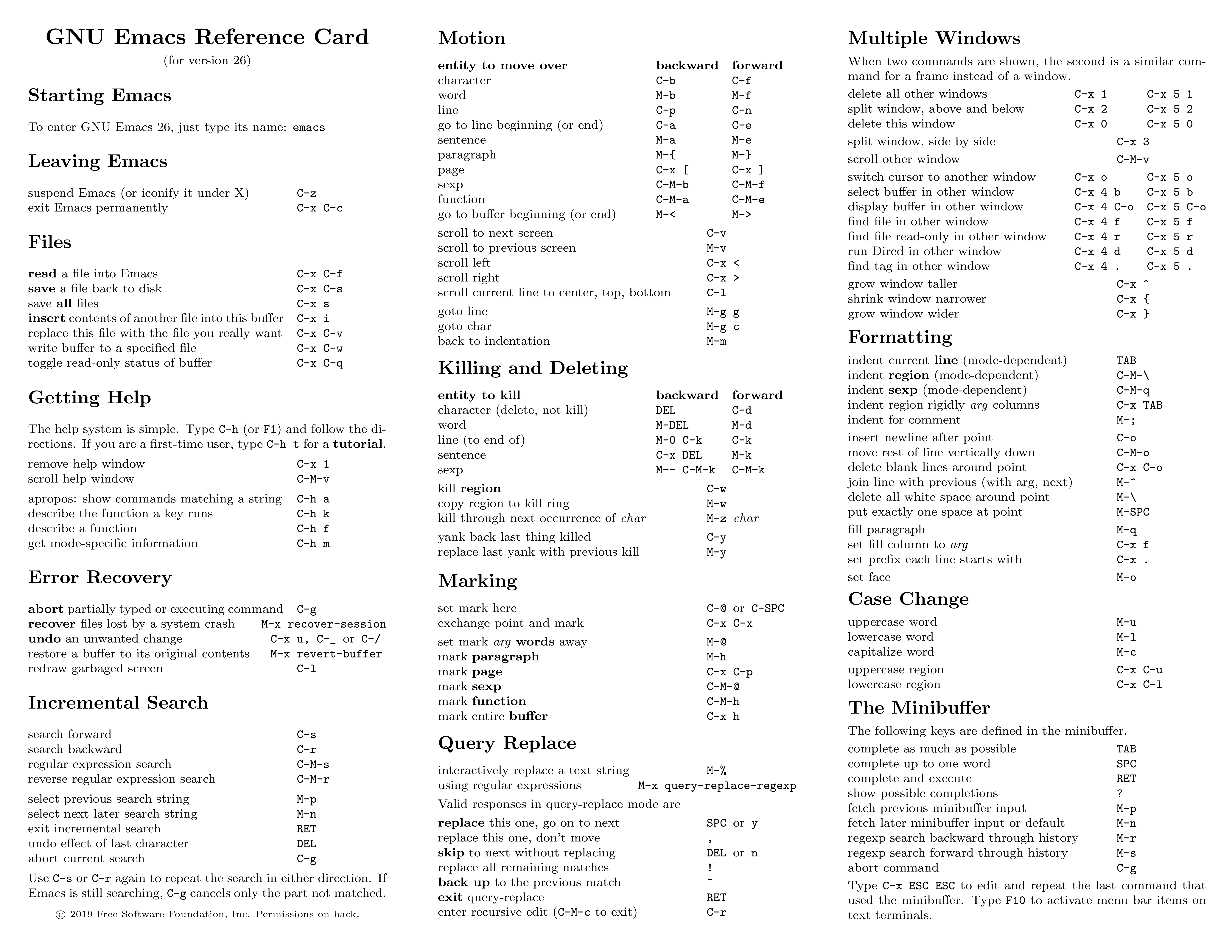

This will open the manual page for ls, and you will lose the command prompt. It will bring you to a so-called “buffer” page, a page you can navigate with your mouse, or if you want to use your keyboard, we have listed some basic keystrokes:

- ‘spacebar’ to go forward

- ‘b’ to go backward

- Up or down arrows to go backward or forward one line, respectively

To get out of the man “buffer” page and to be able to type commands again on the command prompt, press the q key!

Exercise

- Open up the manual page for the find command. Skim through some of the information.

- Would you be able to learn this much information about many commands by heart?

- Do you think this format of information display is useful for you?

- Quit the man buffer and return to your command prompt.

Tip - Shell commands can get extremely complicated. No one can learn all of these arguments, of course. So you will likely refer to the manual page frequently.

Tip - If the manual page within the Terminal is hard to read and traverse, the manual exists online, too. Use your web-searching powers to get it! In addition to the arguments, you can also find good examples online; Google is your friend.

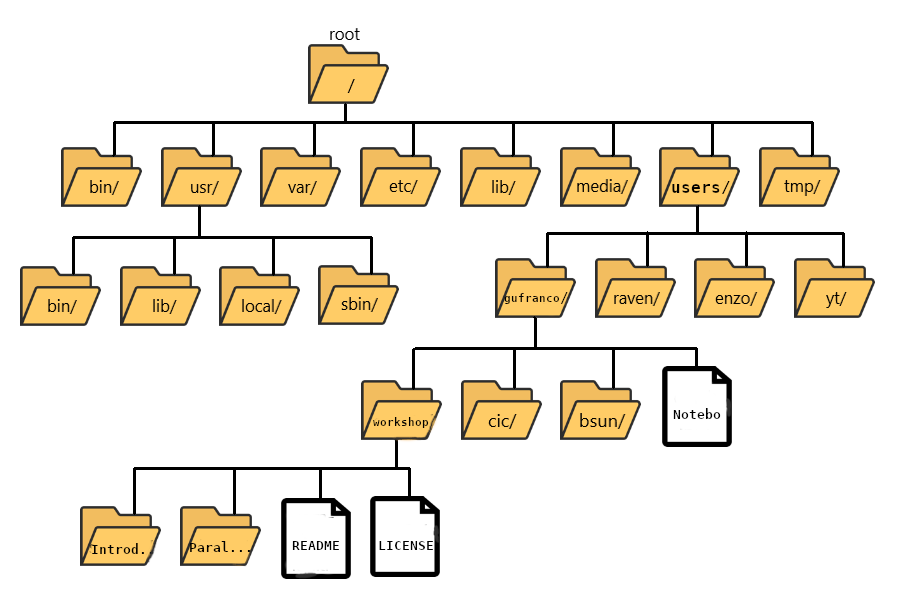

The Unix directory file structure (a.k.a. where am I?)

Let’s practice moving around a bit. Let’s go into the Introduction_HPC directory and see what is there.

$ cd Introduction_HPC

$ ls -l

Great, we have traversed some sub-directories, but where are we in the context of our pre-designated “home” directory containing the workshops_hands-on directory?!

The “root” directory!

Like on any computer you have used before, the file structure within a Unix/Linux system is hierarchical, like an upside-down tree with the “/” directory, called “root” as the starting point of this tree-like structure:

Tip - Yes, the root folder’s actual name is just / (a forward slash).

That / or root is the ‘top’ level.

When you log in to a remote computer, you land on one of the branches of that tree, i.e., your pre-designated “home” directory that usually has your login name as its name (e.g. /users/gufranco).

Tip - On macOS, which is a UNIX-based OS, the root level is also “/”.

Tip - On a Windows OS, the root is drive-specific; “C:\” is considered the default root, but it changes to “D:\” if you are on another drive.

Paths

Now let’s learn more about the “addresses” of directories, called “paths”, and move around the file system.

Let’s check to see what directory we are in. The command prompt tells us which directory we are in, but it doesn’t give information about where the Introduction_HPC directory is with respect to our “home” directory or the / directory.

The command to check our current location is pwd. This command does not take any arguments, and it returns the path or address of your present working directory (the folder you are in currently).

$ pwd

In the output here, each folder is separated from its “parent” or “child” folder by a “/”, and the output starts with the root / directory. So, you are now able to determine the location of Introduction_HPC directory relative to the root directory!

But which is your pre-designated home folder? No matter where you have navigated to in the file system, just typing in cd will bring you to your home directory.

$ cd

What is your present working directory now?

$ pwd

This should now display a shorter string of directories starting with root. This is the full address to your home directory, also referred to as “full path”. The “full” here refers to the fact that the path starts with the root, which means you know which branch of the tree you are on in reference to the root.

Take a look at your command prompt now. Does it show you the name of this directory (your username?)?

No, it doesn’t. Instead of the directory name, it shows you a ~.

Why is this so?

This is because ~ = full path to the home directory for the user.

Can we just type ~ instead of /users/username?

Yes, we can!

Using paths with commands

You can do much more with the idea of stringing together parent/child directories. Let’s say we want to look at the contents of the Introduction_HPC folder but do it from our current directory (the home directory). We can use the list command and follow it up with the path to the folder we want to list!

$ cd

$ ls -l ~/workshops_hands-on/Introduction_HPC

Now, what if we wanted to change directories from ~ (home) to Introduction_HPC in a single step?

$ cd ~/workshops_hands-on/Introduction_HPC

Done! You have moved two levels of directories in one command.

What if we want to move back up and out of the Introduction_HPC directory? Can we just type cd workshops_hands-on? Try it and see what happens.

Unfortunately, that won’t work because when you say cd workshops_hands-on, the shell looks for a folder called workshops_hands-on within your current directory, i.e. Introduction_HPC.

Can you think of an alternative?

You can use the full path to workshops_hands-on!

$ cd ~/workshops_hands-on

Tip - What if we want to navigate to the previous folder but can’t quite remember the full or relative path, or want to get there quickly without typing a lot? In this case, we can use cd -. When - is used in this context, it refers to a special variable called $OLDPWD that is set for us without our having to assign it anything. We’ll learn more about variables in a future lesson, but for now you can see how this command works. Try typing:

$ cd -

This command will move you to the last folder you were in before your current location, then display where you now are! If you followed the steps up until this point, it will have moved you to ~/workshops_hands-on/Introduction_HPC. You can use this command again to get back to where you were before (~/workshops_hands-on) to move on to the Exercises.
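The back-and-forth behavior of cd - can be tried safely in a scratch directory. This is only a sketch: the directory names project and data are made up, and mktemp is a standard command not covered in this lesson.

```shell
demo=$(mktemp -d)                      # scratch area for the experiment
mkdir "$demo/project" "$demo/project/data"

cd "$demo/project/data"
cd "$demo/project"
echo "$OLDPWD"                         # the directory we just left (.../project/data)

cd - > /dev/null                       # jump back; the printed location is silenced here
back=$PWD
cd - > /dev/null                       # and jump forward again
again=$PWD
echo "$back"
echo "$again"
```

Every cd updates $OLDPWD, which is why repeating cd - toggles between the same two directories.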

Exercises

- First, move to your home directory.

- Then, list the contents of the Parallel_Computing directory within the workshops_hands-on directory.

Tab completion

Typing out full directory names can be time-consuming and error-prone. One way to avoid that is to use tab completion. The tab key is located on the left side of your keyboard, right above the caps lock key. When you start typing out the first few characters of a directory name and then hit the tab key, the shell will try to fill in the rest of the directory name.

For example, first type cd to get back to your home directory, then type cd work, followed by pressing the tab key:

$ cd

$ cd work<tab>

The shell will fill in the rest of the directory name for workshops_hands-on.

Now, let’s go into Introduction_HPC, then type ls 1, followed by pressing the tab key once:

$ cd Introduction_HPC/

$ ls 1<tab>

Nothing happens!!

The reason is that there are multiple files in the Introduction_HPC directory that start with 1, so the shell does not know which one to fill in. When you hit tab a second time, the shell will list all the possible choices.

$ ls 1<tab><tab>

Now you can pick the one you are interested in from the list, type enough of its name to make it unique, and hit tab again to fill in the complete name of the file.

$ ls 15._Shell<tab>

NOTE: Tab completion can also fill in the names of commands. For example, enter e<tab><tab>. You will see the name of every command that starts with an e. One of those is echo. If you enter ech<tab>, you will see that tab completion works.

Tab completion is your friend! It helps prevent spelling mistakes and speeds up the process of typing in the full command. We encourage you to use this when working on the command line.

Relative paths

We have talked about full paths so far, but there is a way to specify paths to folders and files without having to worry about the root directory. You used this before when we were learning about the cd command.

Let’s change directories back to our home directory and once more change directories from ~ (home) to Introduction_HPC in a single step. (Feel free to use your tab-completion to complete your path!)

$ cd

$ cd workshops_hands-on/Introduction_HPC

This time we are not using the ~/ before workshops_hands-on. In this case, we are using a relative path, relative to our current location - wherein we know that workshops_hands-on is a child folder in our home folder, and the Introduction_HPC folder is within workshops_hands-on.

Previously, we had used the following:

$ cd ~/workshops_hands-on/Introduction_HPC

There is also a handy shortcut for the relative path to a parent directory: two periods (..). Let’s say we wanted to move from the Introduction_HPC folder to its parent folder.

cd ..

You should now be in the workshops_hands-on directory (check the command prompt or run pwd).

You will learn more about the .. shortcut later. Can you think of an example when this shortcut to the parent directory won’t work?

Answer

When you are at the root directory, since there is no parent to the root directory! (Running cd .. there simply leaves you in /.)

When using relative paths, you might need to check what the branches are downstream of the folder you are in. There is a really handy command (tree) that can help you see the structure of any directory.

$ tree

If you are aware of the directory structure, you can string together a list of directories as long as you like using either relative or full paths.

Synopsis of Full versus Relative paths

A full path always starts with a /; a relative path does not.

A relative path is like getting directions from someone on the street. They tell you to “go right at the Stop sign, and then turn left on Main Street”. That works great if you’re standing there together, but not so well if you’re trying to tell someone how to get there from another country. A full path is like GPS coordinates. It tells you exactly where something is, no matter where you are right now.

You can usually use either a full path or a relative path depending on what is most convenient. If we are in the home directory, it is more convenient to just enter the relative path since it involves less typing.

Over time, it will become easier for you to keep a mental note of the structure of the directories that you are using and how to quickly navigate among them.
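The difference between the two kinds of path can be checked with a short experiment. The sketch below uses a scratch directory and made-up folder names (workshop, lesson); it reaches the same folder once with a relative path and once with a full path, then steps up with the .. shortcut.

```shell
demo=$(mktemp -d)                      # scratch directory; mktemp returns a full path
mkdir "$demo/workshop" "$demo/workshop/lesson"

cd "$demo"
cd workshop/lesson                     # relative path: resolved from the current directory
via_relative=$PWD

cd /
cd "$demo/workshop/lesson"             # full path: works no matter where we start
via_full=$PWD

cd ..                                  # the parent of lesson
parent=$PWD
echo "$via_relative"
echo "$via_full"
echo "$parent"
```

The first two lines printed are identical, which shows that both paths name the same location; only the starting point needed to interpret them differs.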

Copying, creating, moving, and removing data

Now we can move around within the directory structure using the command line. But what if we want to do things like copy files, move them from one directory to another, or rename them?

Let’s move into the Introduction_HPC directory, which contains some more folders and files:

cd ~/workshops_hands-on/Introduction_HPC

cd 2._Command_Line_Interface

Copying

Let’s use the copy (cp) command to make a copy of one of the files in this folder, OUTCAR, and call the copied file OUTCAR_BKP.

The copy command has the following syntax:

cp path/to/item-being-copied path/to/new-copied-item

In this case the files are in our current directory, so we just have to specify the name of the file being copied, followed by whatever we want to call the newly copied file.

$ cp OUTCAR OUTCAR_BKP

$ ls -l

The copy command can also be used for copying over whole directories, but the -r argument has to be added after the cp command. The -r stands for “recursively copy everything from the directory and its sub-directories”. We used it earlier when we copied over the workshops_hands-on directory to our home directories.
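A recursive copy can be tried on a small made-up directory tree. In this sketch the names inputs, nested, and data.txt are placeholders, and the whole experiment lives in a scratch directory created with mktemp.

```shell
demo=$(mktemp -d)
mkdir "$demo/inputs" "$demo/inputs/nested"
echo "sample" > "$demo/inputs/nested/data.txt"

# Without -r, cp refuses to copy a directory and reports an error.
cp "$demo/inputs" "$demo/plain_copy" 2>/dev/null || echo "cp without -r did not copy the directory"

# With -r, the directory and everything below it is copied.
cp -r "$demo/inputs" "$demo/inputs_copy"
copied=$(cat "$demo/inputs_copy/nested/data.txt")
echo "$copied"
```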

Creating

Next, let’s create a directory called ABINIT and we can move the copy of the input files into that directory.

The mkdir command is used to make a directory, syntax: mkdir name-of-folder-to-be-created.

$ mkdir ABINIT

Tip - File/directory/program names with spaces in them do not work well in Unix. Use characters like hyphens or underscores instead. Using underscores instead of spaces is called “snake_case”. Alternatively, some people choose to skip spaces and just capitalize the first letter of each new word (e.g. MyNewFile). This alternative technique is called “CamelCase”.

Moving

We can now move our copied input files into the new directory. We can move files around using the move command, mv, syntax:

mv path/to/item-being-moved path/to/destination

In this case, we can use relative paths and just type the name of the file and folder.

$ mv 14si.pspnc INCAR t17.files t17.in ABINIT/

Let’s check if the move command worked like we wanted:

$ ls -l ABINIT

Let us run ABINIT. This is a quick execution, and you have not yet learned how to submit jobs, so as an exception we will execute it on the login node:

cd ABINIT

$ module load atomistic/abinit/9.8.4_intel22_impi22

$ mpirun -np 4 abinit < t17.files

Renaming

The mv command has a second function: it is also what you would use to rename files. The syntax is identical to when we used mv for moving, but this time instead of giving a directory as its destination, we just give a new name as its destination.

The file t17.out can be renamed to keep it in case ABINIT is run again with some change in the input. We want to rename that file:

$ mv t17.out t17.backup.out

$ ls

Tip - You can use mv to move a file and rename it simultaneously!

Important notes about mv:

- When using mv, the shell will not ask if you are sure that you want to “replace existing file” or similar unless you use the -i option.

- Once replaced, it is not possible to get the replaced file back!
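The silent overwrite is easy to demonstrate on throwaway files. This sketch uses a scratch directory and two made-up file names; when run as a script like this, mv replaces the existing target without asking.

```shell
demo=$(mktemp -d)
echo "old results" > "$demo/results.txt"
echo "new results" > "$demo/latest.txt"

mv "$demo/latest.txt" "$demo/results.txt"   # results.txt already exists and is replaced
after=$(cat "$demo/results.txt")
echo "$after"                               # the old contents are gone
```

Running the same command as mv -i would instead prompt before replacing results.txt.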

Removing

It turns out we did not need a backup of our output after all. In the interest of saving space on the cluster, we want to delete the file t17.backup.out.

$ rm t17.backup.out

Important notes about rm

- rm permanently removes/deletes the file/folder.

- There is no concept of “Trash” or “Recycle Bin” on the command line. When you use rm to remove/delete, they’re really gone.

- Be careful with this command!

- You can use the -i argument if you want it to ask before removing: rm -i file-name.

Let’s delete the ABINIT folder too. First, we’ll have to navigate our way to the parent directory (we can’t delete the folder we are currently in/using).

$ cd ..

$ rm ABINIT

Did that work? Did you get an error?

Explanation

By default, rm will NOT delete directories, but you can use the -r flag if you are sure that you want to delete the directories and everything within them. To be safe, let's use it with the -i flag.

$ rm -ri ABINIT

- -r: recursive, commonly used as an option when working with directories, e.g. with cp.

- -i: prompt before every removal.
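The behavior described above can be verified on a throwaway folder. In this sketch old_run and output.log are made-up names inside a scratch directory; the -i flag is left out only so that the example runs without waiting for keyboard input.

```shell
demo=$(mktemp -d)
mkdir "$demo/old_run"
touch "$demo/old_run/output.log"

# Plain rm refuses to remove a directory.
if rm "$demo/old_run" 2>/dev/null; then plain_rm=succeeded; else plain_rm=refused; fi

# rm -r removes the directory and everything inside it.
rm -r "$demo/old_run"
if [ -e "$demo/old_run" ]; then state=present; else state=gone; fi
echo "plain rm: $plain_rm, after rm -r: $state"
```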

Exercise

- Create a new folder in workshops_hands-on called abinit_test

- Copy over the abinit inputs from 2._Command_Line_Interface to the ~/workshops_hands-on/Introduction_HPC/2._Command_Line_Interface/abinit_test folder

- Rename the abinit_test folder and call it exercise1

Exiting from the cluster

To close the interactive session on the cluster and disconnect from the cluster, the command is exit. If you are inside an interactive session, you will have to run exit twice: once to leave the session and once more to disconnect from the cluster.

00:11:05-gufranco@trcis001:~$ exit

logout

Connection to trcis001 closed.

guilleaf@MacBook-Pro-15in-2015 ~ %

10 Unix/Linux commands to learn and use

The echo and cat commands

The echo command is very basic; it prints whatever you give it back to the terminal, much like an echo. Execute the command below.

$ echo "I am learning UNIX Commands"

I am learning UNIX Commands

This may not seem that useful right now. However, echo will also print the contents of a variable to the terminal. There are some default variables set for each user on the HPCs: $HOME is the path to the user’s “home” directory, and $SCRATCH is similarly the path to the user’s “scratch” directory. More on what those directories are for later, but for now, we can print them to the terminal using the echo command.

$ echo $HOME

/users/<username>

$ echo $SCRATCH

/scratch/<username>

In addition, the shell can do basic arithmetic operations. Execute this command:

$ echo $((23+45*2))

113

Notice that, as is customary in mathematics, products take precedence over addition. This is called the PEMDAS order of operations, i.e. "Parentheses, Exponents, Multiplication and Division, and Addition and Subtraction". Check your understanding of the PEMDAS rule with this command:

$ echo $(((1+2**3*(4+5)-7)/2+9))

42

Notice that exponentiation is expressed with the ** operator. The use of echo is important: if you execute the command without echo, the shell will do the operation and then try to execute a command called 42, which does not exist on the system. Try it yourself:

$ $(((1+2**3*(4+5)-7)/2+9))

-bash: 42: command not found
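The result of an arithmetic expansion can also be stored in a variable instead of being printed directly. The following sketch uses made-up variable names and also shows a detail worth knowing: the shell works with integers only, so division drops the fractional part.

```shell
total=$((23 + 45 * 2))     # multiplication is done first: 23 + 90
half=$((7 / 2))            # integer division: the fractional part is dropped
remainder=$((7 % 2))       # % gives the remainder of the division
echo "$total $half $remainder"
```

This prints 113 3 1. For calculations that need decimals, a different tool is required, since $(( )) cannot represent them.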

As you have seen before, when you execute a command on the terminal, in most cases you see the output printed on the screen. The next thing to learn is how to redirect the output of a command into a file. This will be very important later, when you submit jobs and need to control where and how the output is produced. Execute the following command:

$ echo "I am learning UNIX Commands." > report.log

The character > redirects the output from echo into a file called report.log. No output is printed on the screen. If the file does not exist, it will be created. If the file already exists, its previous contents are erased and only the new contents are stored. In fact, > can be used to redirect the output of any command to a file!
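The overwriting behavior of > is worth seeing once on a throwaway file. The sketch below also uses >>, a related operator not covered in the text above, which appends to the end of a file instead of replacing its contents. The file lives in a scratch directory, and grep -c is used only to count the lines.

```shell
demo=$(mktemp -d)
echo "first line"  > "$demo/report.log"
echo "second line" > "$demo/report.log"     # > replaces the previous contents
overwritten=$(cat "$demo/report.log")

echo "third line" >> "$demo/report.log"     # >> appends instead of replacing
lines=$(grep -c "line" "$demo/report.log")  # count the lines now in the file
echo "$overwritten"
echo "$lines"
```

After the second echo only "second line" remains, and after the append the file holds two lines.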

To check that the file actually contains the line produced by echo, execute:

$ cat report.log

I am learning UNIX Commands.

The cat (concatenate) command displays the contents of one or several files. In the case of multiple files, the files are printed in the order they are listed on the command line, concatenating the output, hence the name of the command.
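Combining cat with the > redirection gives a simple way to join files. This is a sketch with made-up file names in a scratch directory; head and tail are used only to inspect the first and last line of the result.

```shell
demo=$(mktemp -d)
echo "alpha" > "$demo/part1.txt"
echo "beta"  > "$demo/part2.txt"

# The files are written out in the order given on the command line.
cat "$demo/part1.txt" "$demo/part2.txt" > "$demo/combined.txt"
first=$(head -n 1 "$demo/combined.txt")
last=$(tail -n 1 "$demo/combined.txt")
echo "$first then $last"
```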

In fact, there are hundreds of commands, most of them with a variety of options that change the behavior of the original command. You can feel bewildered at first by a large number of existing commands, but most of the time, you will be using a very small number of them. Learning those will speed up your learning curve.

Folder commands

As mentioned, UNIX organizes data in storage devices as a tree. The commands pwd, cd and mkdir will allow you to know where you are, move your location on the tree, and create new folders. Later, we will learn how to move folders from one location on the tree to another.

The first command is pwd. Just execute the command on the terminal:

$ pwd

/users/<username>

It is always very important to know where in the tree you are. Doing research usually involves dealing with a large amount of data, and exploring several parameters or physical conditions. Therefore, organizing the filesystem is key.

When you log into a cluster, by default, you are located in your $HOME folder. That is why the pwd command should return that location in the first instance.

The next command, cd, is used to change the directory. A directory is another name for a folder and is widely used; in UNIX, the terms are interchangeable. Other desktop operating systems like Windows and macOS have the concept of smart folders or virtual folders, where the folder that you see on screen has no correlation with a directory in the filesystem. In those cases, the distinction is relevant.

There is another important folder defined on our clusters. It is called the scratch folder, and each user has their own. The location of the folder is stored in the variable $SCRATCH. Notice that this is an internal convention and is not necessarily followed on other HPC clusters.

Use the next command to go to that folder:

$ cd $SCRATCH

$ pwd

/scratch/<username>

Notice that the location is different now. If you are using this account for the first time, you will not have files in this folder. It is time to learn another command, one that lists the contents of a folder. Execute:

$ ls

Assuming that you are using your HPC account for the first time, you will not have anything in your $SCRATCH folder and should therefore see no output from ls. This is a good opportunity to start organizing your filesystem by creating one folder and moving into it. Execute:

$ mkdir test_folder

$ cd test_folder

mkdir allows you to create folders in places where you are authorized to do so, such as your $HOME and $SCRATCH folders. Try this command:

$ mkdir /test_folder

mkdir: cannot create directory `/test_folder': Permission denied

There is an important difference between test_folder and /test_folder. The former is a location in your current directory, and the latter is a location starting at the root directory /. A normal user has no rights to create folders in that directory, so mkdir will fail, and an error message will be shown on your screen.

Notice that we named it test_folder instead of test folder. In UNIX, there is no restriction against file or directory names with spaces, but using them can become a nuisance on the command line. If you want to create a folder with spaces in its name from the command line, here are the options:

$ mkdir "test folder with spaces"

$ mkdir another\ folder\ with\ spaces

In any case, you have to type extra characters to prevent the command line application from considering those spaces as separators for several arguments in your command. Try executing the following:

$ mkdir another folder with spaces

$ ls

another folder with spaces folder spaces test_folder test folder with spaces with

It may not be clear what happened here. The -l option for ls presents the contents of a directory in long format, one entry per line:

$ ls -l

total 0

drwxr-xr-x 2 myname mygroup 512 Nov 2 15:44 another

drwxr-xr-x 2 myname mygroup 512 Nov 2 15:45 another folder with spaces

drwxr-xr-x 2 myname mygroup 512 Nov 2 15:44 folder

drwxr-xr-x 2 myname mygroup 512 Nov 2 15:44 spaces

drwxr-xr-x 2 myname mygroup 512 Nov 2 15:45 test_folder

drwxr-xr-x 2 myname mygroup 512 Nov 2 15:45 test folder with spaces

drwxr-xr-x 2 myname mygroup 512 Nov 2 15:44 with

It should now be clear what happens when the spaces are not enclosed in quotes, as in "test folder with spaces", or escaped, as in another\ folder\ with\ spaces: each word becomes a separate folder. This is the perfect opportunity to learn how to delete empty folders. Execute:

$ rmdir another

$ rmdir folder spaces with

You can delete one or several folders, but all those folders must be empty. If those folders contain files or more folders, the command will fail and an error message will be displayed.
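Returning to the issue of spaces that produced those extra folders: a quick way to see how the shell splits a command into arguments is to count them. The sketch below defines a tiny helper function, count_args, which is a made-up name for illustration and not a standard command; inside a function, $# holds the number of arguments received.

```shell
# Hypothetical helper: prints how many arguments it was given.
count_args() {
    echo "$#"
}

unquoted=$(count_args test folder with spaces)       # four separate words
quoted=$(count_args "test folder with spaces")       # one argument, thanks to the quotes
escaped=$(count_args test\ folder\ with\ spaces)     # one argument, thanks to the backslashes
echo "$unquoted $quoted $escaped"
```

This prints 4 1 1, which matches what mkdir did: four folders without quoting, one folder with quotes or escapes.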

After deleting those folders created by mistake, let's check the contents of the current directory. The command ls -1 will list the contents of a directory one per line, something very convenient for future scripting:

$ ls -1

another folder with spaces

test_folder

test folder with spaces

Commands for copy and move

The next two commands are cp and mv. They are used to copy or move files or folders from one location to another. In their simplest usage, those two commands take two arguments: the first argument is the source and the last one is the destination. In the case of more than two arguments, the destination must be a directory. The effect will be to copy or move all the source items into the folder indicated as the destination.

Before doing a few examples with cp and mv, let's use a very handy command to create files. The command touch is used to update the access and modification times of a file or folder to the current time. If there is no such file, the command will create a new empty file. We will use that feature to create some empty files for the purpose of demonstrating how to use cp and mv.
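Both behaviors of touch can be checked in a scratch directory. In this sketch the file names are made up, and the test [ -s file ] is a standard shell check that is true only when the file exists and is not empty.

```shell
demo=$(mktemp -d)

touch "$demo/new_file"                  # did not exist before: created empty
if [ -s "$demo/new_file" ]; then new_state="has content"; else new_state="empty"; fi

echo "keep me" > "$demo/existing_file"
touch "$demo/existing_file"             # already exists: only its timestamps change
kept=$(cat "$demo/existing_file")
echo "$new_state / $kept"
```

The contents of an existing file are never modified by touch, so it is safe to run on files you care about.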

Let’s create a few files and directories:

$ mkdir even odd

$ touch f01 f02 f03 f05 f07 f11

Now, let's copy some of those existing files to complete all the numbers up to f11:

$ cp f03 f04

$ cp f05 f06

$ cp f07 f08

$ cp f07 f09

$ cp f07 f10

This is a good opportunity to present the * wildcard and use it to replace an arbitrary sequence of characters. For instance, execute this command to list all the files created above:

$ ls f*

f01 f02 f03 f04 f05 f06 f07 f08 f09 f10 f11

The wildcard is able to replace zero or more arbitrary characters, for example:

$ ls f*1

f01 f11

There is another way of representing files or directories that follow a pattern, execute this command:

$ ls f0[3,5,7]

f03 f05 f07

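The files selected are those whose last character is in the bracketed set [3,5,7]. Every character inside the brackets counts, so the commas are not needed as separators (they would match a literal comma); f0[357] selects the same files here. Similarly, a range of characters can be represented. See: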

$ ls f0[3-7]

f03 f04 f05 f06 f07

We will use those special characters to move files based on their parity. Execute:

$ mv f[0,1][1,3,5,7,9] odd

$ mv f[0,1][0,2,4,6,8] even

The commands above are equivalent to executing the explicit listing of sources:

$ mv f01 f03 f05 f07 f09 f11 odd

$ mv f02 f04 f06 f08 f10 even
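The whole parity exercise can be replayed in a scratch directory to confirm what each pattern matches. This sketch writes the bracket sets without commas, as an alternative spelling of the patterns used above, and compares the two spellings on the f0[...] example.

```shell
demo=$(mktemp -d)
cd "$demo"
touch f01 f02 f03 f04 f05 f06 f07 f08 f09 f10 f11
mkdir odd even

with_commas=$(echo f0[3,5,7])       # commas are just extra characters in the set
without_commas=$(echo f0[357])      # same matches on these files

mv f[01][13579] odd                 # second digit odd
mv f[01][02468] even                # second digit even
odd_files=$(cd odd && echo *)
even_files=$(cd even && echo *)
echo "$odd_files"
echo "$even_files"
```

Using echo with a pattern is a harmless way to preview which files a wildcard will match before handing it to mv or rm.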

Delete files and Folders

As we mentioned above, empty folders can be deleted with the command rmdir, but that only works if there are no subfolders or files inside the folder that you want to delete. See, for example, what happens if you try to delete the folder called odd:

$ rmdir odd

rmdir: failed to remove `odd': Directory not empty

If you want to delete odd, you can do it in two ways. The command rm allows you to delete one or more files entered as arguments. Let's delete all the files inside odd, followed by the deletion of the folder odd itself:

$ rm odd/*

$ rmdir odd

Another option is to delete a folder recursively; this is a powerful but also dangerous option. Unlike on Windows or macOS, there is no “Trash Can” or “Recycling Bin” on the Linux command line from which deleted files can be recovered; deleting is permanent. Let's delete the folder even recursively:

$ rm -r even

Summary of Basic Commands

The purpose of this brief tutorial is to familiarize you with the most common commands used in UNIX environments. We have shown ten commands that you will be using very often in your interaction. These 10 basic commands and one editor from the next section are all that you need to be ready to submit jobs on the cluster.

The next table summarizes those commands.

| Command | Description | Examples |
|---|---|---|
| echo | Display a given message on the screen | $ echo "This is a message" |
| cat | Display the contents of a file on screen; concatenate files | $ cat my_file |
| date | Show the current date on screen | $ date (prints, e.g., Sun Jul 26 15:41:03 EDT 2020) |
| pwd | Return the path to the current working directory | $ pwd (prints, e.g., /users/username) |
| cd | Change directory | $ cd sub_folder |
| mkdir | Create directory | $ mkdir new_folder |
| touch | Change the access and modification time of a file; create empty files | $ touch new_file |
| cp | Copy a file to another location; copy several files into a destination directory | $ cp old_file new_file |
| mv | Move a file to another location; move several files into a destination folder | $ mv old_name new_name |
| rm | Remove one or more files from the file system tree | $ rm trash_file, $ rm -r full_folder |

Exercise 1

Get into Thorny Flat with your training account and execute the commands ls, date, and cal.

Exit from the cluster with exit.

So let’s try our first command, which will list the contents of the current directory:

[training001@srih0001 ~]$ ls -al

total 64

drwx------ 4 training001 training 512 Jun 27 13:24 .

drwxr-xr-x 151 root root 32768 Jun 27 13:18 ..

-rw-r--r-- 1 training001 training 18 Feb 15 2017 .bash_logout

-rw-r--r-- 1 training001 training 176 Feb 15 2017 .bash_profile

-rw-r--r-- 1 training001 training 124 Feb 15 2017 .bashrc

-rw-r--r-- 1 training001 training 171 Jan 22 2018 .kshrc

drwxr-xr-x 4 training001 training 512 Apr 15 2014 .mozilla

drwx------ 2 training001 training 512 Jun 27 13:24 .ssh

Command not found

If the shell can’t find a program whose name is the command you typed, it will print an error message such as:

$ ks

ks: command not found

Usually this means that you have mis-typed the command.

Exercise 2

Commands in Unix/Linux are very stable with some existing for decades now. This exercise begins to give you a feeling of the different parts of a command.

Execute the command cal. We executed the command before, but this time execute it again like this: cal -y. You should get an output like this:

[training001@srih0001 ~]$ cal -y
                              2021
      January               February               March
Su Mo Tu We Th Fr Sa  Su Mo Tu We Th Fr Sa  Su Mo Tu We Th Fr Sa
                1  2      1  2  3  4  5  6      1  2  3  4  5  6
 3  4  5  6  7  8  9   7  8  9 10 11 12 13   7  8  9 10 11 12 13
10 11 12 13 14 15 16  14 15 16 17 18 19 20  14 15 16 17 18 19 20
17 18 19 20 21 22 23  21 22 23 24 25 26 27  21 22 23 24 25 26 27
24 25 26 27 28 29 30  28                    28 29 30 31
31
       April                  May                   June
Su Mo Tu We Th Fr Sa  Su Mo Tu We Th Fr Sa  Su Mo Tu We Th Fr Sa
             1  2  3                     1         1  2  3  4  5
 4  5  6  7  8  9 10   2  3  4  5  6  7  8   6  7  8  9 10 11 12
11 12 13 14 15 16 17   9 10 11 12 13 14 15  13 14 15 16 17 18 19
18 19 20 21 22 23 24  16 17 18 19 20 21 22  20 21 22 23 24 25 26
25 26 27 28 29 30     23 24 25 26 27 28 29  27 28 29 30
                      30 31
        July                 August              September
Su Mo Tu We Th Fr Sa  Su Mo Tu We Th Fr Sa  Su Mo Tu We Th Fr Sa
             1  2  3   1  2  3  4  5  6  7            1  2  3  4
 4  5  6  7  8  9 10   8  9 10 11 12 13 14   5  6  7  8  9 10 11
11 12 13 14 15 16 17  15 16 17 18 19 20 21  12 13 14 15 16 17 18
18 19 20 21 22 23 24  22 23 24 25 26 27 28  19 20 21 22 23 24 25
25 26 27 28 29 30 31  29 30 31              26 27 28 29 30
      October               November              December
Su Mo Tu We Th Fr Sa  Su Mo Tu We Th Fr Sa  Su Mo Tu We Th Fr Sa
                1  2      1  2  3  4  5  6            1  2  3  4
 3  4  5  6  7  8  9   7  8  9 10 11 12 13   5  6  7  8  9 10 11
10 11 12 13 14 15 16  14 15 16 17 18 19 20  12 13 14 15 16 17 18
17 18 19 20 21 22 23  21 22 23 24 25 26 27  19 20 21 22 23 24 25
24 25 26 27 28 29 30  28 29 30              26 27 28 29 30 31
31

Another very simple command that is very useful in HPC is date. Without any arguments, it prints the current date to the screen.

$ date

Sun Jul 26 15:41:03 EDT 2020

Exercise 3

Create two folders called one and two. In one create the empty file none1 and in two create the empty file none2.

Create also, in those two folders, the files date1 and date2 by redirecting the output from the command date using >.

$ date > date1

Check with cat that those files contain dates.

Now, create the folders empty_files and dates and move the corresponding files none1 and none2 to empty_files, and do the same for date1 and date2.

The folders one and two should be empty now; delete them with rmdir. Do the same with the folders empty_files and dates with rm -r.

Exercise 4

The command line is powerful enough even to do programming. Execute the command below and see the answer.

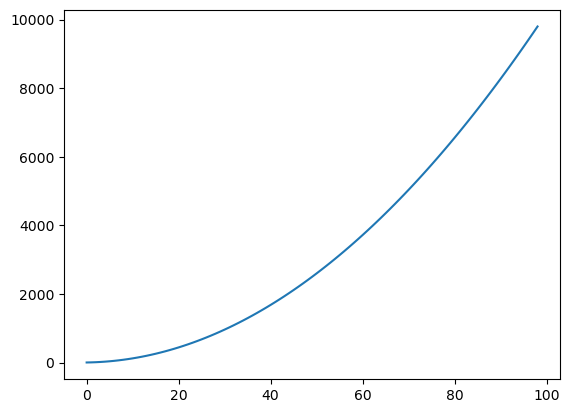

[training001@srih0001 ~]$ n=1; while test $n -lt 10000; do echo $n; n=`expr 2 \* $n`; done
1
2
4
8
16
32
64
128
256
512
1024
2048
4096
8192

If you are not getting this output, check the command line very carefully. Even small changes could be interpreted by the shell as entirely different commands, so you need to be extra careful and gather insight when commands are not doing what you want.

Now the challenge consists of tweaking the command line above to show the calendar for August for the next 10 years.

Hint

Use the command cal -h to get a summary of the arguments needed to show just one month for one specific year. You can use expr to increase n by one on each cycle, but you can also use n=$((n+1)).
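As a minimal sketch of a counter that grows by one on each cycle (not the solution to the challenge), the loop below uses shell arithmetic expansion, written with double parentheses as $((...)). The variable names n and m and the limit of 5 are arbitrary choices for this illustration.

```shell
# Count from 1 to 5, increasing n by one on each cycle.
n=1
while test $n -le 5; do
    echo $n
    n=$((n+1))        # arithmetic expansion: note the double parentheses
done

# The same kind of increment written with expr.
m=1
m=`expr $m + 1`
echo $m
```

Both forms give the same result. Arithmetic expansion is handled by the shell itself, while expr is a separate program that the shell has to start on every cycle.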

Grabbing files from the internet

To download files from the internet, a widely used command-line tool is wget.

The syntax is relatively straightforward: wget https://some/link/to/a/file.tar.gz

Downloading the Drosophila genome

The Drosophila melanogaster reference genome is located at the following website: http://metazoa.ensembl.org/Drosophila_melanogaster/Info/Index. Download it to the cluster with wget.

- cd to your genome directory

- Copy this URL and paste it onto the command line:

$> wget ftp://ftp.ensemblgenomes.org:21/pub/metazoa/release-51/fasta/drosophila_melanogaster/dna/Drosophila_melanogaster.BDGP6.32.dna_rm.toplevel.fa.gz

Working with compressed files, using unzip and gunzip

The file we just downloaded is gzipped (has the .gz extension). You can uncompress it with gunzip filename.gz.

File decompression reference:

- .tar.gz - tar -xzvf archive-name.tar.gz

- .tar.bz2 - tar -xjvf archive-name.tar.bz2

- .zip - unzip archive-name.zip

- .rar - unrar x archive-name.rar

- .7z - 7z x archive-name.7z

However, sometimes we will want to compress files ourselves to make file transfers easier. The larger the file, the longer it will take to transfer. Moreover, we can pack a whole bunch of little files into one big file to make it easier on us (no one likes transferring 70000 little files)!

The two compression commands we’ll probably want to remember are the following:

- Compress a single file with Gzip - gzip filename

- Compress a lot of files/folders with Gzip - tar -czvf archive-name.tar.gz folder1 file2 folder3 etc
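To see the compress and decompress commands working together, here is a small round trip that can be run safely in a temporary directory. The folder and file names (results, run1.txt, run2.txt, restored) are made up for this sketch, and the -v flag is left out only to keep the output short. The flags used are -c (create), -t (list), -x (extract), -z (gzip), -f (archive file) and -C (directory to extract into).

```shell
# Work in a throwaway directory so nothing in your account is touched.
workdir=$(mktemp -d)
cd "$workdir"

# Create a folder with a few small files (hypothetical names).
mkdir results
echo "alpha" > results/run1.txt
echo "beta"  > results/run2.txt

# Pack and compress the whole folder into a single archive.
tar -czf results.tar.gz results

# List the archive contents without extracting anything.
tar -tzf results.tar.gz

# Extract into a separate folder to confirm the round trip.
mkdir restored
tar -xzf results.tar.gz -C restored
cat restored/results/run1.txt
```

Listing an archive with -t before extracting is a cheap way to check what it will write into your directory.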

Wildcards, shortcuts, and other time-saving tricks

Wild cards

The “*” wildcard:

Navigate to the ~/workshops_hands-on/Introduction_HPC/2._Command_Line_Interface/ABINIT directory.

The “*” character is a shortcut for “everything”. Thus, if you enter ls *, you will see all of the contents of a given directory. Now try this command:

$ ls 2*

This lists every file that starts with a 2. Try this command:

$ ls /usr/bin/*.sh

This lists every file in /usr/bin directory that ends in the characters .sh. “*” can be placed anywhere in your pattern. For example:

$ ls t17*.nc

This lists only the files that begin with ‘t17’ and end with .nc.

So, how does this actually work? The Shell (bash) considers an asterisk “*” to be a wildcard character that can match zero or more occurrences of any character. The shell replaces the pattern with the list of matching file names before the command (here ls) is run.

Tip - An asterisk/star is only one of the many wildcards in Unix, but this is the most powerful one, and we will be using this one the most for our exercises.

The “?” wildcard:

Another wildcard that is sometimes helpful is ?. ? is similar to * except that it is a placeholder for exactly one position. Recall that * can represent any number of following positions, including no positions. To highlight this distinction, let’s look at a few examples. First, try this command:

$ ls /bin/d*

This will display all files in /bin/ that start with “d” regardless of length. However, if you only wanted the things in /bin/ that start with “d” and are two characters long, then you can use:

$ ls /bin/d?

Lastly, you can chain together multiple “?” marks to help specify a length. In the example below, you would be looking for all things in /bin/ that start with a “d” and have a name length of three characters.

$ ls /bin/d??
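Because the contents of /bin/ differ between systems, the difference between * and ? is easier to check in a directory whose contents you control. The sketch below creates a few empty files with made-up names in a temporary directory and uses echo instead of ls, to stress that it is the shell that expands the patterns.

```shell
# Temporary directory with a known set of file names (all hypothetical).
demo=$(mktemp -d)
cd "$demo"
touch d da db dab data.txt

all_d=$(echo d*)      # d followed by anything, including nothing
two=$(echo d?)        # d followed by exactly one character
three=$(echo d??)     # d followed by exactly two characters

echo "d*  matches: $all_d"
echo "d?  matches: $two"
echo "d?? matches: $three"
```

Here d* matches all five names, d? matches only da and db, and d?? matches only dab.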

Exercise

Do each of the following using a single ls command without navigating to a different directory.

- List all of the files in /bin that start with the letter ‘c’

- List all of the files in /bin that contain the letter ‘a’

- List all of the files in /bin that end with the letter ‘o’

BONUS: Use one command to list all of the files in /bin that contain either ‘a’ or ‘c’. (Hint: you might need to use a different wildcard here. Refer to this post for some ideas.)

Shortcuts

There are some very useful shortcuts that you should also know about.

Home directory or “~”

Dealing with the home directory is very common. In the shell, the tilde character “~” is a shortcut for your home directory. Let’s first navigate to the lesson directory (try to use tab completion here!):

$ cd

$ cd ~/workshops_hands-on/Introduction_HPC/2._Command_Line_Interface

Then enter the command:

$ ls ~

This prints the contents of your home directory without you having to type the full path. This is because the tilde “~” is equivalent to “/home/username”, as we had mentioned in the previous lesson.

Parent directory or “..”

Another shortcut you encountered in the previous lesson is “..”:

$ ls ..

The shortcut .. always refers to the parent directory of whatever directory you are currently in. So, ls .. will print the contents of the directory one level above your current one (Introduction_HPC, if you followed the cd command above). You can also chain these .. together, separated by /:

$ ls ../..

This prints the contents of the directory two levels above your current one (workshops_hands-on, if you followed the cd command above).

Current directory or “.”

Finally, the special directory . always refers to your current directory. So, ls and ls . will do the same thing - they print the contents of the current directory. This may seem like a useless shortcut, but recall that we used it earlier when we copied over the data to our home directory.

To summarize, the commands ls ~ and ls ~/. do exactly the same thing. These shortcuts can be convenient when you navigate through directories!
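The three shortcuts can be checked with a short experiment. The sketch below builds a small directory tree with made-up names (project and data) inside a temporary directory, and records where each shortcut points using cd and pwd inside a subshell, so your actual location does not change.

```shell
# Temporary directory tree: <base>/project/data (hypothetical names).
base=$(mktemp -d)
mkdir -p "$base/project/data"
cd "$base/project/data"

here=$(cd . && pwd)          # "." is the current directory
parent=$(cd .. && pwd)       # ".." is one level up
grand=$(cd ../.. && pwd)     # "../.." is two levels up
home=$(echo ~)               # "~" expands to your home directory

echo "current:       $here"
echo "parent:        $parent"
echo "two levels up: $grand"
echo "home:          $home"
```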

Command History

You can easily access previous commands by hitting the up arrow key on your keyboard. This way, you can step backward through your command history. On the other hand, the down arrow key takes you forward in the command history.

Try it out! While on the command prompt, hit the up arrow a few times, and then hit the down arrow a few times until you are back to where you started.

You can also review your recent commands with the history command. Just enter:

$ history

You should see a numbered list of commands, including the history command you just ran!

Only a certain number of commands can be stored and displayed with the history command by default, but you can increase or decrease it to a different number. It is outside the scope of this workshop, but feel free to look it up after class.

NOTE: So far, we have only run very short commands that have very few or no arguments. It would be faster to just retype them than to check the history. However, as you start to run analyses on the command line, you will find that the commands are longer and more complex, and the history command will be very useful!

Cancel a command or task

Sometimes as you enter a command, you realize that you don’t want to continue or run the current line. Instead of deleting everything you have entered (which could be very long), you could quickly cancel the current line and start a fresh prompt with Ctrl + C.

$ # Run some random words, then hit "Ctrl + C". Observe what happens

Another useful case for Ctrl + C is when a task is running that you would like to stop. In order to illustrate this, we will briefly introduce the sleep command. sleep N pauses your command line from additional entries for N seconds. If we would like to have the command line not accept entries for 20 seconds, we could use:

$ sleep 20

While your sleep command is running, you may decide that in fact, you do want to have your command line back. To terminate the rest of the sleep command simply type:

Ctrl + C

This should terminate the rest of the sleep command. While this use may seem a bit silly, you will likely encounter many scenarios when you accidentally start running a task that you didn’t mean to start, and Ctrl + C can be immensely helpful in stopping it.

Other handy command-related shortcuts

- Ctrl + A will bring you to the start of the command you are writing.

- Ctrl + E will bring you to the end of the command.

Exercise

- Checking the history command output, how many commands have you typed in so far?

- Use the up arrow key to check the command you typed before the history command. What is it? Does it make sense?

- Type several random characters on the command prompt. Can you bring the cursor to the start with Ctrl + A? Next, can you bring the cursor to the end with Ctrl + E? Finally, what happens when you use Ctrl + C?

Summary: Commands, options, and keystrokes covered

~ # home dir

. # current dir

.. # parent dir

* # wildcard

ctrl + c # cancel current command

ctrl + a # start of line

ctrl + e # end of line

history

Advanced Bash Commands and Utilities

As you begin working more with the Shell, you will discover that there are mountains of different utilities at your fingertips to help increase command-line productivity. So far, we have introduced you to some of the basics to help you get started. In this lesson, we will touch on more advanced topics that can be very useful as you conduct analyses in a cluster environment.

Configuring your shell

In your home directory, there are two hidden files, .bashrc and .bash_profile. These files contain all the startup configuration and preferences for your command line interface and are loaded before your Terminal loads the shell environment. Modifying these files allows you to change your preferences for features like your command prompt, the colors of text, and add aliases for commands you use all the time.

NOTE: These file names begin with a dot (.), which makes them hidden files. To view all hidden files in your home directory, you can use:

$ ls -al ~/

.bashrc versus .bash_profile

You can put configurations in either file, and you can create either if it doesn’t exist. But why two different files? What is the difference?

The difference is that .bash_profile is executed for login shells, while .bashrc is executed for interactive non-login shells. It is helpful to have these separate files when there are preferences you only want to see on login and not every time you open a new terminal window. For example, suppose you would like to print some lengthy diagnostic information about your machine (load average, memory usage, current users, etc.). The .bash_profile would be a good place for it, since you would only want it displayed once when starting out.

Most of the time you don’t want to maintain two separate configuration files for login and non-login shells. For example, when you export a $PATH (as we had done previously), you want it to apply to both. You can do this by sourcing .bashrc from within your .bash_profile file. Take a look at your .bash_profile file; this has already been done for you:

$ less ~/.bash_profile

You should see the following lines:

if [ -f ~/.bashrc ]; then

source ~/.bashrc

fi

What this means is that if a .bashrc file exists, all of its configuration settings will be sourced upon logging in. Any settings you would like applied to all shell windows (login and interactive) can simply be added directly to the .bashrc file rather than in two separate files.
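The same pattern can be tried without touching your real configuration. In the sketch below, a temporary file stands in for ~/.bashrc, and the settings inside it (the PROJECT_DIR variable and the ll alias) are hypothetical examples of what one might keep there. The dot command used here is the portable spelling of source.

```shell
# Stand-in for ~/.bashrc so that your real configuration is not modified.
rcfile=$(mktemp)

cat > "$rcfile" <<'EOF'
# Example settings one might keep in .bashrc (hypothetical values)
export PROJECT_DIR="$HOME/projects"
alias ll='ls -al'
EOF

# Same pattern as in .bash_profile: read the file only if it exists.
if [ -f "$rcfile" ]; then
    . "$rcfile"       # "." is equivalent to "source" in bash
fi

echo "PROJECT_DIR is $PROJECT_DIR"
```

After the file has been read, the variable and the alias are available in the current shell, which is exactly what happens to the contents of .bashrc at login.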

Changing the prompt

In your file .bash_profile, you can change your prompt by adding this:

PS1="\[\033[35m\]\t\[\033[m\]-\[\033[36m\]\u\[\033[m\]@$HOST_COLOR\h:\[\033[33;1m\]\w\[\033[m\]\$ "

export PS1
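The prompt string is easier to read once it is broken into its building blocks. The sketch below is a shorter prompt, written as an illustration rather than as a replacement for the one above. It uses a single color and leaves out the $HOST_COLOR variable, which would need to be defined elsewhere. Single quotes are used so that every backslash sequence reaches bash unchanged.

```shell
# Building blocks (bash prompt escapes):
#   \t         current time, 24-hour HH:MM:SS
#   \u         your username
#   \h         hostname up to the first dot
#   \w         current working directory
#   \$         "#" for root, "$" for everybody else
#   \[ ... \]  wrap non-printing characters such as color codes
#   \033[36m   switch the text color to cyan
#   \033[m     reset the text color to the default
PS1='\t \[\033[36m\]\u\[\033[m\]@\h:\w\$ '
export PS1
```

With this setting the prompt shows the time, the username in cyan, the host name and the current directory, for instance 15:41:03 training001@srih0001:~$ on the training account used in this lesson.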