Introduction to GPU Computing

Overview

Teaching: 30 min

Exercises: 0 minQuestions

What is GPU?

How a GPU is different from a CPU?

Which scientific problems work better on GPUs?

Objectives

Learn about how and why GPUs are important today in scientific computing

Understand the differences and similarities of GPUs and CPUs for scientific computing

Heterogeneous Parallel Computing

For basically all the 21st century two trends have dominated the area of High Perfomance Computing. The first one is the increase number of cores in CPUs from the dual core to the current CPUs on consumer market with 4, 8 and more cores. The second trend is the availability of modern coprocessors.

Coprocessors are devices that take some processing out of the CPU by specializing on specific tasks. Those coprocessors have not all the capabilities of CPUs and in general are not able to act as a CPU but they are very efficient in certain tasks. Examples of coprocessors in recent years are the Intel Xeon Phi coprocessors and most notoriously for this lesson GPUs in particular those from NVIDIA that thanks to CUDA we will examine in these series of lectures.

These trends will most likely follow and enhance in the foreseeable future. CPUs will come with 20, 40 and even more cores per socket. GPUs will become more common in addition to another specialized coprocessors such as FPGAs, and neural processors.

In this lesson we will concentrate on parallel computing with CUDA and OpenACC. The central theme about using GPUs is to manage a large number of computing threads a number that is 2 or 3 orders of magnitude larger than the number of computing threads on CPUs. In contrast to what is called multithreading parallel computing, on GPUs we talk about many-threading parallel computing.

The first GPUs used for general computing, came from repurposing GPUs. GPUs were originally designed to compute textures, graphics and rotations of 3D graphics for display on screen. These tasks do not require for most part double precision numbers. Only relatively recently double precision capabilities were integrated in those GPUs but for most part significant performance edge can be achieved when you can work with single precision floats.

Memory bandwidth is another important constrainer in GPUs. As GPUs have their own memory and cannot manage the central RAM, the ability to perform better on a GPU depends on enough processing being done with the data allocated on the GPU memory before the data is copied back to the Host RAM. A GPU must be capable of transferring large amounts of data, processing the data for a meaningful mount of time and return the results back in order to produce a positive return.

As such GPUs were designed for very specific tasks and not all tasks can be efficiently being offloaded to the GPU. There are tasks for which CPUs perform better and will continue to do so in the near future. At the end we will have what we called heterogeneous parallel computing a paradigm where parallel computing is exploited at 3 different and even entangled levels.

First is CPU multithreading execution. In the next lesson we will demonstrate one example of OpenMP a popular model for these kind of parallelism. In CPU multithreading we use the ability of modern CPUs to have several cores that see the same memory. The next level is coprocessor parallelism, exemplified with OpenACC, OpenCL and CUDA. Using for example GPUs certain tasks what require many threads with relatively small amount of memory can be processed on those devices and get an advantage from them. The final level is distributed computing with a prototypical case is MPI. We will demonstrate a brief example of MPI in the next lesson but it is out of scope for this workshop.

Accelerators (like GPUs) in the world largest supercomputers

NVIDIA GPUs Powered 168 of The Top500 Supercomputers On The Planet. Eight of the world’s top 10 supercomputers now use NVIDIA GPUs, InfiniBand networking or both. They include the most powerful systems in the U.S., Europe and China. The fastest supercomputers all rely on Accelerators. More than 50% of the computational power in the top500 comes from accelerators.

As per the latest figures, it looks like NVIDIA GPUs are powering the bulk of the supercomputers in the Top500 list with a total of 168 systems while AMD’s CPUs and GPUs power a total of 121 supercomputers. At the same time, Supercomputers housing AMD and NVIDIA GPU-based accelerators are largely running Intel CPUs which cover around 400 supercomputers and that’s a huge figure & while the number of systems running Intel CPUs are in a clear lead in quantity, AMD actually wins the crown for the fastest supercomputer around in the form of Frontier.

Frontier

HPE Cray EX235a, AMD Optimized 3rd Generation EPYC 64C 2GHz, AMD Instinct MI250X, Slingshot-11, HPE DOE/SC/Oak Ridge National Laboratory United States

- CPU cores 8,699,904

- Rmax (PFlop/s): 1,194.00

- Rpeak (PFlop/s) : 1,679.82

- Power (kW) : 22,703

Summit

IBM Power System AC922, IBM POWER9 22C 3.07GHz, NVIDIA Volta GV100, Dual-rail Mellanox EDR Infiniband, IBM DOE/SC/Oak Ridge National Laboratory United States

- CPU cores 2,414,592

- Rmax (PFlop/s): 148.60

- Rpeak (PFlop/s) : 200.79

- Power (kW) : 10,096

WVU High-Performance Computer Clusters

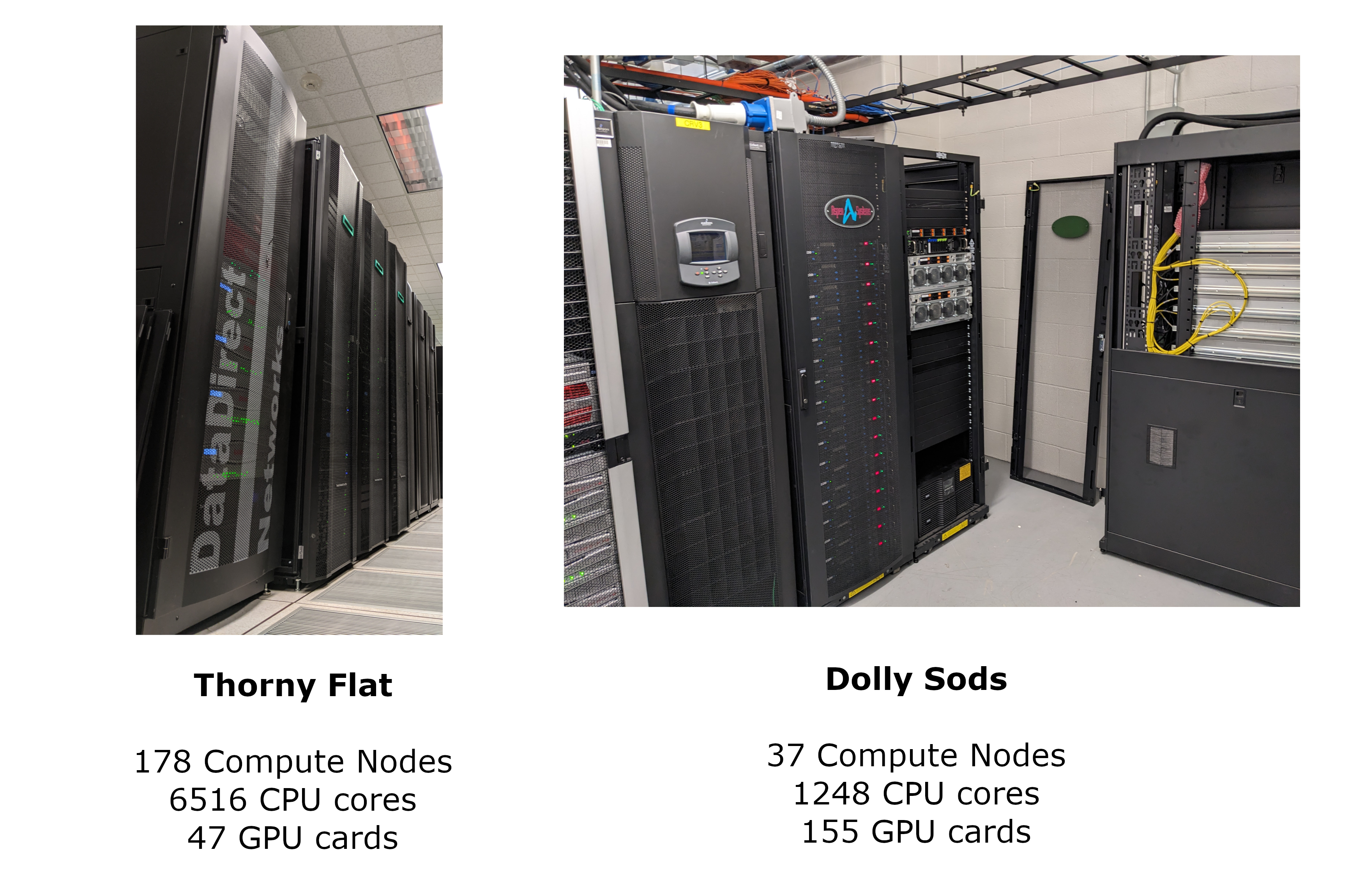

West Virginia University has 2 main clusters: Thorny Flat and Dolly Sods, our newest cluster that will be available later in August 2023.

Thorny Flat

Thorny Flat is a general-purpose HPC cluster with 178 compute nodes, most nodes have 40 CPU cores. The total CPU core count is 6516 cores. There are 47 NVIDIA GPU cards ranging from P6000, RTX6000, and A100

Dolly Sods

Dolly Sods is our newest cluster and it is specialized in GPU computing. It has 37 nodes and 155 NVIDIA GPU cards ranging from A30, A40 and A100. The total CPU core count is 1248.

GPUs on Thorny Flat

NVIDIA Quadro RTX6000

| RTX 6000 | P 6000 | |

|---|---|---|

| Architecture | Turing | Pascal |

| CUDA Parallel-Processing Cores | 4,608 | 3,840 |

| Bus width | 384 bit | 384 bit |

| Memory Clock | 1750 | 1127 MHz |

| NVIDIA Tensor Cores | 576 | |

| NVIDIA RT Cores | 72 | |

| GPU Memory | 24 GB GDDR6 | 24 GB GDDR5X |

| FP32 Performance | 16.3 TFLOPS | 12.63 TFLOPS |

| FP64 Performance | 564 GFLOPS | 394 GFLOPS |

| Max Power Consumption | 260 W | 250 W |

| CUDA | 6.1 | 6.1 |

| OpenCL | 1.2 | 1.2 |

Knowing the GPUs on the machine

A first view of the availability of GPUs can be seen with the command:

$> nvidia-smi

Thorny Flat has several machines with GPUs the output in one of them is:

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 455.32.00 Driver Version: 455.32.00 CUDA Version: 11.1 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 Quadro P6000 Off | 00000000:37:00.0 Off | Off |

| 16% 28C P0 58W / 250W | 0MiB / 24449MiB | 0% E. Process |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 1 Quadro P6000 Off | 00000000:AF:00.0 Off | Off |

| 17% 27C P0 57W / 250W | 0MiB / 24449MiB | 0% E. Process |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 2 Quadro P6000 Off | 00000000:D8:00.0 Off | Off |

| 16% 28C P0 58W / 250W | 0MiB / 24449MiB | 0% E. Process |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 455.32.00 Driver Version: 455.32.00 CUDA Version: 11.1 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 Quadro RTX 6000 Off | 00000000:1A:00.0 Off | 0 |

| N/A 38C P0 55W / 250W | 0MiB / 22698MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 1 Quadro RTX 6000 Off | 00000000:1B:00.0 Off | 0 |

| N/A 39C P0 56W / 250W | 0MiB / 22698MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 2 Quadro RTX 6000 Off | 00000000:3D:00.0 Off | 0 |

| N/A 39C P0 57W / 250W | 0MiB / 22698MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 3 Quadro RTX 6000 Off | 00000000:3E:00.0 Off | 0 |

| N/A 40C P0 57W / 250W | 0MiB / 22698MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 4 Quadro RTX 6000 Off | 00000000:8B:00.0 Off | 0 |

| N/A 37C P0 56W / 250W | 0MiB / 22698MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 5 Quadro RTX 6000 Off | 00000000:8C:00.0 Off | 0 |

| N/A 37C P0 56W / 250W | 0MiB / 22698MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 6 Quadro RTX 6000 Off | 00000000:B5:00.0 Off | 0 |

| N/A 37C P0 54W / 250W | 0MiB / 22698MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 7 Quadro RTX 6000 Off | 00000000:B6:00.0 Off | 0 |

| N/A 37C P0 57W / 250W | 0MiB / 22698MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

Launching interactive sessions with GPUs

A very simple way of launching an interactive job is using the command srun:

The following is an example of a request for an interactive job asking for 1 GPU 8 CPU cores for 2 hours:

trcis001:~$ srun -p comm_gpu_inter -G 1 -t 2:00:00 -c 8 --pty bash

You can verify the assigned GPU using the command nvidia-smi:

trcis001:~$ srun -p comm_gpu_inter -G 1 -t 2:00:00 -c 8 --pty bash

srun: job 22599 queued and waiting for resources

srun: job 22599 has been allocated resources

tbegq200:~$ nvidia-smi

Wed Jan 18 13:27:01 2023

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 515.43.04 Driver Version: 515.43.04 CUDA Version: 11.7 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA A100-PCI... Off | 00000000:3B:00.0 Off | 0 |

| N/A 28C P0 31W / 250W | 0MiB / 40960MiB | 0% Default |

| | | Disabled |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

The command above shows an NVIDIA A100 as the GPU assigned to us during the lifetime of the job.

Key Points

On the largest Supercomputers in the world, most of the computational power comes from accelerators such as GPUs

Not everything a CPU can do a GPU can do.

GPUs are particularly good on a restricted set of tasks

Paradigms of Parallel Computing

Overview

Teaching: 30 min

Exercises: 0 minQuestions

Which are the ways we can use parallel computing?

On which of them GPUs can be used?

Objectives

Review one example of a code written with several methods of parallelization

We will pursue an exploration of several paradigms in parallel computing, from purely CPU computing to GPU computing. The paradigms chosen were OpenMP, OpenACC, OpenCL, MPI, and CUDA. By using the same problem as baseline we hope to give a sense of perspective of how those different alternatives of parallelization work in practice.

We will be solving a very simple problem. The DAXPY function is the name given in BLAS to a routine that implements the function Y = A * X + Y where X and Y may be either native double-precision valued matrices or numeric vectors, and A is a scalar. We will be computing a trigonometric identity using DAXPY as the function that will be the target of parallelization.

We will compute this formula:

[cos(2x) = cos^2(x)-sin^2(x)]

For an array of vectors in a domain in the range of \(x=[0:\pi]\)

All the codes in this lesson will be compiled with the NVIDIA HPC compilers. That will give us some uniformity on the compiler choice as this compiler supports several of the parallel models that will be demonstrated.

To access the compilers on Thorny you need to load the module:

$> module load lang/nvidia/nvhpc/23.3

To check that the compiler is available execute:

$> nvc --version

You should get something like:

nvc 23.3-0 64-bit target on x86-64 Linux -tp skylake-avx512

NVIDIA Compilers and Tools

Copyright (c) 2022, NVIDIA CORPORATION & AFFILIATES. All rights reserved.

Serial Code

We will start without parallelization. The term given to codes that use one single sequence of instructions is serial. We will use this to explain a few of the basic elements of C programming for those not familiar with the language.

The C programming language uses plain text files to describe the program. The code needs to be compiled. Compiling means that using a software package called compiler the text file is interpreted resulting in a new file that contains the instructions that the computer can follow directly to run the program.

The program below shows a code that populates two vectors and computes a new vector, the subtraction of the input vectors. Here is the code:

#include <stdio.h>

#include <stdlib.h>

#include <math.h>

#define VECTOR_SIZE 100

int main( int argc, char* argv[] )

{

int i;

double alpha=1.0;

// Number of bytes to allocate for N doubles

size_t bytes = VECTOR_SIZE*sizeof(double);

// Allocate memory for arrays X, A, B, and C on host

double *X = (double*)malloc(bytes);

double *A = (double*)malloc(bytes);

double *B = (double*)malloc(bytes);

double *C = (double*)malloc(bytes);

for(i=0;i<VECTOR_SIZE;i++){

X[i]=M_PI*(double)(i+1)/VECTOR_SIZE;

A[i]=B[i]=C[i]=0.0;

}

for(i=0;i<VECTOR_SIZE;i++){

A[i]=cos(X[i])*cos(X[i]);

B[i]=sin(X[i])*sin(X[i]);

C[i]=alpha*A[i]-B[i];

}

if (VECTOR_SIZE<=100){

for(i=0;i<VECTOR_SIZE;i++) printf("%9.5f %9.5f %9.5f %9.5f\n",X[i],A[i],B[i],C[i]);

}

else{

for(i=VECTOR_SIZE-10;i<VECTOR_SIZE;i++) printf("%9.5f %9.5f %9.5f %9.5f\n",X[i],A[i],B[i],C[i]);

}

// Release memory

free(A);

free(B);

free(C);

return 0;

}

Most of this code will be seen in the other implementations, for those unfamiliar with C programming, the code will be reviewed in some detail. That should facilitate understanding when moving into the parallel versions of this code.

The first lines in the code are:

#include <stdio.h>

#include <stdlib.h>

#include <math.h>

#define VECTOR_SIZE 100

The first three indicate which files must be processed to access functions that will appear in the code below. In particular we need stdio.h because we are calling the function printf. We need stdlib.h for using the functions to allocate malloc and deallocate free arrays in memory. Finally we need math.h because we are calling math functions such as sin and cos.

The next line is a preprocessor instruction. Any place in the code where the word VECTOR_SIZE appears, excluding string constants, will be replace by the characters 100 before sending the code to the actual compiler. For us that help us to have a single place to change the code in case we want a larger or shorter array. As it is the lenght of the array is hardcoded meaning that in order to change the lenght of the arrays the source needs to be changed and recompiled.

The next lines are:

int main( int argc, char* argv[] )

{

int i;

double alpha=1.0;

// Number of bytes to allocate for N doubles

size_t bytes = VECTOR_SIZE*sizeof(double);

// Allocate memory for arrays X, A, B, and C on host

double *X = (double*)malloc(bytes);

double *A = (double*)malloc(bytes);

double *B = (double*)malloc(bytes);

double *C = (double*)malloc(bytes);

The first line in this chunk, indicates the main function on any program written in C. The code starts execution exactly starting on that line. A program written in C can read command line arguments from the shell and those arguments are stored in the array variable argv and the number of arguments with the integer argc

Next lines are declarations of the variables and the kind of each variable. Different from higher level languages like Python or R, in C each variable must have a very definite kind. int for integers, double for floating point numbers, ie, truncated real numbers. size_t is a kind defined on the stdlib.h header used to declare the number of bytes to allocate on each array.

The final lines are declarations for the 4 arrays that we will use. One for the domain X, the array A to store $cos(x)^2$, the array B to store $sin(x)^2$ and the array C for storing the difference of those two arrays.

Next lines are the core of the program:

for(i=0;i<VECTOR_SIZE;i++){

X[i]=M_PI*(double)(i+1)/VECTOR_SIZE;

A[i]=B[i]=C[i]=0.0;

}

for(i=0;i<VECTOR_SIZE;i++){

A[i]=cos(X[i])*cos(X[i]);

B[i]=sin(X[i])*sin(X[i]);

C[i]=alpha*A[i]-B[i];

}

There are two loops in this chunk of code. The counter is the integer i that starts on 0 and stops before the variable reaches VECTOR_SIZE. In C language, the index of vectors of length N start in 0 and end in N-1. After each cycle the variable is increased by one with the instructions i++ a short for i=i+1.

The vector X is filled with numbers starting with 0 and ending with $\pi$. Notice the use of M_PI, a constant declared in math.h that provides a long precision numerical value for PI. The other arrays are zeroed. Not really necessary but done here to show how several variables can receive the same value.

The next loop is actually the main piece of code that will change the most when we explore the different models of parallel programming. In this case, we have a single loop that will compute A, compute B and subtract those values to compute C.

The final portion of the code, shows the results of the calculations.

if (VECTOR_SIZE<=100){

for(i=0;i<VECTOR_SIZE;i++) printf("%9.5f %9.5f %9.5f %9.5f\n",X[i],A[i],B[i],C[i]);

}

else{

for(i=VECTOR_SIZE-10;i<VECTOR_SIZE;i++) printf("%9.5f %9.5f %9.5f %9.5f\n",X[i],A[i],B[i],C[i]);

}

// Release memory

free(A);

free(B);

free(C);

return 0;

}

Here we have a conditional, for small arrays we will print the entire set of arrays, X, A, B, and C as 4 columns of text on the screen. For larger arrays only the last 10 elements are shown.

Notice how to indicate the format of the numbers that will be shown on the screen.

The string %9.5f means that each number will have 9 characters to display with 5 of them being decimals. The character f means that the content of a floating point number will be shown. Other indicators are f for floats, d for integers, and s for strings.

The next lines deallocate the memory used for the 4 arrays. Every program in written in C should return an integer at the end, with 0 meaning a successful completion of the program. Any other return value can be interpreted as some sort of failure.

To compile the code, execute:

$> nvc daxpy_serial.c

Execute the code with:

$> ./a.out

That concludes the first program. No parallelism here. The code will use just one core, no matter how big the array is and how many cores you have available on your computer. This is a serial code and can only execute one instruction at a time.

No we will use this first program to present a few popular models for parallel computing.

OpenMP: Shared-memory parallel programming.

The OpenMP (Open Multi-Processing) API is a model for parallel computing that supports multi-platform shared-memory multiprocessing programming in C, C++, and Fortran, on many platforms, instruction-set architectures and operating systems, including Solaris, AIX, HP-UX, Linux, macOS, and Windows. Shared-memory multiprocessing means that it can parallelize operations on multiple cores that are able to address a single pool of memory.

Programming in OpenMP consists of a set of compiler directives, library routines, and environment variables that influence run-time behavior. We will focus only on the C language but there are equivalent directives for C++ and Fortran.

Programming in OpenMP consists in adding a few directives in critical places of the code that we want to parallelize, it is very simple to use and requires minimal changes to the source code.

Consider the next code based on the serial code with OpenMP directives:

#include <stdio.h>

#include <stdlib.h>

#include <omp.h>

#include <math.h>

#define VECTOR_SIZE 100

int main( int argc, char* argv[] )

{

int i,id;

double alpha=1.0;

// Number of bytes to allocate for N doubles

size_t bytes = VECTOR_SIZE*sizeof(double);

// Allocate memory for arrays X, A, B, and C on host

double *X = (double*)malloc(bytes);

double *A = (double*)malloc(bytes);

double *B = (double*)malloc(bytes);

double *C = (double*)malloc(bytes);

#pragma omp parallel for shared(A,B) private(i,id)

for(i=0;i<VECTOR_SIZE;i++){

id = omp_get_thread_num();

printf("Initializing vectors id=%d working on i=%d\n", id,i);

X[i]=M_PI*(double)(i+1)/VECTOR_SIZE;

A[i]=cos(X[i])*cos(X[i]);

B[i]=sin(X[i])*sin(X[i]);

}

#pragma omp parallel for shared(A,B,C) private(i,id) schedule(static,10)

for(i=0;i<VECTOR_SIZE;i++){

id = omp_get_thread_num();

printf("Computing C id=%d working on i=%d\n", id,i);

C[i]=alpha*A[i]-B[i];

}

if (VECTOR_SIZE<=100){

for(i=0;i<VECTOR_SIZE;i++) printf("%9.5f %9.5f %9.5f %9.5f\n",X[i],A[i],B[i],C[i]);

}

else{

for(i=VECTOR_SIZE-10;i<VECTOR_SIZE;i++) printf("%9.5f %9.5f %9.5f %9.5f\n",X[i],A[i],B[i],C[i]);

}

// Release memory

free(A);

free(B);

free(C);

return 0;

}

The good thing about OpenMP is that not much of the code has to change in order to get a decent parallelization. As such, we will only focus of the 4 lines that have changed here:

#include <omp.h>

There is no need of importing any header for using OpenMP, however, we will use

function call to omp_get_thread_num() that is in the header above.

The next two sections with OpenMP directives are on top of the two loops, the initialization loop:

#pragma omp parallel for shared(A,B) private(i,id)

for(i=0;i<VECTOR_SIZE;i++){

id = omp_get_thread_num();

And the evaluation of the vector:

#pragma omp parallel for shared(A,B,C) private(i,id) schedule(static,10)

for(i=0;i<VECTOR_SIZE;i++){

id = omp_get_thread_num();

These two #pragma lines are sit on top of the for loops. From the point of view of the C language, they are just comments, so the language itself is not concerned with them. A C compiler that supports OpenMP will interpret those lines and will parallelize the for loops. The parallelization is possible because each evaluation of the i element is independent from the j element. That independence allows for different evaluations go to different cores.

There are several directives in the OpenMP specification. The directive omp parallel for, is specific to parallelize for loops. There are others for assigning executions to a core. For the parallel for directive there are arguments, one important set is the shared and private arguments that declare which variables will be shared on all the threads created by OpenMP and for which variables a copy will be created independently for each thread. The index i and the thread number id are always private. In this example, the variables are being declared explicitly even if that is not always needed.

The final argument is schedule(static,10) when the indices will be assigned in chunks of 10.

OpenMP is a good option for easy parallelization of codes, but before OpenMP 4.0 the model was restricted only to parallelization with CPUs.

MPI: Distributed Parallel Programming

Message Passing Interface (MPI) is a communication protocol for programming parallel computers. It is designed to be allow the coordination of multiple cores on machines when cores are potentially independent, such as HPC clusters.

With MPI point-to-point and collective communication are supported. Point-to-point communication is when one process sends a message to another specific process, different processes are differentiated by their rank, an integer that uniquely identifies the process.

MPI’s goals are high performance, scalability, and portability. MPI remains the dominant model used in high-performance computing today.

The code below solves a rewrite of the original serial program using point-to-point functions to distribute the computation across several MPI processes.

#include "mpi.h"

#include <stdio.h>

#include <stdlib.h>

#include <math.h>

#define MASTER 0

#define VECTOR_SIZE 100

int main (int argc, char *argv[])

{

double alpha=1.0;

double * A;

double * B;

double * C;

double * X;

// arrays a and b

int total_proc;

// total nuber of processes

int rank;

// rank of each process

int T;

// total number of test cases

long long int n_per_proc;

// elements per process

long long int i, j, n;

MPI_Status status;

// Initialization of MPI environment

MPI_Init (&argc, &argv);

MPI_Comm_size (MPI_COMM_WORLD, &total_proc); //The total number of processes running in parallel

MPI_Comm_rank (MPI_COMM_WORLD, &rank); //The rank of the current process

double * x;

double * ap;

double * bp;

double * cp;

double * buf;

n_per_proc = VECTOR_SIZE/total_proc;

if(VECTOR_SIZE%total_proc != 0) n_per_proc+=1; // to divide data evenly by the number of processors

x = (double *) malloc(sizeof(double)*n_per_proc);

ap = (double *) malloc(sizeof(double)*n_per_proc);

bp = (double *) malloc(sizeof(double)*n_per_proc);

cp = (double *) malloc(sizeof(double)*n_per_proc);

buf = (double *) malloc(sizeof(double)*n_per_proc);

for(i=0;i<n_per_proc;i++){

//printf("i=%d\n",i);

x[i]=M_PI*(rank*n_per_proc+i)/VECTOR_SIZE;

ap[i]=cos(x[i])*cos(x[i]);

bp[i]=sin(x[i])*sin(x[i]);

cp[i]=alpha*ap[i]-bp[i];

}

if (rank == MASTER){

for(i=0;i<total_proc;i++){

if(i==MASTER){

printf("Skip\n");

}

else{

printf("Receiving from %d...\n",i);

MPI_Recv(buf, n_per_proc, MPI_DOUBLE, i, 0, MPI_COMM_WORLD, &status);

for(j=0;j<n_per_proc;j++) printf("rank=%d i=%d x=%f c=%f\n",i,j,M_PI*(i*n_per_proc+j)/VECTOR_SIZE,buf[j]);

}

}

}

else{

MPI_Bsend(cp, n_per_proc, MPI_DOUBLE, 0, 0, MPI_COMM_WORLD);

}

MPI_Finalize();

//Terminate MPI Environment

return 0;

}

There are many changes in the code here, writing MPI code means important changes in the overall structure of the code as a result of organizing the sending and receiving of data from different processes.

The first change is the header:

#include "mpi.h"

All MPI programs must import that header to access the functions in the MPI API.

The next relevant chunk of code is:

MPI_Status status;

// Initialization of MPI environment

MPI_Init (&argc, &argv);

MPI_Comm_size (MPI_COMM_WORLD, &total_proc); //The total number of processes running in parallel

MPI_Comm_rank (MPI_COMM_WORLD, &rank); //The rank of the current process

Here we see a call to MPI_Init() the very first MPI function that must be call before any other. A variable MPI_Status is added to be used later for the receiving function. The call to MPI_Comm_size and MPI_Comm_rank will retrieve the total number of processes involved in the calculation, a number that could change at runtime. Each individual process receives a number called rank and we are storing the integer in the variable with that name.

In MPI we can avoid allocating big arrays full size in memory, but just allocating the portion of the array that will be used on each rank we can decrease the overall memory usage. A poorly written code will allocate entire arrays and use just a portion of the values. Here we are just allocating the amount of data actually needed.

n_per_proc = VECTOR_SIZE/total_proc;

if(VECTOR_SIZE%total_proc != 0) n_per_proc+=1; // to divide data evenly by the number of processors

x = (double *) malloc(sizeof(double)*n_per_proc);

ap = (double *) malloc(sizeof(double)*n_per_proc);

bp = (double *) malloc(sizeof(double)*n_per_proc);

cp = (double *) malloc(sizeof(double)*n_per_proc);

buf = (double *) malloc(sizeof(double)*n_per_proc);

for(i=0;i<n_per_proc;i++){

//printf("i=%d\n",i);

x[i]=M_PI*(rank*n_per_proc+i)/VECTOR_SIZE;

ap[i]=cos(x[i])*cos(x[i]);

bp[i]=sin(x[i])*sin(x[i]);

cp[i]=alpha*ap[i]-bp[i];

}

The arrays, ap, bp, cp and x are smaller with size n_per_proc instead of VECTOR_SIZE. Notice that x must be initialized correctly with

x[i]=M_PI*(rank*n_per_proc+i)/VECTOR_SIZE;

Each rank is just allocating and initializing the portion of data that it will manage.

The last chunk of source:

if (rank == MASTER){

for(i=0;i<total_proc;i++){

if(i==MASTER){

printf("Skip\n");

}

else{

printf("Receiving from %d...\n",i);

MPI_Recv(buf, n_per_proc, MPI_DOUBLE, i, 0, MPI_COMM_WORLD, &status);

for(j=0;j<n_per_proc;j++) printf("rank=%d i=%d x=%f c=%f\n",i,j,M_PI*(i*n_per_proc+j)/VECTOR_SIZE,buf[j]);

}

}

}

else{

MPI_Bsend(cp, n_per_proc, MPI_DOUBLE, 0, 0, MPI_COMM_WORLD);

}

The MASTER rank (usually 0) will receive the data from the other ranks, and it could be used to assemble the complete array or simply to continue the execution based on the data processed by all the ranks. In this simple example, we are just printing the data. The important lines here are:

MPI_Bsend(cp, n_per_proc, MPI_DOUBLE, 0, 0, MPI_COMM_WORLD);

and

MPI_Recv(buf, n_per_proc, MPI_DOUBLE, i, 0, MPI_COMM_WORLD, &status);

They send and receive the arrays in the first argument, in our case each array contains n_per_proc numbers of MPI_DOUBLE kind, (double in C), from the rank i with tag 0, ranks that belong to MPI_COMM_WORLD the MPI world initialized.

The final MPI call of any program is:

MPI_Finalize();

//Terminate MPI Environment

return 0;

The calls MPI_Init() and MPI_Finalize() are the first and last MPI functions called on any program using MPI.

OpenCL: A model for heterogeneous parallel computing

OpenCL is a framework for writing programs that execute across heterogeneous platforms consisting of CPUs, GPUs, digital signal processors, field-programmable gate arrays, and other processors or hardware accelerators. OpenCL specifies programming languages for programming these devices and application programming interfaces to control the platform and execute programs on the computing devices.

Our program written in OpenCL looks like this:

#include <stdio.h>

#include <stdlib.h>

#include <math.h>

#ifdef __APPLE__

#include <OpenCL/cl.h>

#else

#include <CL/cl.h>

#endif

#define VECTOR_SIZE 1024

//OpenCL kernel which is run for every work item created.

const char *daxpy_kernel =

"__kernel \n"

"void daxpy_kernel(double alpha, \n"

" __global double *A, \n"

" __global double *B, \n"

" __global double *C) \n"

"{ \n"

" //Get the index of the work-item \n"

" int index = get_global_id(0); \n"

" C[index] = alpha* A[index] - B[index]; \n"

"} \n";

int main(void) {

int i;

// Allocate space for vectors A, B and C

double alpha = 1.0;

double *X = (double*)malloc(sizeof(double)*VECTOR_SIZE);

double *A = (double*)malloc(sizeof(double)*VECTOR_SIZE);

double *B = (double*)malloc(sizeof(double)*VECTOR_SIZE);

double *C = (double*)malloc(sizeof(double)*VECTOR_SIZE);

for(i = 0; i < VECTOR_SIZE; i++)

{

X[i] = M_PI*(double)(i+1)/VECTOR_SIZE;

A[i] = cos(X[i])*cos(X[i]);

B[i] = sin(X[i])*sin(X[i]);

C[i] = 0;

}

// Get platform and device information

cl_platform_id * platforms = NULL;

cl_uint num_platforms;

//Set up the Platform

cl_int clStatus = clGetPlatformIDs(0, NULL, &num_platforms);

platforms = (cl_platform_id *)

malloc(sizeof(cl_platform_id)*num_platforms);

clStatus = clGetPlatformIDs(num_platforms, platforms, NULL);

//Get the devices list and choose the device you want to run on

cl_device_id *device_list = NULL;

cl_uint num_devices;

clStatus = clGetDeviceIDs( platforms[0], CL_DEVICE_TYPE_GPU, 0,NULL, &num_devices);

device_list = (cl_device_id *)

malloc(sizeof(cl_device_id)*num_devices);

clStatus = clGetDeviceIDs( platforms[0],CL_DEVICE_TYPE_GPU, num_devices, device_list, NULL);

// Create one OpenCL context for each device in the platform

cl_context context;

context = clCreateContext( NULL, num_devices, device_list, NULL, NULL, &clStatus);

// Create a command queue

cl_command_queue command_queue = clCreateCommandQueue(context, device_list[0], 0, &clStatus);

// Create memory buffers on the device for each vector

cl_mem A_clmem = clCreateBuffer(context, CL_MEM_READ_ONLY,VECTOR_SIZE * sizeof(double), NULL, &clStatus);

cl_mem B_clmem = clCreateBuffer(context, CL_MEM_READ_ONLY,VECTOR_SIZE * sizeof(double), NULL, &clStatus);

cl_mem C_clmem = clCreateBuffer(context, CL_MEM_WRITE_ONLY,VECTOR_SIZE * sizeof(double), NULL, &clStatus);

// Copy the Buffer A and B to the device

clStatus = clEnqueueWriteBuffer(command_queue, A_clmem, CL_TRUE, 0, VECTOR_SIZE * sizeof(double), A, 0, NULL, NULL);

clStatus = clEnqueueWriteBuffer(command_queue, B_clmem, CL_TRUE, 0, VECTOR_SIZE * sizeof(double), B, 0, NULL, NULL);

// Create a program from the kernel source

cl_program program = clCreateProgramWithSource(context, 1,(const char **)&daxpy_kernel, NULL, &clStatus);

// Build the program

clStatus = clBuildProgram(program, 1, device_list, NULL, NULL, NULL);

// Create the OpenCL kernel

cl_kernel kernel = clCreateKernel(program, "daxpy_kernel", &clStatus);

// Set the arguments of the kernel

clStatus = clSetKernelArg(kernel, 0, sizeof(double), (void *)&alpha);

clStatus = clSetKernelArg(kernel, 1, sizeof(cl_mem), (void *)&A_clmem);

clStatus = clSetKernelArg(kernel, 2, sizeof(cl_mem), (void *)&B_clmem);

clStatus = clSetKernelArg(kernel, 3, sizeof(cl_mem), (void *)&C_clmem);

// Execute the OpenCL kernel on the list

size_t global_size = VECTOR_SIZE; // Process the entire lists

size_t local_size = 64; // Process one item at a time

clStatus = clEnqueueNDRangeKernel(command_queue, kernel, 1, NULL, &global_size, &local_size, 0, NULL, NULL);

// Read the cl memory C_clmem on device to the host variable C

clStatus = clEnqueueReadBuffer(command_queue, C_clmem, CL_TRUE, 0, VECTOR_SIZE * sizeof(double), C, 0, NULL, NULL);

// Clean up and wait for all the comands to complete.

clStatus = clFlush(command_queue);

clStatus = clFinish(command_queue);

// Display the result to the screen

for(i = 0; i < VECTOR_SIZE; i++)

printf("%f * %f - %f = %f\n", alpha, A[i], B[i], C[i]);

// Finally release all OpenCL allocated objects and host buffers.

clStatus = clReleaseKernel(kernel);

clStatus = clReleaseProgram(program);

clStatus = clReleaseMemObject(A_clmem);

clStatus = clReleaseMemObject(B_clmem);

clStatus = clReleaseMemObject(C_clmem);

clStatus = clReleaseCommandQueue(command_queue);

clStatus = clReleaseContext(context);

free(A);

free(B);

free(C);

free(platforms);

free(device_list);

return 0;

}

There are many new lines here, most of it boilerplate code, so we will digest the most relevant chunks.

#ifdef __APPLE__

#include <OpenCL/cl.h>

#else

#include <CL/cl.h>

#endif

These lines are needed for reading the header for OpenCL, the location varies between MacOS and Linux, so preprocessor conditionals are used.

//OpenCL kernel which is run for every work item created.

const char *daxpy_kernel =

"__kernel \n"

"void daxpy_kernel(double alpha, \n"

" __global double *A, \n"

" __global double *B, \n"

" __global double *C) \n"

"{ \n"

" //Get the index of the work-item \n"

" int index = get_global_id(0); \n"

" C[index] = alpha* A[index] - B[index]; \n"

"} \n";

Central to OpenCL is the idea of kernel function, the portion of code that will be offloaded to the GPU or any other accelerator. For academic purposes it is here written as a constant, but it can be a separate file for the kernel. In this function we are just doing the difference alpha*A[i]-B[i]

// Get platform and device information

cl_platform_id * platforms = NULL;

cl_uint num_platforms;

//Set up the Platform

cl_int clStatus = clGetPlatformIDs(0, NULL, &num_platforms);

platforms = (cl_platform_id *)

malloc(sizeof(cl_platform_id)*num_platforms);

clStatus = clGetPlatformIDs(num_platforms, platforms, NULL);

//Get the devices list and choose the device you want to run on

cl_device_id *device_list = NULL;

cl_uint num_devices;

clStatus = clGetDeviceIDs( platforms[0], CL_DEVICE_TYPE_GPU, 0,NULL, &num_devices);

device_list = (cl_device_id *)

malloc(sizeof(cl_device_id)*num_devices);

clStatus = clGetDeviceIDs( platforms[0],CL_DEVICE_TYPE_GPU, num_devices, device_list, NULL);

// Create one OpenCL context for each device in the platform

cl_context context;

context = clCreateContext( NULL, num_devices, device_list, NULL, NULL, &clStatus);

// Create a command queue

cl_command_queue command_queue = clCreateCommandQueue(context, device_list[0], 0, &clStatus);

// Create memory buffers on the device for each vector

cl_mem A_clmem = clCreateBuffer(context, CL_MEM_READ_ONLY,VECTOR_SIZE * sizeof(double), NULL, &clStatus);

cl_mem B_clmem = clCreateBuffer(context, CL_MEM_READ_ONLY,VECTOR_SIZE * sizeof(double), NULL, &clStatus);

cl_mem C_clmem = clCreateBuffer(context, CL_MEM_WRITE_ONLY,VECTOR_SIZE * sizeof(double), NULL, &clStatus);

Most of this is just boilerplate code, it identifies the device, create an OpenCL context and allocate the memory on the device.

// Copy the Buffer A and B to the device

clStatus = clEnqueueWriteBuffer(command_queue, A_clmem, CL_TRUE, 0, VECTOR_SIZE * sizeof(double), A, 0, NULL, NULL);

clStatus = clEnqueueWriteBuffer(command_queue, B_clmem, CL_TRUE, 0, VECTOR_SIZE * sizeof(double), B, 0, NULL, NULL);

// Create a program from the kernel source

cl_program program = clCreateProgramWithSource(context, 1,(const char **)&daxpy_kernel, NULL, &clStatus);

// Build the program

clStatus = clBuildProgram(program, 1, device_list, NULL, NULL, NULL);

// Create the OpenCL kernel

cl_kernel kernel = clCreateKernel(program, "daxpy_kernel", &clStatus);

// Set the arguments of the kernel

clStatus = clSetKernelArg(kernel, 0, sizeof(double), (void *)&alpha);

clStatus = clSetKernelArg(kernel, 1, sizeof(cl_mem), (void *)&A_clmem);

clStatus = clSetKernelArg(kernel, 2, sizeof(cl_mem), (void *)&B_clmem);

clStatus = clSetKernelArg(kernel, 3, sizeof(cl_mem), (void *)&C_clmem);

// Execute the OpenCL kernel on the list

size_t global_size = VECTOR_SIZE; // Process the entire lists

size_t local_size = 64; // Process one item at a time

clStatus = clEnqueueNDRangeKernel(command_queue, kernel, 1, NULL, &global_size, &local_size, 0, NULL, NULL);

This section of the code is will be very similar when we explore CUDA, the main language in the next lectures. Here we are copying data from the host to the device, creating the kernel program and preparing the execution on the device.

// Read the cl memory C_clmem on device to the host variable C

clStatus = clEnqueueReadBuffer(command_queue, C_clmem, CL_TRUE, 0, VECTOR_SIZE * sizeof(double), C, 0, NULL, NULL);

// Clean up and wait for all the comands to complete.

clStatus = clFlush(command_queue);

clStatus = clFinish(command_queue);

// Display the result to the screen

for(i = 0; i < VECTOR_SIZE; i++)

printf("%f * %f - %f = %f\n", alpha, A[i], B[i], C[i]);

// Finally release all OpenCL allocated objects and host buffers.

clStatus = clReleaseKernel(kernel);

clStatus = clReleaseProgram(program);

clStatus = clReleaseMemObject(A_clmem);

clStatus = clReleaseMemObject(B_clmem);

clStatus = clReleaseMemObject(C_clmem);

clStatus = clReleaseCommandQueue(command_queue);

clStatus = clReleaseContext(context);

free(A);

free(B);

free(C);

free(platforms);

free(device_list);

return 0;

}

The final section cleans the memory from the device, print the values of the arrays as we did on the serial code and free the memory on the host.

OpenACC: Model for heterogeneous parallel programming

OpenACC (for open accelerators) is a programming standard for parallel computing developed by Cray, CAPS, Nvidia and PGI. The standard is designed to simplify the parallel programming of heterogeneous CPU/GPU systems.

OpenACC is similar to OpenMP. In OpenMP, the programmer can annotate C, C++, and Fortran source code to identify the areas that should be accelerated using compiler directives and additional functions. Like OpenMP 4.0 and newer, OpenACC can target both the CPU and GPU architectures and launch computational code on them.

#include <stdio.h>

#include <stdlib.h>

#include <math.h>

#define VECTOR_SIZE 100

int main( int argc, char* argv[] )

{

double alpha=1.0;

double *restrict X;

// Input vectors

double *restrict A;

double *restrict B;

// Output vector

double *restrict C;

// Size, in bytes, of each vector

size_t bytes = VECTOR_SIZE*sizeof(double);

// Allocate memory for each vector

X = (double*)malloc(bytes);

A = (double*)malloc(bytes);

B = (double*)malloc(bytes);

C = (double*)malloc(bytes);

// Initialize content of input vectors, vector A[i] = cos(i)^2 vector B[i] = sin(i)^2

int i;

for(i=0; i<VECTOR_SIZE; i++) {

X[i]=M_PI*(double)(i+1)/VECTOR_SIZE;

A[i]=cos(X[i])*cos(X[i]);

B[i]=sin(X[i])*sin(X[i]);

}

// sum component wise and save result into vector c

#pragma acc kernels copyin(A[0:VECTOR_SIZE],B[0:VECTOR_SIZE]), copyout(C[0:VECTOR_SIZE])

for(i=0; i<VECTOR_SIZE; i++) {

C[i] = alpha*A[i]-B[i];

}

for(i=0; i<VECTOR_SIZE; i++) printf("X=%9.5f C=%9.5f\n",X[i],C[i]);

// Release memory

free(A);

free(B);

free(C);

return 0;

}

OpenACC works very similar to OpenMP, you add #pragma lines that are comments from the point of view of the C language but interpreted by the compiler if support for OpenACC is granted on the compiler.

On this chunk:

// sum component wise and save result into vector c

#pragma acc kernels copyin(A[0:VECTOR_SIZE],B[0:VECTOR_SIZE]), copyout(C[0:VECTOR_SIZE])

for(i=0; i<VECTOR_SIZE; i++) {

C[i] = alpha*A[i]-B[i];

}

How OpenACC works is better understood with a more deep understanding of CUDA. The #pragma is parallelizing the for loop copying the A and B arrays to the memory on the GPU and returning the C array back. The main difference between OpenMP and OpenACC is for variables being able to operate on accelerators like GPUs those arrays and variables must be copied to the device and the results copied back to host.

CUDA: Parallel Computing on NVIDIA GPUs

CUDA (Compute Unified Device Architecture) is a parallel computing platform and application programming interface (API) model created by NVIDIA to work on its GPUs. The purpose of CUDA is to leverage general-purpose computing on CUDA-enabled graphics processing unit (GPU) sometimes termed GPGPU (General-Purpose computing on Graphics Processing Units).

The CUDA platform is a software layer that gives direct access to the GPU’s virtual instruction set and parallel computational elements, for the execution of compute kernels. CUDA works as a superset of the C language and as such it cannot be compiled on a normal C language compiler. CUDA provides what is called the CUDA Toolkit a set of tools to compile and run programs written in CUDA.

To access the CUDA compilers on Thorny you need to load the module:

$> module load lang/nvidia/nvhpc/23.3

To check that the compiler is available execute:

$> nvcc --version

You should get something like:

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2023 NVIDIA Corporation

Built on Fri_Jan__6_16:45:21_PST_2023

Cuda compilation tools, release 12.0, V12.0.140

Build cuda_12.0.r12.0/compiler.32267302_0

#include <stdio.h>

// Size of array

#define VECTOR_SIZE 100

// Kernel

__global__ void add_vectors(double alpha, double *a, double *b, double *c)

{

int id = blockDim.x * blockIdx.x + threadIdx.x;

if(id < VECTOR_SIZE) c[id] = a[id] - b[id];

}

// Main program

int main( int argc, char* argv[] )

{

double alpha=1.0;

// Number of bytes to allocate for N doubles

size_t bytes = VECTOR_SIZE*sizeof(double);

// Allocate memory for arrays A, B, and C on host

double *X = (double*)malloc(bytes);

double *A = (double*)malloc(bytes);

double *B = (double*)malloc(bytes);

double *C = (double*)malloc(bytes);

// Allocate memory for arrays d_A, d_B, and d_C on device

double *d_A, *d_B, *d_C;

cudaMalloc(&d_A, bytes);

cudaMalloc(&d_B, bytes);

cudaMalloc(&d_C, bytes);

// Fill host arrays A and B

for(int i=0; i<VECTOR_SIZE; i++)

{

X[i]=M_PI*(double)(i+1)/VECTOR_SIZE;

A[i]=cos(X[i])*cos(X[i]);

B[i]=sin(X[i])*sin(X[i]);

}

// Copy data from host arrays A and B to device arrays d_A and d_B

cudaMemcpy(d_A, A, bytes, cudaMemcpyHostToDevice);

cudaMemcpy(d_B, B, bytes, cudaMemcpyHostToDevice);

// Set execution configuration parameters

// thr_per_blk: number of CUDA threads per grid block

// blk_in_grid: number of blocks in grid

int thr_per_blk = 32;

int blk_in_grid = ceil( double(VECTOR_SIZE) / thr_per_blk );

// Launch kernel

add_vectors<<< blk_in_grid, thr_per_blk >>>(alpha, d_A, d_B, d_C);

// Copy data from device array d_C to host array C

cudaMemcpy(C, d_C, bytes, cudaMemcpyDeviceToHost);

// Verify results

for(int i=0; i<VECTOR_SIZE; i++)

{

if(C[i] != alpha*A[i]-B[i])

{

printf("\nError: value of C[%d] = %f instead of %f\n\n", i, C[i], alpha*A[i]-B[i]);

//exit(-1);

}

else

{

printf("X=%f C=%f\n",X[i],C[i]);

}

}

// Free CPU memory

free(A);

free(B);

free(C);

// Free GPU memory

cudaFree(d_A);

cudaFree(d_B);

cudaFree(d_C);

printf("\n---------------------------\n");

printf("__SUCCESS__\n");

printf("---------------------------\n");

printf("VECTOR SIZE = %d\n", VECTOR_SIZE);

printf("Threads Per Block = %d\n", thr_per_blk);

printf("Blocks In Grid = %d\n", blk_in_grid);

printf("---------------------------\n\n");

return 0;

}

There are two main sections in this code that depart from the C language:

// Kernel

__global__ void add_vectors(double alpha, double *a, double *b, double *c)

{

int id = blockDim.x * blockIdx.x + threadIdx.x;

if(id < VECTOR_SIZE) c[id] = a[id] - b[id];

}

The function add_vectors will operate on the GPU using memory allocated on the device. The integer id will be associated to the exact index in the array based on the indices of the thread and block the main components in the thread hierarchy of execution in CUDA.

// Allocate memory for arrays d_A, d_B, and d_C on device

double *d_A, *d_B, *d_C;

cudaMalloc(&d_A, bytes);

cudaMalloc(&d_B, bytes);

cudaMalloc(&d_C, bytes);

In this section, the memory on the device is allocated. Those allocations are different from the arrays on the host and next lines will transfer data from the host to the device:

// Copy data from host arrays A and B to device arrays d_A and d_B

cudaMemcpy(d_A, A, bytes, cudaMemcpyHostToDevice);

cudaMemcpy(d_B, B, bytes, cudaMemcpyHostToDevice);

The operation of transferring data from the host to the device is a blocking operation. The execution will not return to the CPU until the transfer is completed.

// Set execution configuration parameters

// thr_per_blk: number of CUDA threads per grid block

// blk_in_grid: number of blocks in grid

int thr_per_blk = 32;

int blk_in_grid = ceil( double(VECTOR_SIZE) / thr_per_blk );

// Launch kernel

add_vectors<<< blk_in_grid, thr_per_blk >>>(alpha, d_A, d_B, d_C);

// Copy data from device array d_C to host array C

cudaMemcpy(C, d_C, bytes, cudaMemcpyDeviceToHost);

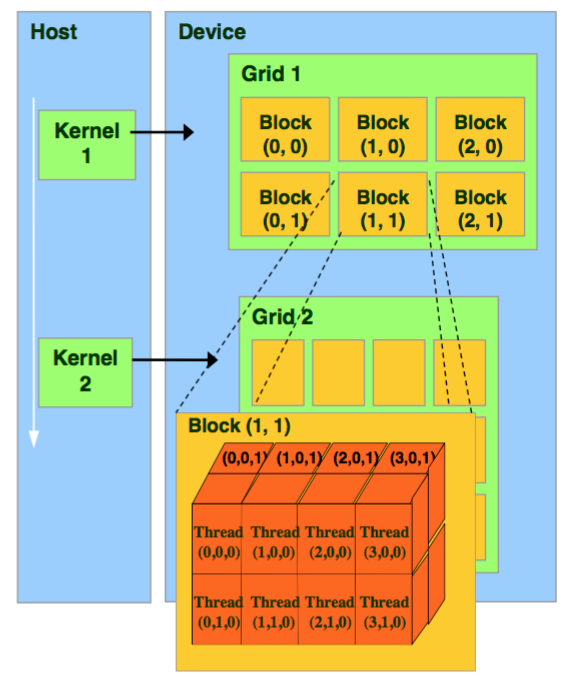

In this section, we decide two important numbers in CUDA execution that affect performance. The number of threads per block and the number of blocks in the grid. CUDA organizes parallel executions in threads those threads are grouped in a 3D array called blocks and blocks are arranged in a 3D array called a grid. For the simple case above we have just unidimensional blocks and unidimensional grids.

The call to add_vectors is a non-blocking operation. Execution returns to the host as soon as the function is called. The device will work on that function independently from the host.

Only the function cudaMemcpy() will impose a barrier, waiting for the device to complete execution before copying the data back from the device to the host.

Key Points

The serial code only allows one core to follow the instructions in the code

OpenMP uses several cores on the same machine as all the cores are able to address the same memory

OpenACC is capable to use both multiple cores and accelerators such as GPUs

MPI is used for distributed parallel computing. Being able to use cores on different compute nodes.

OpenCL is an effort for a platform-independent model for hybrid computing

CUDA is the model used by NVIDIA for accessing compute power from NVIDIA GPUs

Introduction to CUDA

Overview

Teaching: 30 min

Exercises: 0 minQuestions

What is CUDA and how is it used for computing?

What is the basic programming model used by CUDA?

How are CUDA programs structured?

What is the difference between host memory and device memory in a CUDA program?

Objectives

Learn how CUDA programs are structured to make efficient use of GPUs.

Learn how memory must be taken into consideration when writing CUDA programs.

Introduction to CUDA

In November 2006, NVIDIA introduced CUDA, which originally stood for “Compute Unified Device Architecture”, a general purpose parallel computing platform and programming model that leverages the parallel compute engine in NVIDIA GPUs to solve many complex computational problems in a more efficient way than on a CPU.

The CUDA parallel programming model has three key abstractions at its core:

- a hierarchy of thread groups

- shared memories

- barrier synchronization

There are exposed to the programmer as a minimal set of language extensions.

In parallel programming, granularity means the amount of computation in relation to communication (or transfer) of data. Fine-grained parallelism means individual tasks are relatively small in terms of code size and execution time. The data is transferred among processors frequently in amounts of one or a few memory words. Coarse-grained is the opposite in that data is communicated infrequently, after larger amounts of computation.

The CUDA abstractions provide fine-grained data parallelism and thread parallelism, nested within coarse-grained data parallelism and task parallelism. They guide the programmer to partition the problem into coarse sub-problems that can be solved independently in parallel by blocks of threads, and each sub-problem into finer pieces that can be solved cooperatively in parallel by all threads within the block.

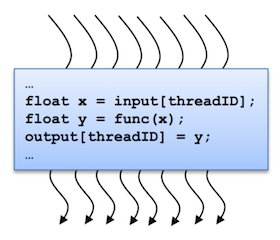

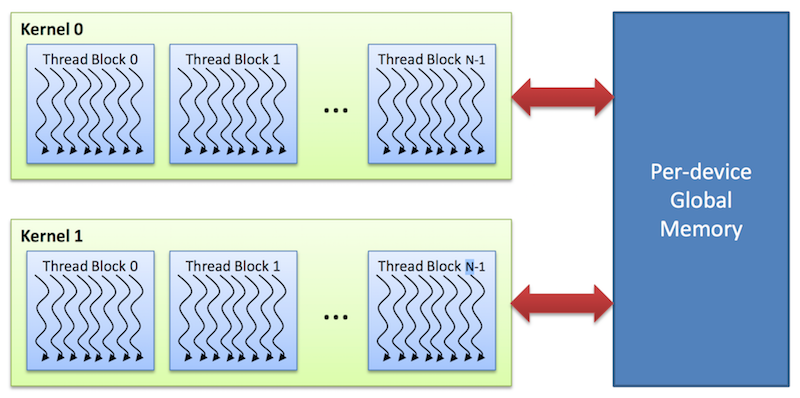

A kernel is executed in parallel by an array of threads:

- All threads run the same code.

- Each thread has an ID that it uses to compute memory addresses and make control decisions.

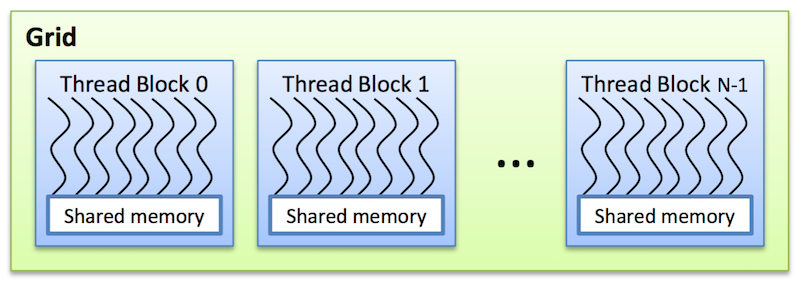

Threads are arranged as a grid of thread blocks:

- Different kernels can have different grid/block configuration

- Threads from the same block have access to a shared memory and their execution can be synchronized

Thread blocks are required to execute independently: It must be possible to execute them in any order, in parallel or in series. This independence r equirement allows thread blocks to be scheduled in any order across any number of cores, enabling programmers to write code that scales with the number of cores. Threads within a block can cooperate by sharing data through some shared memory and by synchronizing their execution to coordinate memory accesses.

The grid of blocks and the thread blocks can be 1, 2, or 3-dimensional.

The CUDA architecture is built around a scalable array of multithreaded Streaming Multiprocessors (SMs) as shown below. Each SM has a set of execution units, a set of registers and a chunk of shared memory.

In an NVIDIA GPU, the basic unit of execution is the warp. A warp is a collection of threads, 32 in current implementations, that are executed simultaneously by an SM. Multiple warps can be executed on an SM at once.

When a CUDA program on the host CPU invokes a kernel grid, the blocks of the grid are enumerated and distributed to SMs with available execution capacity. The threads of a thread block execute concurrently on one SM, and multiple thread blocks can execute concurrently on one SM. As thread blocks terminate, new blocks are launched on the vacated SMs.

The mapping between warps and thread blocks can affect the performance of the kernel. It is usually a good idea to keep the size of a thread block a multiple of 32 in order to avoid this as much as possible.

Thread Identity

The index of a thread and its thread ID relate to each other as follows:

- For a 1-dimensional block, the thread index and thread ID are the same

- For a 2-dimensional block, the thread index (x,y) has thread ID=x+yDx, for block size (Dx,Dy)

- For a 3-dimensional block, the thread index (x,y,x) has thread ID=x+yDx+zDxDy, for block size (Dx,Dy,Dz)

When a kernel is started, the number of blocks per grid and the number of threads per block are fixed (gridDim and blockDim). CUDA makes

four pieces of information available to each thread:

- The thread index (

threadIdx) - The block index (

blockIdx) - The size and shape of a block (

blockDim) - The size and shape of a grid (

gridDim)

Typically, each thread in a kernel will compute one element of an array. There is a common pattern to do this that most CUDA programs use are shown below.

For a 1-dimensional grid:

tx = cuda.threadIdx.x

bx = cuda.blockIdx.x

bw = cuda.blockDim.x

i = tx + bx * bw

array[i] = compute(i)

For a 2-dimensional grid:

tx = cuda.threadIdx.x

ty = cuda.threadIdx.y

bx = cuda.blockIdx.x

by = cuda.blockIdx.y

bw = cuda.blockDim.x

bh = cuda.blockDim.y

x = tx + bx * bw

y = ty + by * bh

array[x, y] = compute(x, y)

Memory Hierarchy

The CPU and GPU have separate memory spaces. This means that data that is processed by the GPU must be moved from the CPU to the GPU before the computation starts, and the results of the computation must be moved back to the CPU once processing has completed.

Global memory

This memory is accessible to all threads as well as the host (CPU).

- Global memory is allocated and deallocated by the host

- Used to initialize the data that the GPU will work on

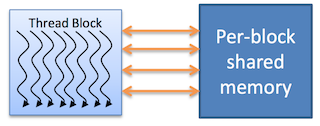

Shared memory

Each thread block has its own shared memory

- Accessible only by threads within the block

- Much faster than local or global memory

- Requires special handling to get maximum performance

- Only exists for the lifetime of the block

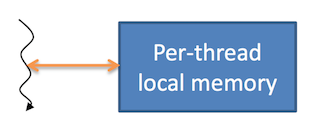

Local memory

Each thread has its own private local memory

- Only exists for the lifetime of the thread

- Generally handled automatically by the compiler

Constant and texture memory

These are read-only memory spaces accessible by all threads.

- Constant memory is used to cache values that are shared by all functional units

- Texture memory is optimized for texturing operations provided by the hardware

CUDA Foundations: Memory allocation, data transfer, kernels and thread management

There are four basic concepts that are always present when programming GPUs with CUDA: Allocating and deallocating memory on the device. Copying data from host memory to device memory and back to host memory, writing functions that operate inside the GPU and managing a multilevel hierarchy of threads capable of describing the operation of thousands of threads working on the GPU.

In this lesson we will discuss those four concepts so you are ready to write basic CUDA programs. Programming GPUs is much more than just those concepts, performance considerations and the ability to properly debug codes are the real challenge when programming GPUs. It is particularly hard to debug well hiding bugs among thousands of concurrent threads, the challenge is significantly harder than with a serial code where a printf here and there will capture the issue. We will not discuss performance and debugging here, just the general structure of a CUDA based code.

Memory allocation

GPU cards are usually come as a hardware card using the PCI express card or NVLink high-speed GPU interconnect bridge. Cards include their own dynamic random memory access memory (DRAM). To operate with GPUs you need to allocate the memory on the device, as the RAM on the node is not directly addressable by the GPU. So one of the first steps in programming GPUs involve the allocation and deallocation of memory on the GPU.

The memory we are referring here in the GPU is called “Global Memory”, it is the equivalent to the RAM on a computer. There are other levels of memory inside a GPU but in most cases we talk about the “Global memory” as “device memory” with those two expressions being used interchangeable for the purposes of this discussion.

Allocating and deallocation of memory on the device is done with cudaMalloc and cudaFree calls as shown here:

float *d_A;

int size=n*sizeof(float);

cudaMalloc((void**)&d_A, size)

...

...

cudaFree(d_A);

It is customary in CUDA programming to clearly identifying which variables are allocated on the device and which ones are allocated on the host. The variable d_A is a pointer, meaning that its contents is a memory location. The variable itself is located in host memory but it will point to a memory location on the device, that is the reason for the prefix d_.

The next line computes the number of bytes that will be allocated on de device for d_A. It is always better to use floats instead of double if the need for the extra precision is not a must. In general on a GPU you get perform at least twice as fast with single precision compared with double and memory in the GPU is a precious asset compared with host memory.

Finally to free memory on the device the function is cudaFree. cudaMalloc and cudaFree look very similar to the traditional Malloc and Free that C developer are used to operate. The similarity is on purpose to facilitate moving into GPU programming with minimal effort for C developers.

Moving data from host to device and back to host

As memory on the device is a separate entity from the memory than the CPU can see, we need functions to move data from the host memory (RAM) into the GPU card “Global memory” (device memory) as well as from device memory back to host.

The function to do that is cudaMemcpy and the final argument of the function defines the direction of the copy:

cudaMemcpy(d_A, A, size, cudaMemcpyHostTodevice)

...

...

cudaMemcpy(d_A, A, size, cudaMemcpyDeviceToHost)

Kernel functions

A kernel function declares the operations that will take place inside the device. The same code on the kernel function will run over every thread span during execution. There are identifiers for the threads that will be used to redirect the computation into different directions at runtime, but the code on each thread is indeed the same.

One example of a kernel function is this:

__global__ simple_kernel(float* A, int n)

{

int i = blockDim.x * blockIdx.x + threadIdx.x;

A[i]=1000*blockIdx.x+threadIdx.x;

}

Example (SAXPY)

#include <stdio.h>

__global__

void saxpy(int n, float a, float *x, float *y)

{

int i = blockIdx.x*blockDim.x + threadIdx.x;

if (i < n) y[i] = a*x[i] + y[i];

}

int main(void)

{

int N = 1<<20;

float *x, *y, *d_x, *d_y;

x = (float*)malloc(N*sizeof(float));

y = (float*)malloc(N*sizeof(float));

cudaMalloc(&d_x, N*sizeof(float));

cudaMalloc(&d_y, N*sizeof(float));

for (int i = 0; i < N; i++) {

x[i] = 1.0f;

y[i] = 2.0f;

}

cudaMemcpy(d_x, x, N*sizeof(float), cudaMemcpyHostToDevice);

cudaMemcpy(d_y, y, N*sizeof(float), cudaMemcpyHostToDevice);

// Perform SAXPY on 1M elements

saxpy<<<(N+255)/256, 256>>>(N, 2.0f, d_x, d_y);

cudaMemcpy(y, d_y, N*sizeof(float), cudaMemcpyDeviceToHost);

float maxError = 0.0f;

for (int i = 0; i < N; i++)

maxError = max(maxError, abs(y[i]-4.0f));

printf("Max error: %f\n", maxError);

cudaFree(d_x);

cudaFree(d_y);

free(x);

free(y);

}

Key Points

CUDA is designed for a specific GPU architecture, namely NVIDIA’s Streaming Multiprocessors.

CUDA has many programming operations that are common to other parallel programming paradigms.

Careful use of data movement is extremely important to obtaining good performance from CUDA programs.

Deep Learning

Overview

Teaching: 30 min

Exercises: 0 minQuestions

What is Deep Learning?

What is a Neural Network?

Why DL is often computed with accelerators (GPUs)

Objectives

Learn the basics of Deep Learning and Neural Networks

Learn how to use GPUs to train neural networks on an HPC cluster

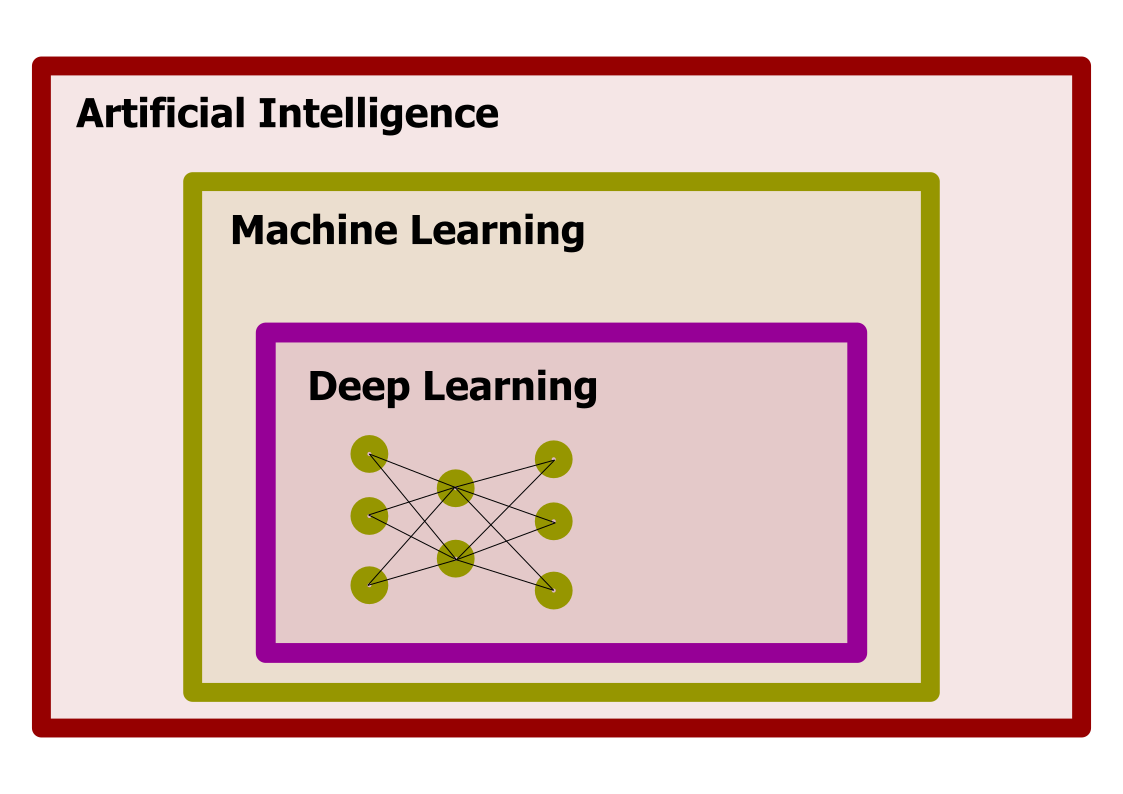

Deep Learning is one of the techniques in Machine Learning with most success in a variety of problems. From Classification to Regression. Its ability to account for complexity is remarkable.

Deep Learning is one of the most active areas in Machine Learning today. However, Neural networks have been around for decades, so the question is why now? As we will learn in this workshop the hype created around Deep Learning in the last decade is due to the convergence of a set of elements that propelled Deep Learning from an obscure topic in Machine Learning to the driving force of many of our activities in daily life. We can summarize those elements as:

-

The availability of large datasets, thanks in part to the internet and big tech companies.

-

Powerful accelerators such as GPUs that are capable of processing data for some tasks (Linear Algebra) at scales outperform CPUs by at least one order of magnitude.

Every time you are using your phone or smart TV, computer, neural networks are working for you. They have become almost both ubiquitous and invisible as electricity became from the beginning of the 20th century. In a way, Machine Learning and Deep Learning are the fuel of some sort of new industrial revolution or at least with the potential to become something like it.

Deep Learning, Machine Learning, and Artificial Intelligence

Deep Learning is part of the area of Machine Learning, a set of techniques to produce models that use examples to build the model, rather than using a hardcoded algorithm. Machine Learning itself is just an area of Artificial Intelligence, an area of computer science dedicated to studying how computers can perform tasks usually considered as intellectual.

Learning from data, a scientific perspective

The idea of learning from data is not foreign to scientist. The objective of science is to create models that explain phenomena and provides predictions that can be confirmed or rejected by experiments or observations.

Scientists create models that not only give us insight about nature but also equations that allow us to make predictions. In most cases, clean equations are simply not possible and we have to use numerical approximations but we try to keep the understanding. Machine Learning is used in cases where mathematical models are known, numerical approximations are not feasible, and we We are satisfied with the answers even if we lost the ability to understand why the parameters of Machine Learning models work the way they do.

In summary, we need 3 conditions for using Machine Learning on a problem:

- Good data

- The existence of patterns on the data

- The lack of a good mathematical model to express the patterns present on the data

Kepler, an example of learning from data

_1971,_MiNr_1649.jpg) |

|

|---|

|

|---|

|

The two shores of Deep Learning

There are two ways to approach Deep Learning. The biological side and the mathematical side.

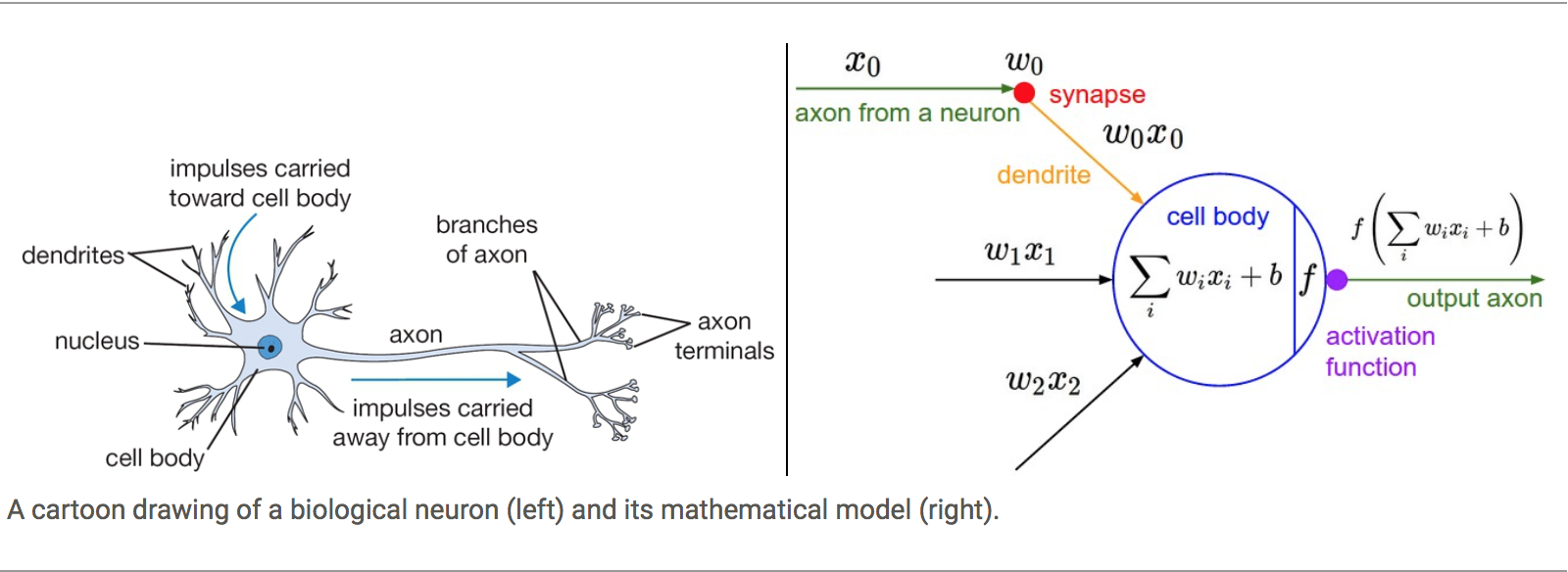

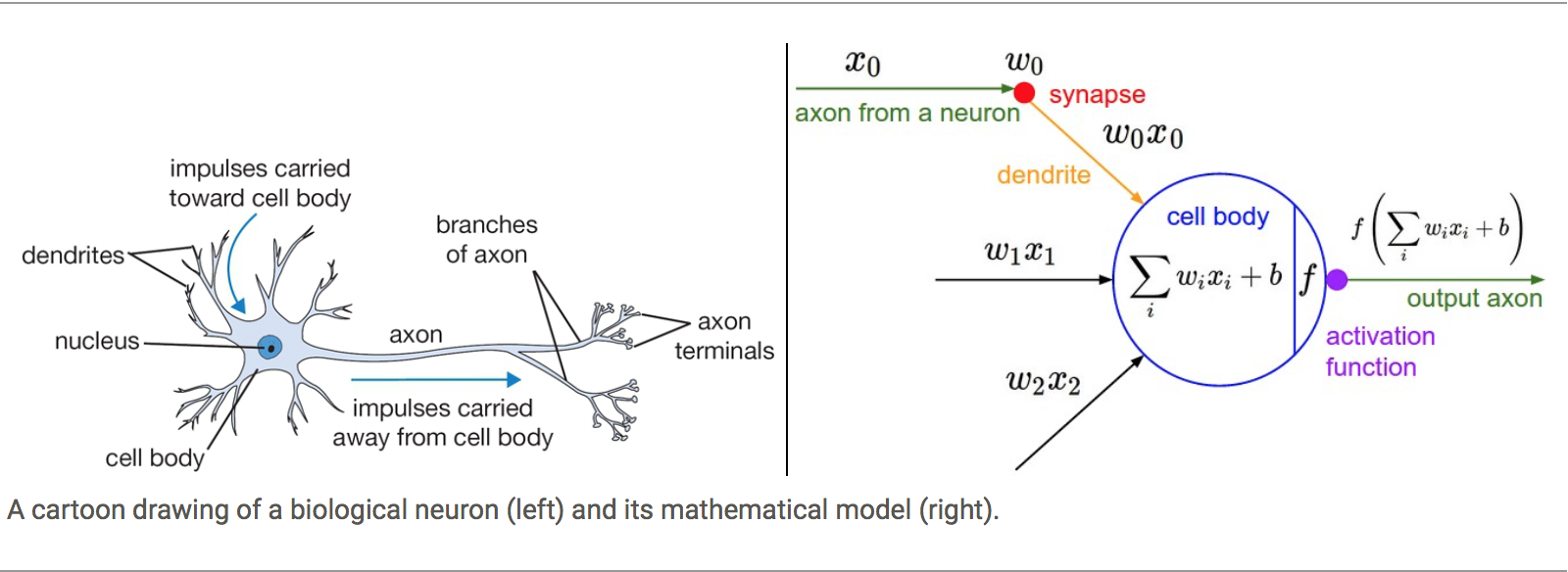

An Artificial Neural Network (ANN) is a computational model that is loosely inspired by the way biological neural networks in the animal brain process information.

From the other side, they can also be considered as a generalization of the Perceptron idea. A mathematical model for producing functions capable of getting close to a target function via an iterative process.

Both shores serve as good analogies if we are careful not to extrapolate the original ideas beyond their regime of validity. Deep Learning is not pretending to be models of the brain and the complexity of Deep Neural networks is far beyond that of what perceptrons were capable of doing.

Let’s explore these two approaches for a moment.

Biological Neural Networks

From one side the idea of simulating synapsis in biological Neural Networks and using the knowledge about activation barriers and multiple connectivities as inspiration to create an Artificial Neural Network. The basic computational unit of the brain is a neuron. Approximately 86 billion neurons can be found in the human nervous system and they are connected with approximately 10¹⁴ — 10¹⁵ synapses

The idea with ANN is that synaptic strengths (the weights w in our mathematical model) are learnable and control the strength of influence and its direction: excitatory (positive weight) or inhibitory (negative weight) of one neuron on another. If the final sum of the different connections is above a certain threshold, the neuron can fire, sending a spike along its axon, which is the output of the network under the provided input.

The Perceptron

The other origin is the idea of Perceptron in Machine Learning.

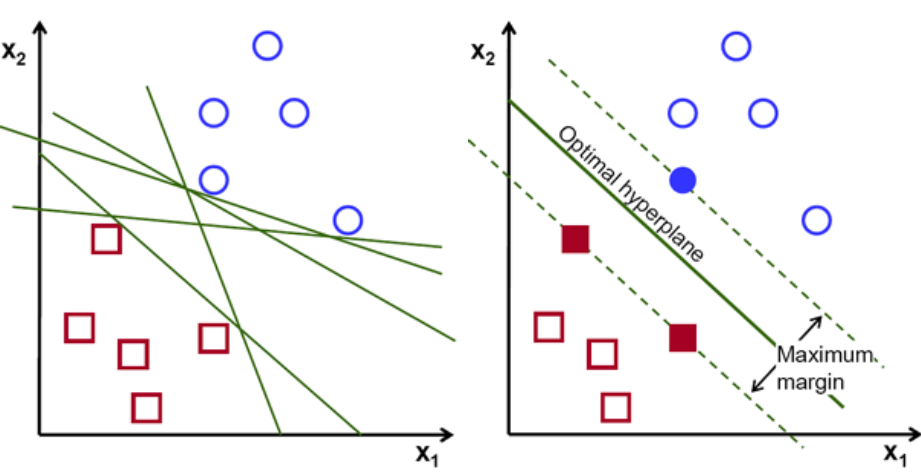

Perceptron is a linear classifier (binary) and used in supervised learning. It helps to classify the given input data. As a binary classifier, it can decide whether or not an input, represented by a vector of numbers, belongs to some specific class.

Complexity of Neural Networks

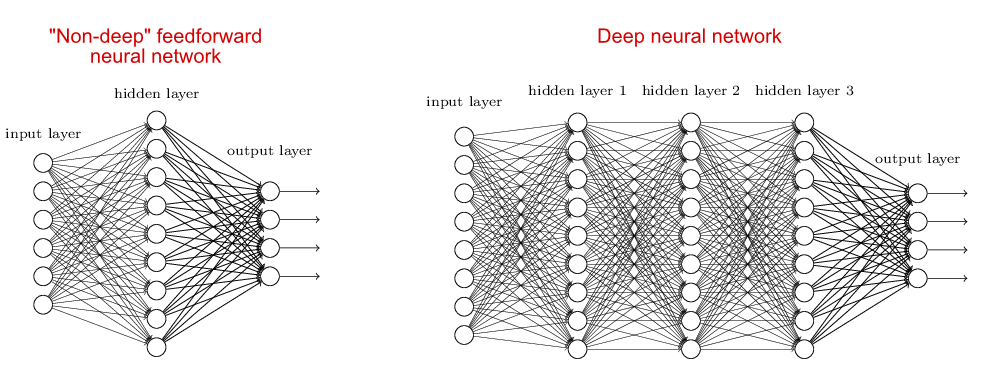

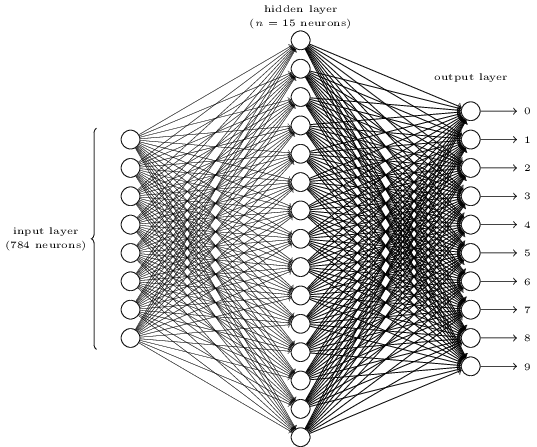

When the neural network has no hidden layer it is just a linear classifier or Perceptron. When hidden layers are added the NN can account for non-linearity, in the case of multiple hidden layers have what is called a Deep Neural Network.

In practice how complex it can be

A Deep Learning model can include:

- Input (With many neurons)

- Layer 1

- …

- …

- Layer N

- Output layer (With many neurons)

For example, the input can be an image with thousands of pixels and 3 colors for each pixel. Hundreds of hidden layers and the output could also be an image. That complexity is responsible for the computational cost of running such networks.

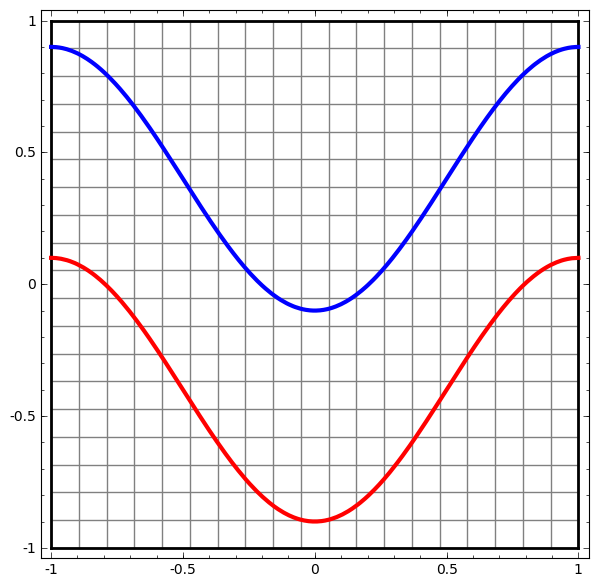

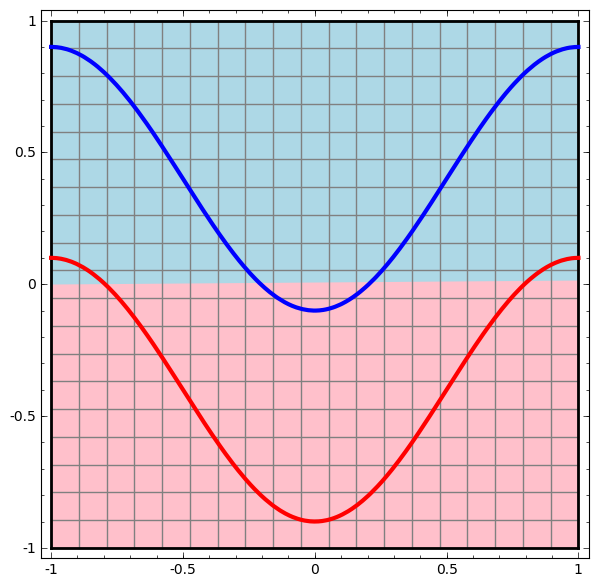

Non-linear transformations with Deep Networks

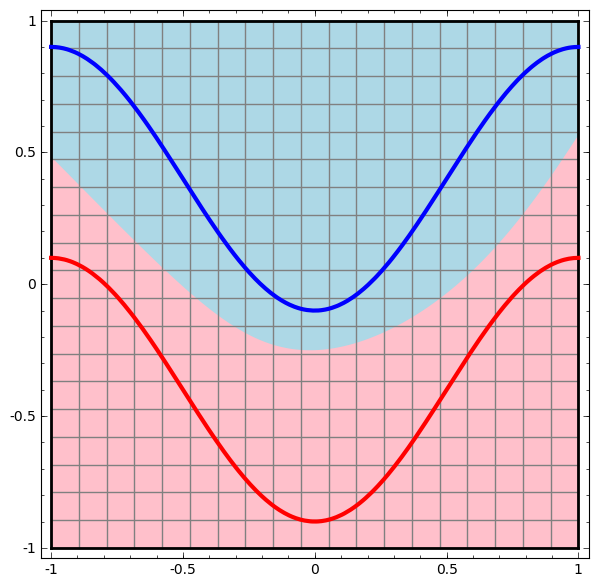

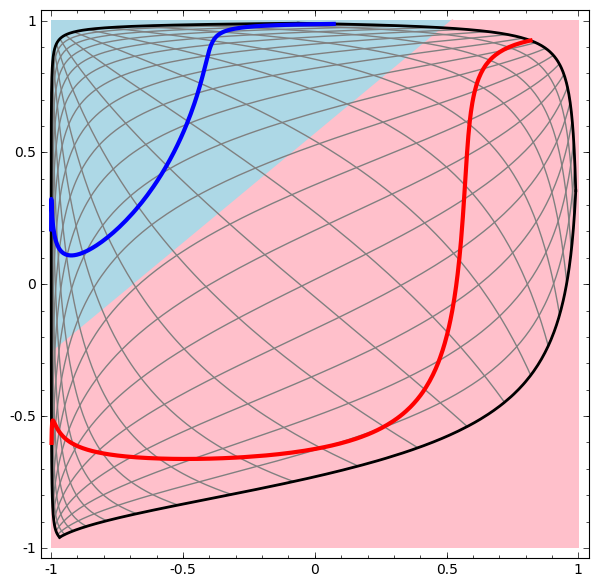

Consider this ilustrative example from this blog: We have two curves, as they are there is no way of creating a straight line that separates both curves. There is however a curve capable of separating the space in two regions where each curve lives on its region. Neural networks approach the problem by transforming the space in a non-linear way, allowing the two curves to be easily separable with a simple line.

|

|

|

|---|---|---|

|

|

Dynamic visualization of the transformations

In its basic form, a neural network consists of the application of affine transformations (scalings, skewings and rotations, and translations) followed by pointwise application of a non-linear function:

|

|---|

|

|

|---|

Basic Neural Network Architecture

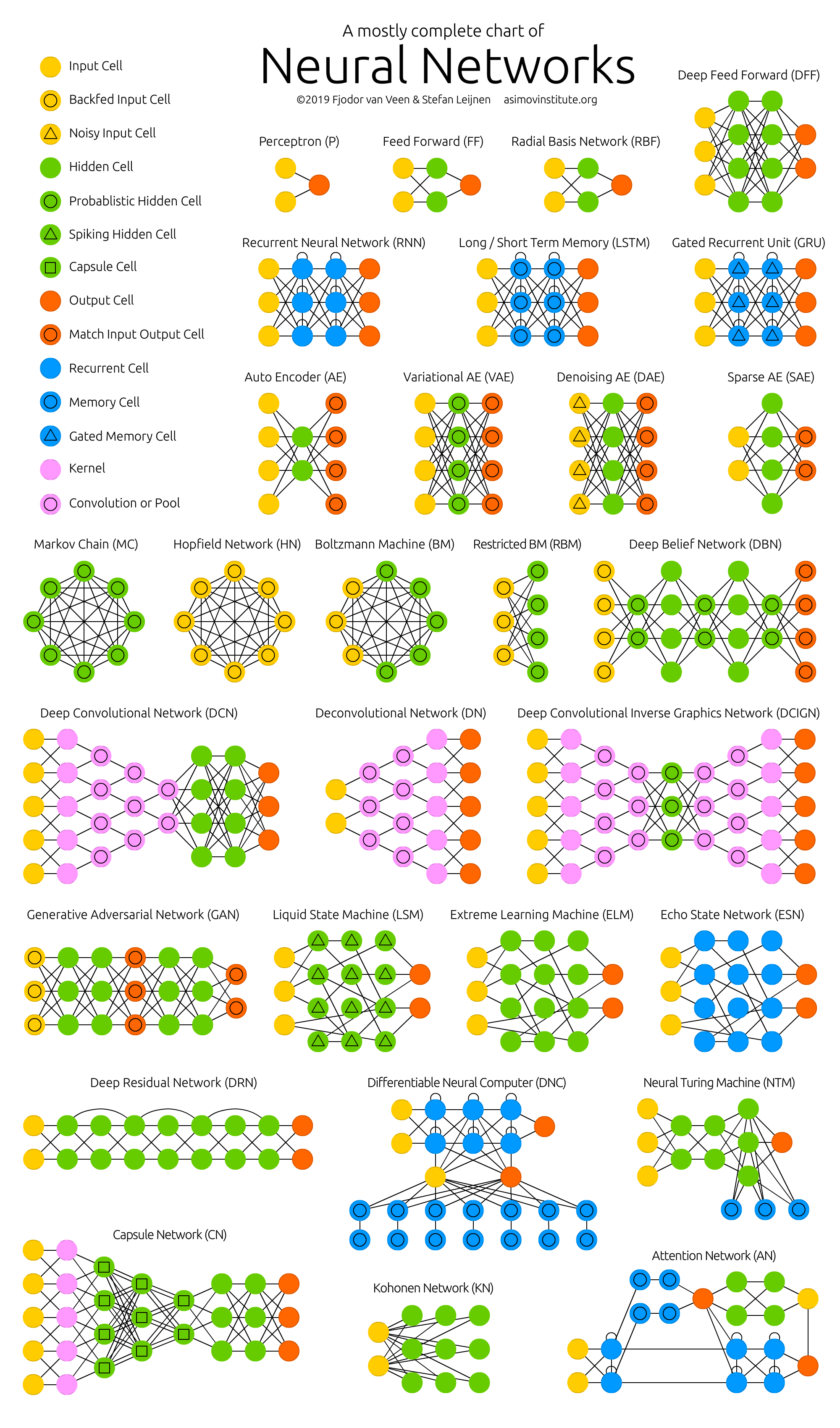

Neural Network Zoo

Since neural networks are one of the more active research fields in machine learning, a large number of modifications have been proposed. In the following figure, a summary of the different node structures is drawn and from that, relations and acronyms are provided such that some of the different networks are related someway. The figure below shows a summary but let me give you a quick overview of a few of them.

1) Feed forward neural networks (FF or FFNN) and perceptrons (P). They feed information from the front to the back (input and output, respectively). Neural networks are often described as having layers, where each layer consists of either input, hidden, or output cells in parallel. A layer alone never has connections and in general two adjacent layers are fully connected (every neuron from one layer to every neuron to another layer). One usually trains FFNNs through back-propagation, giving the network paired datasets of “what goes in” and “what we want to have coming out”. Given that the network has enough hidden neurons, it can theoretically always model the relationship between the input and output. Practically their use is a lot more limited but they are popularly combined with other networks to form new networks.

2) Radial basis functions. This network is simpler than the normal FFNN, as the activation function is a radial function. Each RBFN neuron stores a “prototype”, which is just one of the examples from the training set. When we want to classify a new input, each neuron computes the Euclidean distance between the input and its prototype. Roughly speaking, if the input more closely resembles the class A prototypes than the class B prototypes, it is classified as class A.

3) Recurrent Neural Networks (RNN). These networks are designed to take a series of inputs with no predetermined limit on size. They are networks with loops in them, allowing information to persist.

4) Long/short term memory (LSTM) networks. There is a special kind of RNN where each neuron has a memory cell and three gates: input, output, and forget. The idea of each gate is to allow or stop the flow of information through them.

5) Gated recurrent units (GRU) are a slight variation on LSTMs. They have one less gate and are wired slightly differently: instead of an input, output, and forget gate, they have an update gate.

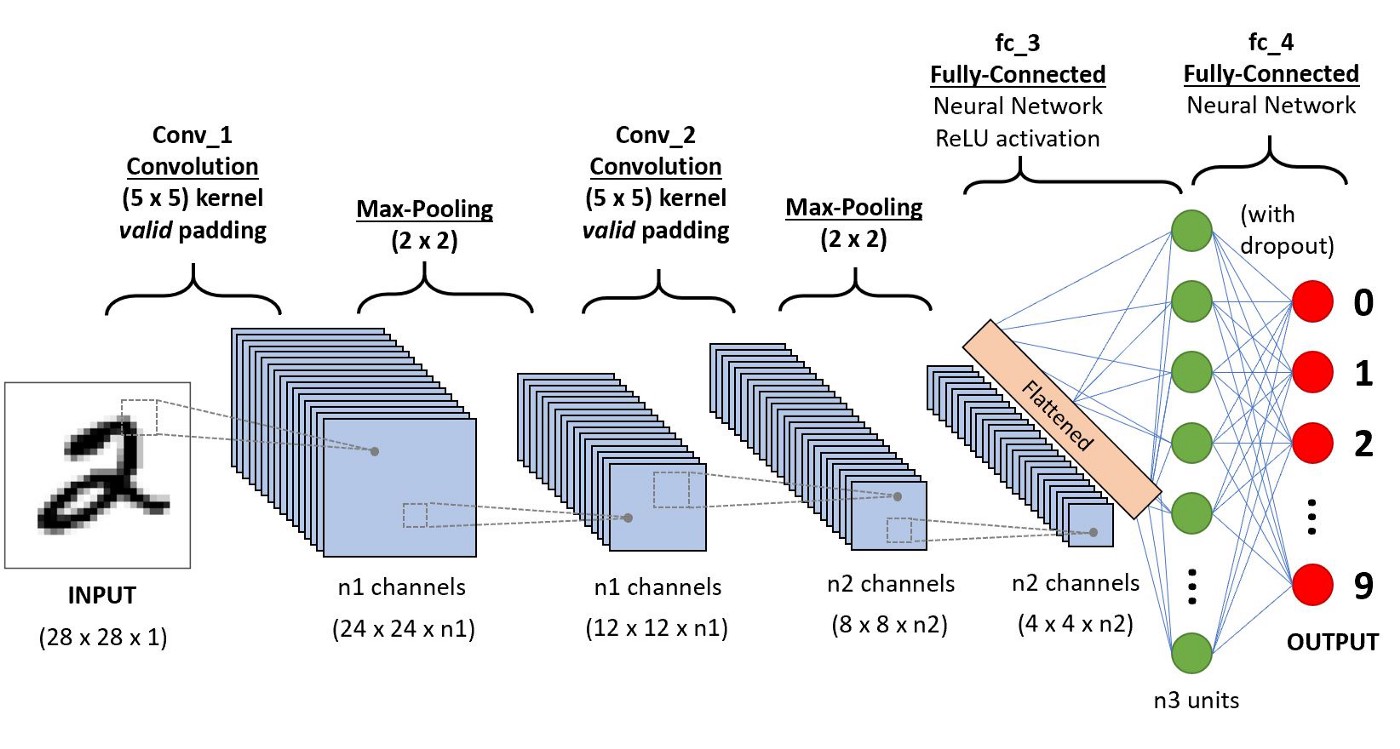

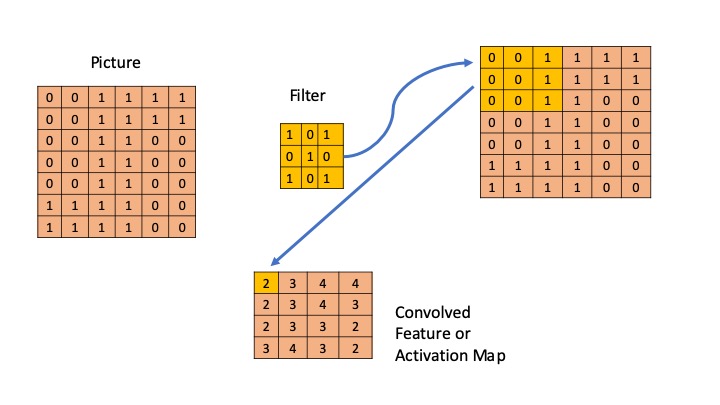

6) Convolutional Neural Networks (ConvNet) is very similar to FFNN, they are made up of neurons that have learnable weights and biases. In a convolutional neural network (CNN, or ConvNet or shift invariant or space invariant) the unit connectivity pattern is inspired by the organization of the visual cortex, Units respond to stimuli in a restricted region of space known as the receptive field. Receptive fields partially overlap, over-covering the entire visual field. The unit response can be approximated mathematically by a convolution operation. They are variations of multilayer perceptrons that use minimal preprocessing. Their wide applications are in image and video recognition, recommender systems, and natural language processing. CNN’s requires large data to train on.

From http://www.asimovinstitute.org/neural-network-zoo

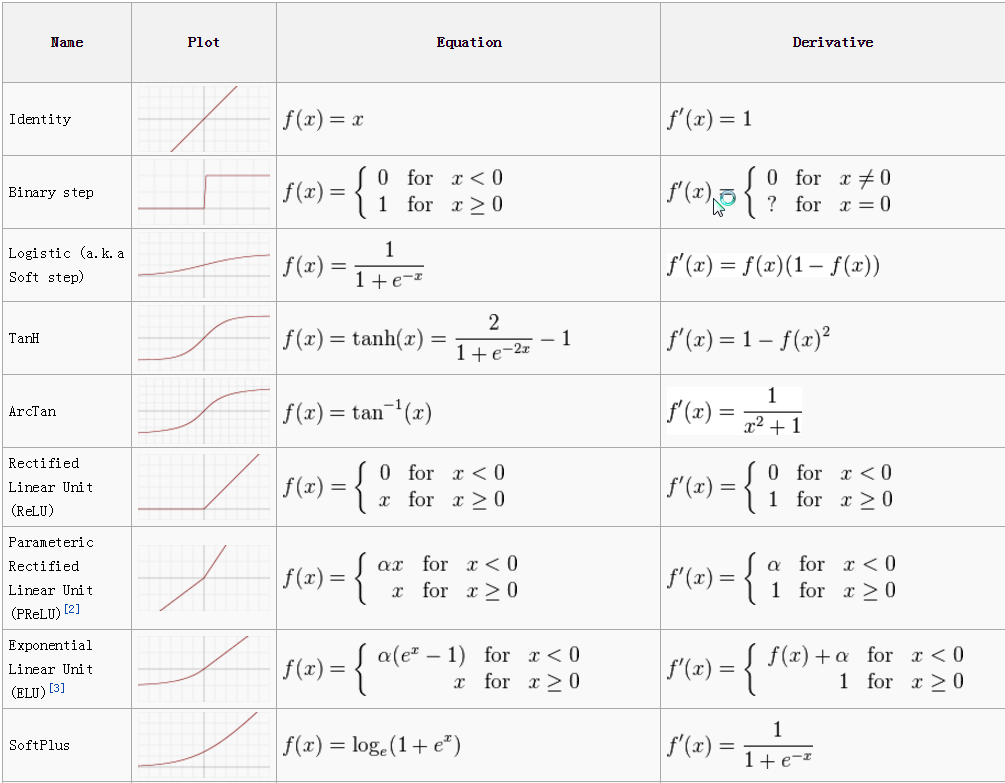

Activation Function

Each internal neuron receives input from several other neurons, computes the aggregate, and propagates the result based on the activation function.

Neurons apply activation functions at these summed inputs.

Activation functions are typically non-linear.

-

The Sigmoid Function produces a value between 0 and 1, so it is intuitive when a probability is desired and was almost standard for many years.

-

The Rectified Linear (ReLU) activation function is zero when the input is negative and is equal to the input when the input is positive. Rectified Linear activation functions are currently the most popular activation function as they are more efficient than the sigmoid or hyperbolic tangent.

-

Sparse activation: In a randomly initialized network, only 50% of hidden units are active.

-

Better gradient propagation: Fewer vanishing gradient problems compared to sigmoidal activation functions that saturate in both directions.

-

Efficient computation: Only comparison, addition, and multiplication.

-

There are Leaky and Noisy variants.

-

-

The Soft Plus shared some of the nice properties of ReLU and still preserves continuity on the derivative.

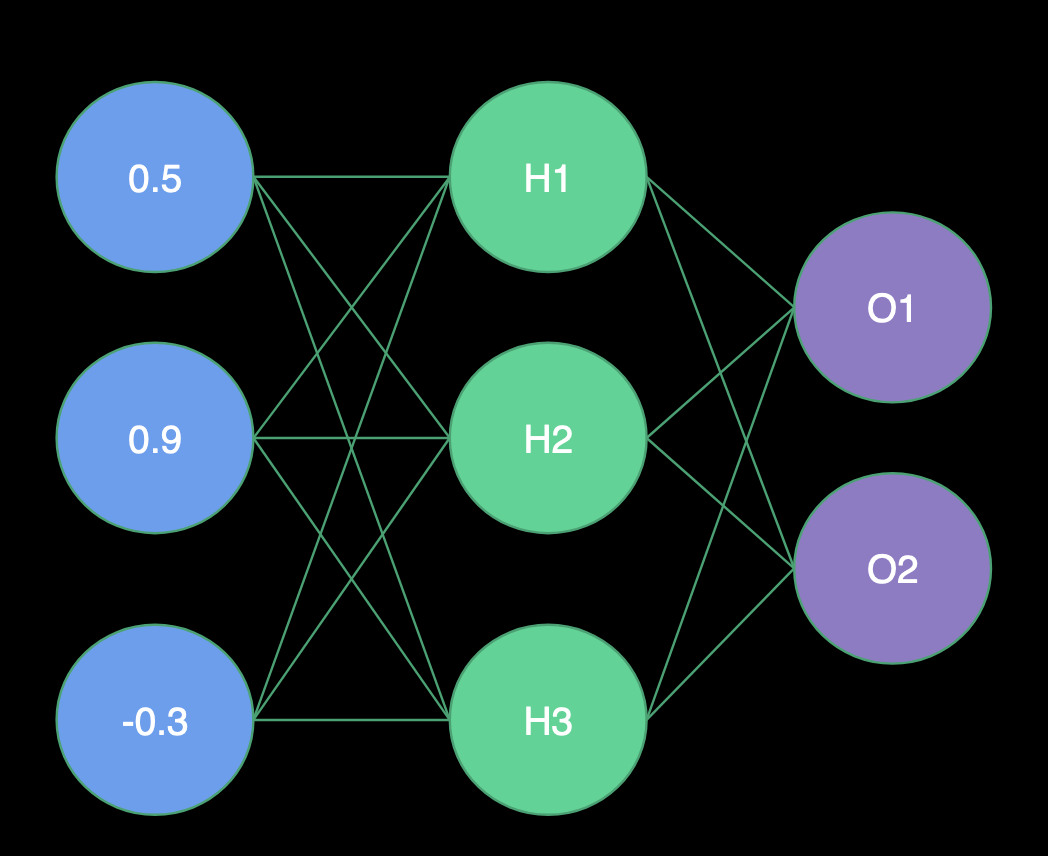

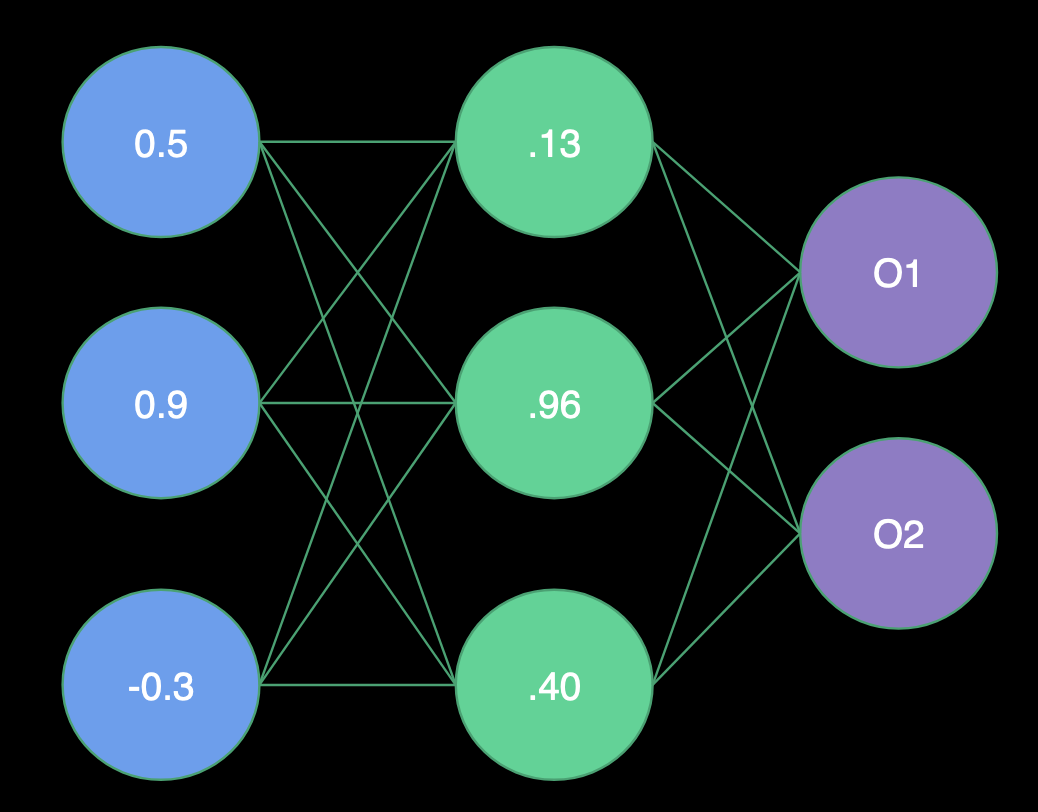

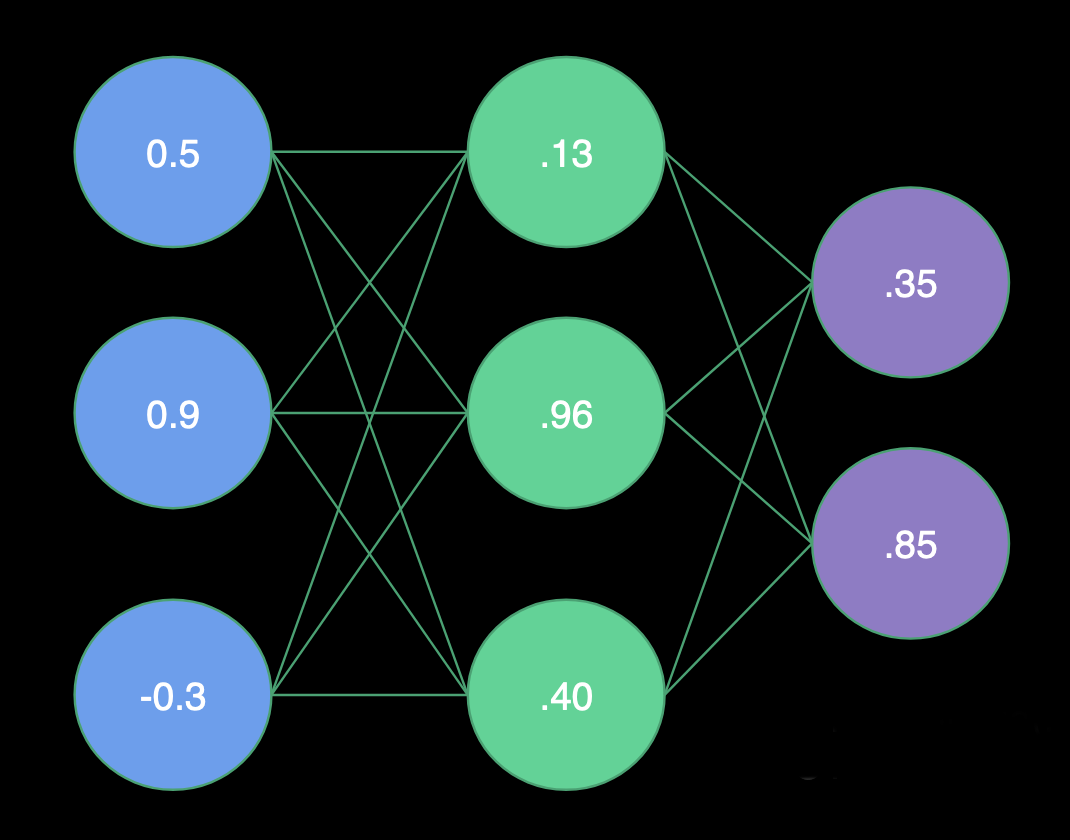

Inference or Foward Propagation

|

|

|

|---|---|---|

| Receiving Input | Computing Hidden Layer | Computing Output |

Receiving Input

- H1 Weights = (1.0, -2.0, 2.0)

- H2 Weights = (2.0, 1.0, -4.0)

- H3 Weights = (1.0, -1.0, 0.0)

- O1 Weights = (-3.0, 1.0, -3.0)

- O2 Weights = (0.0, 1.0, 2.0)

Hidden Layer

- H1 = Sigmoid(0.5 * 1.0 + 0.9 * -2.0 + -0.3 * 2.0) = Sigmoid(-1.9) = .13

- H2 = Sigmoid(0.5 * 2.0 + 0.9 * 1.0 + -0.3 * -4.0) = Sigmoid(3.1) = .96

- H3 = Sigmoid(0.5 * 1.0 + 0.9 * -1.0 + -0.3 * 0.0) = Sigmoid(-0.4) = .40

Output Layer

- O1 = Sigmoid(.13 * -3.0 + .96 * 1.0 + .40 * -3.0) = Sigmoid(-.63) = .35

- O2 = Sigmoid(.13 * 0.0 + .96 * 1.0 + .40 * 2.0) = Sigmoid(1.76) = .85

In terms of Linear Algebra:

[\begin{bmatrix}

1 & -2 & 2

2 & 1 & -4

1 & -1 & 0

\end{bmatrix}

\cdot

\begin{bmatrix}

0.5

0.9

-0.3

\end{bmatrix}

=

\begin{bmatrix}

-1.9

3.1

-0.4

\end{bmatrix}]

The fact that we can describe the problem in terms of Linear Algebra is one of the reasons why Neural Networks are so efficient on GPUs. The same operation as a single execution line looks like this:

Biases

It is also very useful to be able to offset our inputs by some constant. You can think of this as centering the activation function or translating the solution (next slide). We will call this constant the bias, and there will often be one value per layer.

Accounting for Non-Linearity

Neural networks are so effective in classification and regression due to their ability to combine linear and non-linear operations on each step of the evaluation.

- The matrix multiply provides the skew and scale.

- The bias provides the translation.

- The activation function provides the twist.

Training Neural Networks: The backpropagation